Enterprise integration is more challenging than ever before. The IT evolution requires the integration of more and more technologies. Applications are deployed across the edge, hybrid, and multi-cloud architectures. Traditional middleware such as MQ, ETL, ESB does not scale well enough or only processes data in batch instead of real-time. This post explores why Apache Kafka is the new black for integration projects, how Kafka fits into the discussion around cloud-native iPaaS solutions, and why event streaming is a new software category. A concrete real-world example shows the difference between event streaming and traditional integration platforms respectively iPaaS.

What is iPaaS (Enterprise Integration Platform as a Service)?

iPaaS (Enterprise Integration Platform as a Service) is a term coined by Gartner. Here is the official Gartner definition: “Integration Platform as a Service (iPaaS) is a suite of cloud services enabling development, execution, and governance of integration flows connecting any combination of on-premises and cloud-based processes, services, applications, and data within individual or across multiple organizations.” The acronym eiPaaS (Enterprise Integration Platform as a Service)” is used in some reports as a replacement for iPaaS.

The Gartner Magic Quadrant for iPaaS shows various vendors:

Three points stand out for me:

- Many very different vendors provide a broad spectrum of integration solutions.

- Many vendors (have to) list various products to provide an iPaaS; this means different technologies, codebases, support teams, etc.

- No Kafka offering (like Confluent, Cloudera, Amazon MSK) is in the magic quadrant.

The last bullet point makes me wonder if Kafka-based solutions should be considered iPaaS or not?

Is Apache Kafka an iPaaS?

I don’t know. It depends on the definition of the term “iPaaS”. Yes, Kafka solutions fit into the iPaaS, but it is just a piece of the event streaming success story.

Kafka is an event streaming platform. Use cases differ from traditional middleware like MQ, ETL, ESB, or iPaaS. Check out real-world Kafka deployments across industries if you don’t know the use cases yet.

Kafka does not directly compete with ETL tools like Talend or Informatica, MQ frameworks like IBM MQ or RabbitMQ, API Management platforms like MuleSoft, and cloud-based iPaaS like Boomi or TIBCO. At least not if people understand the differences between Kafka and traditional middleware. For that reason, many people (including me) think that Event Streaming should be its Magic Quadrant.

Having said this, all these very different vendors are in the iPaaS Magic Quadrant. So, should Kafka respectively its vendors be in here? I think so because I have seen hundreds of customers leverage the Kafka ecosystem as a cloud-native, scalable, event-driven integration platform, often in hybrid and multi-cloud architectures. And that’s an iPaaS.

What’s different with Kafka as an Integration Platform?

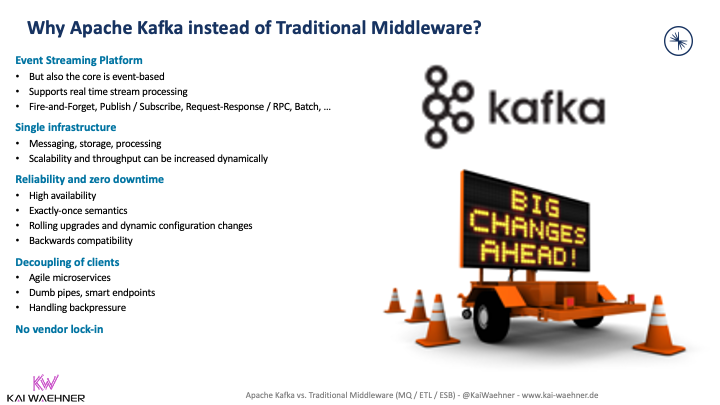

If you are new to this discussion, check out the article “Apache Kafka vs. MQ, ETL, ESB” or the related slides and video. Here is my summary on why Kafka is unique for integration scenarios and therefore adopted everywhere:

A unique selling point for event streaming is the ability to leverage a single platform. In contrast, other iPaaS solutions require different products (including codebases, support teams, integration between the additional tech, etc.).

Kafka as Cloud-native and Serverless iPaaS

Fundamental differences exist between modern iPaaS solutions and traditional legacy middleware; this includes the software architecture, the scalability and operations of the platform, and data processing capabilities. On a high level, an “Kafka iPaaS” requires the following characteristics:

- Cloud-native Infrastructure: Elasticity is vital for success in the modern IT world. The ability to scale up and down (= expanding and shrinking Kafka clusters) is mandatory. This capability enables starting with a small pilot project and scaling up or handling load spikes (like Christmas business in retail).

- Automated Operations: Truly serverless SaaS should always be the preferred option if the software runs in a public cloud. Orchestration tools like Kubernetes and related tools (like a Kafka operator for Kubernetes) are the next best option in a self-managed data center or at the edge outside a data center.

- Complete Platform: An integration platform requires real-time messaging and storage for backpressure handling and long-running processes. Data integration and continuous data processing are mandatory, too. Hence, an “Kafka iPaaS” is only possible if you have access to various pre-built Kafka-native connectors to open standards, legacy systems, and modern SaaS interfaces. Otherwise, Kafka needs to be combined with another middleware like an iPaaS or an ETL tool like Apache Nifi.

- Single Solution: It sounds trivial, but most other middleware solutions use several codebases and products under the hood. Just look at stacks from traditional players such as IBM and Oracle or open-source-driven Cloudera. The complex software stack makes it much harder to provide end-to-end integration, 24/7 operations, and mission-critical support. Don’t get me wrong: Kafka-native solutions like Confluent Cloud also include different products with additional pricing (like a fully-managed connector or data governance add-ons), but all run on a single Kafka-native platform.

From this perspective, some Kafka solutions are modern, cloud-native, scalable, iPaaS. Having said this, would I be happy if you consider some Kafka solutions as an iPaaS on your technology radar? No, not really!

Event Streaming is its Software Category!

While some Kafka solutions can be used as iPaaS, this is only one of many usage scenarios for event streaming. However, as explained above, Kafka-based solutions differ greatly from other iPaaS solutions in the Gartner Magic Quadrant. Hence, event streaming deserves its software category.

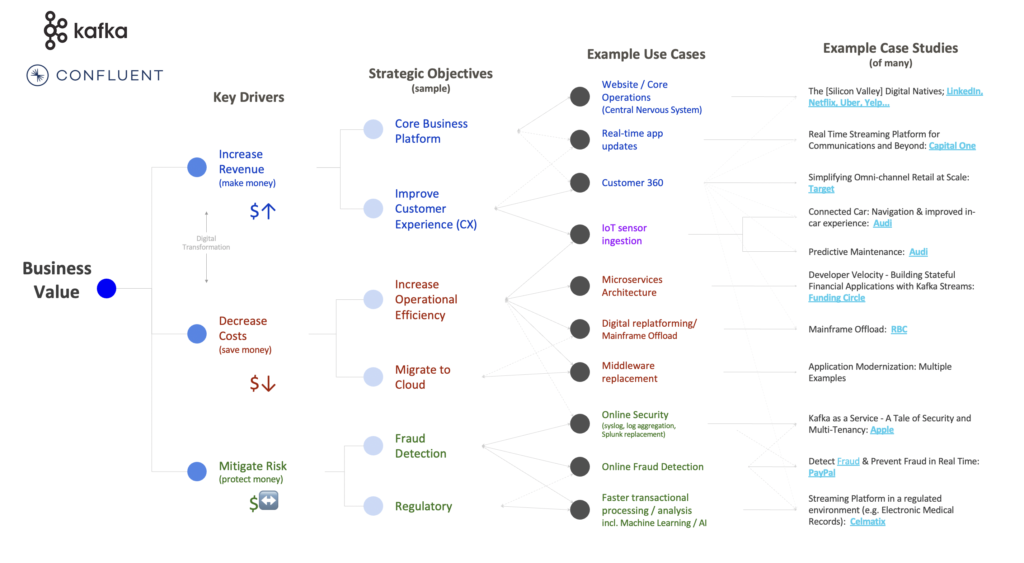

If you still wonder what I mean, check out event streaming use cases across industries to understand the difference between Kafka and traditional iPaaS, MQ, ETL, ESB, API tools. Here is a relatively old but still fantastic diagram that summarizes the broad spectrum of use cases for event streaming:

TL;DR: Kafka provides capabilities for various use cases, not just integration scenarios. Many new innovative business applications are built with Kafka. It is not just an integration platform but a unique suite of capabilities for end-to-end data processing in real-time at scale.

New concepts like Data Mesh also prove the evolution. The basic principles are not unique: Domain-driven design, microservices, true decoupling of services, but now with much more focus on data as a product. The latter means it is turning from a cost center into a profit center and innovative new services. Event streaming is a critical component of a data mesh-powered enterprise architecture as real-time almost always beats slow data across use cases.

The Non-Existing Event Streaming Gartner Magic Quadrant or Forrester Wave

Unfortunately, a Gartner Magic Quadrant or Forrester Wave for Event Streaming does NOT exist today. While some event streaming solutions fit into some of these reports (like the Gartner iPaaS Magic Quadrant or the Forrester Wave for Streaming Analytics), it is still an apple to orange comparison.

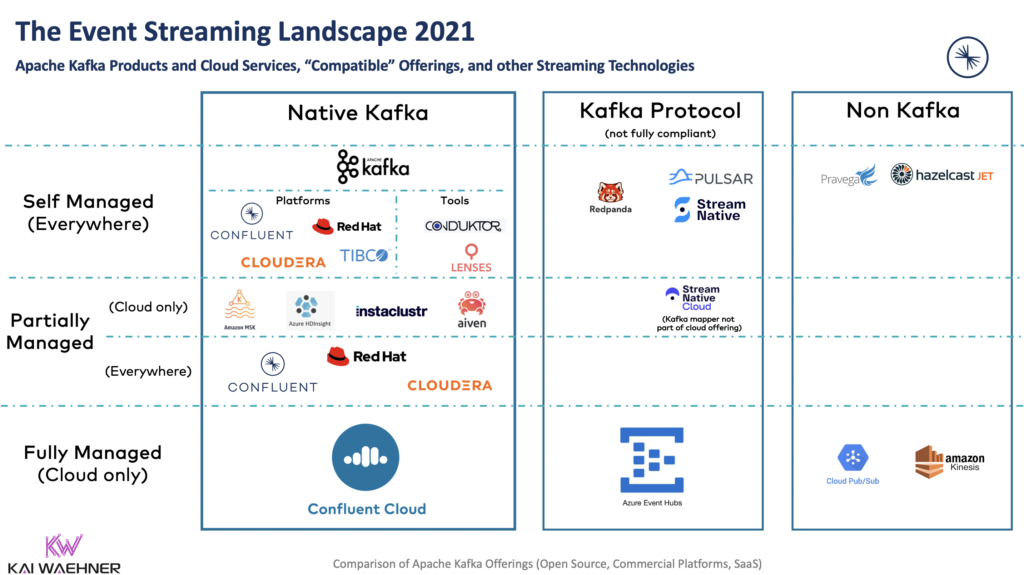

Event Streaming is its category. Many software vendors built their entire business around this category. Confluent is the leader in this space – note that I am biased as a Confluent employee, but I guess there is no question around this statement 🙂 Many other companies emerge around Kafka, or in a related way using the Kafka protocol, or competitive event streaming offerings such as Amazon Kinesis or Apache Pulsar.

The following Event Streaming Landscape 2021 summarizes the current status:

I hope to see a Gartner Magic Quadrant for Event Streaming and a Forrester Wave for Event Streaming soon, too.

Open Source vs. Partially Managed vs. Fully-Managed Event Streaming

One more aspect to point out: You might have noticed that I said, “some event streaming solutions can be considered an iPaaS”. The word “some” is a crucial detail. Just providing an open-source framework is not enough.

iPaaS requires a complete offering, ideally as fully-managed services. Many vendors for event streaming use Kafka, Pulsar, or another framework but do NOT provide a complete offering with operations tooling, commercial 24/7 support, user interfaces, etc. The following resources should help you learn more about the event streaming landscape in 2021:

- Why Kafka became a Standard API like Amazon S3

- Comparison of event streaming and Kafka vendors

- Apache Kafka versus Apache Pulsar

TL;DR: Evaluate the various offerings. A lot of capabilities are just marketing! Many “fully-managed services” are only partially managed instead of serverless and with very limited SLAs and support. Some other offerings provide plenty of cool features but are more an alpha version and overselling than a mature battle-service solution. A counterexample is the complexity in T-Mobile’s report about upgrading Amazon MSK. This shows the difference between “promoting and selling a fully managed service” and the “not at all fully-managed reality”. A truly fully-managed offering does NOT require the end user to upgrade the infrastructure.

Kafka as Event Streaming iPaaS at Deutsche Bahn (German Railway)

Let’s now look at a practicable example to understand why a traditional iPaaS cannot help in use cases that require event streaming and why this combination of capabilities in a single technology sets a new software category.

This section explores a real-world use case with the journey of Deutsche Bahn (German Railway) providing a great customer experience to their customers. This example uses Event Streaming as iPaaS (regarding my definition of these terms).

Use Case: Improve Customer Experience with Real-time Notifications

The use case sounds very simple: Improve the customer experience by providing real-time information and notifications to customers across various channels like websites, smartphones, and displays at train stations.

Delays and cancellations happen in a complex rail network like in Germany. Frequent travelers accept this downside. Nobody can do anything against lousy weather, self-murder using a traveling train, and technical defects.

However, customers at least expect real-time information and notifications so that they can wait in a coffee shop or lounge instead of freezing at the station platform for minutes or even hours. The reality at Deutsche Bahn was a different sequence of notifications: 10min delay, 20min delay, 30min delay, train canceled – take the next train.

The goal of the project Reisendeninformation (= traveler information system) was to improve the day-to-day experience of millions of travelers across Germany by delivering up-to-date, accurate, and consistent travel information in any location.

Initial Project: A mess of different Integration Technologies

Again, the use case sounds simple. Let’s send real-time notifications to customers if a train is delayed or canceled. Every magic black iPaaS box can do this:

Is this true or just the marketing of all the integration vendors? Can each black box integrate all the different systems to correlate events in real-time?

Deutsche Bahn started with an open-source messaging platform to send real-time notifications. Unfortunately, the team quickly found out that not every piece of information was coming in in real-time. So, a caching and storage system was added to the project to handle the late-arriving information from some legacy batch or file-based systems. Now, the data from the legacy systems needed to be integrated. Hence, an integration framework was installed to connect to files, databases, and other applications. Now, the data needed to be processed, correlating real-time and non-real-time data from various systems. A stream processing engine can do this.

The pilot project included several different frameworks. A few skeptical questions came up:

- How to scale this combination of very different technologies?

- How to get end-to-end support across various platforms?

- Is this cost-efficient?

- What is the time-to-market for new features?

Deutsche Bahn re-evaluated their tech stack and found out that Apache Kafka provides all the required capabilities out-of-the-box within one platform.

The Migration to Cloud-native Kafka

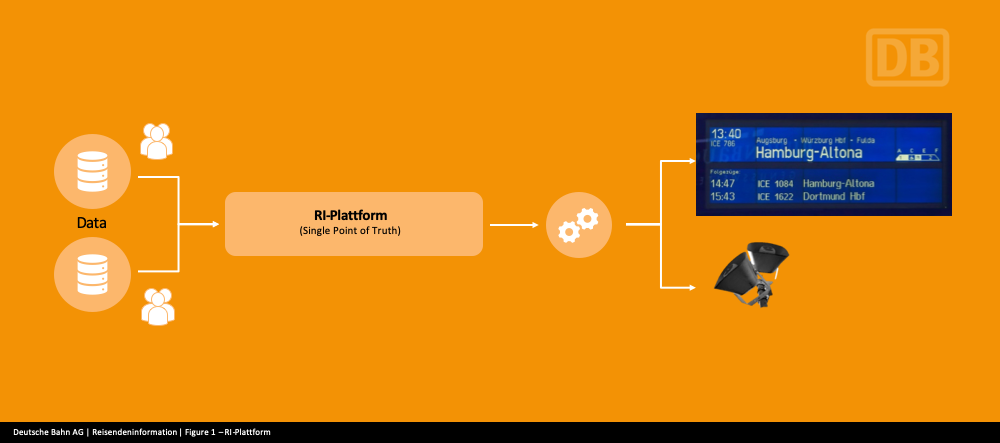

The team at Deutsche Bahn re-architected their pilot project. Here is the new solution leveraging Kafka as the single point of truth between various systems, technologies, and communication paradigms:

A traditional iPaaS can implement this scenario. But with several codebases, technologies, and clusters, even if you select one software vendor! Some iPaaS might even do well in the beginning but struggle to scale up. Only event streaming allows to start small but scales up with no need to re-architect the infrastructure.

Today, the project is in production. Check your DB Navigator mobile app to get real-time updates about all trains in Germany. Living in Germany, I appreciate this new service to have a much better traveling experience.

Learn more about the project from the Deutsche Bahn team. They gave several public talks at different conferences and wrote on the Confluent Blog about their Kafka journey. Though, the journey did not end here 🙂 As described in their blog post, Deutsche Bahn is now evaluating the migration from a self-managed Confluent Platform deployment in the public cloud to the fully-managed, truly serverless Confluent Cloud offering to reduce TCO and improve time-to-market.

Complete Platform: Kafka is more than just Messaging

A project like the one described above is only possible with a complete platform. Many people still think about Kafka as an ingestion layer into a data lake or data warehouse, as this was one of the first prominent Kafka use cases. Data ingestion is still an excellent use case today. Many projects already use more than just the core of Kafka to implement this. Kafka Connect provides out-of-the-box connectivity between Kafka and the data store. If you are in the public cloud, you even get integrated in a fully-managed, serverless manner whether you need to integrate with a 1st party cloud service like Amazon S3, Google Cloud BigQuery, Azure Cosmos DB, or other 3rd SaaS like MongoDB Atlas, Snowflake, or Databricks.

Continous Kafka-native stream processing is the next level of a complete platform. For instance, Deutsche Bahn leverages Kafka Streams a lot for their data correlations in real-time at scale. Other companies use ksqlDB as a fully-managed function in Confluent Cloud. The enormous benefit is that you don’t need yet another platform or service for streaming analytics. A complete platform makes the architecture more cost-efficient, and end-to-end integration is easier from SLA and support perspective.

A complete platform requires many additional services “on top”, like visualization of data flows, data governance, role-based access control, audit logs, self-service capabilities, user interfaces, visual coding/code generation tools, etc. Visual coding is the point where traditional middleware and iPaaS tools are stronger today than event streaming offerings.

3rd Party integration via Open API and non-Kafka Tools

So far, you learned why event streaming is its software category and how Deutsche Bahn is an excellent example to show this. However, event streaming is NOT the silver bullet for every problem! When exploring if Kafka and MQ/ETL/ESB are friends, enemies, or frenemies, I already pointed this out. For instance, MQ or an ESB can complement event streaming in an integration project, depending on your project requirements.

Let’s go back to Deutsche Bahn. As mentioned, their real-time traveler information platform is live, with Kafka as the single point of truth. Recently, Deutsche Bahn announced a partnership with Google and 3rd Party Integration with Google Maps:

Real-time Schedule Updates to 3rd Party Google Maps API

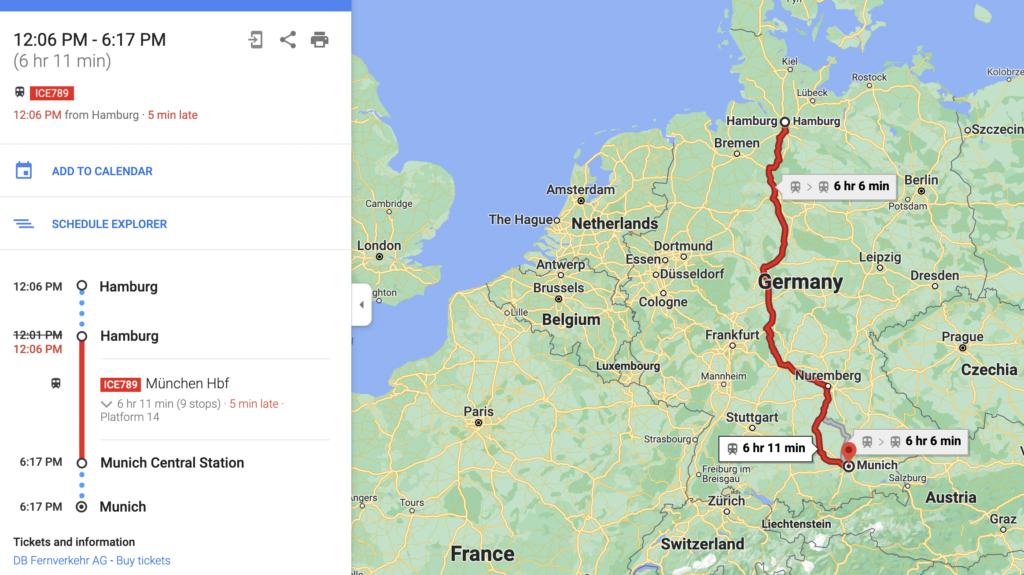

The integration provides real-time train schedule updates to Google Maps users:

The integration allows to reach new people and expand the business. Users can buy train tickets via one click from the Google Maps page.

I don’t know what technology or product this 3rd party integration uses. The heart of Deutsche Bahn’s real-time infrastructure enables new innovative business models and collaboration with partners.

Likely, this integration between Deutsche Bahn and Google Maps does not directly use the Kafka protocol (even though this is done sometimes, for instance, see Here Technologies Open API for their mapping service).

Event Streaming is complementary to other services. In this example, the project team might have used an API Management platform to provide internal APIs to external consumers, including access control, billing, and reporting. The article “Apache Kafka and API Management / API Gateway – Friends, Enemies or Frenemies?” explores the relationship between event streaming and API Management.

Event Streaming Everywhere – Cloud, Data Center, Edge

Real-time beats slow data everywhere. This is a new software category because we don’t just send events into another database via a messaging system. Instead, we use and correlate data from different data source in real-time. That’ the real added value and game changer in innovative projects.

Hence, event streaming must be possible in every location. While cloud-first is a viable strategy for many IT projects, edge and hybrid scenarios are and will continue to be very relevant.

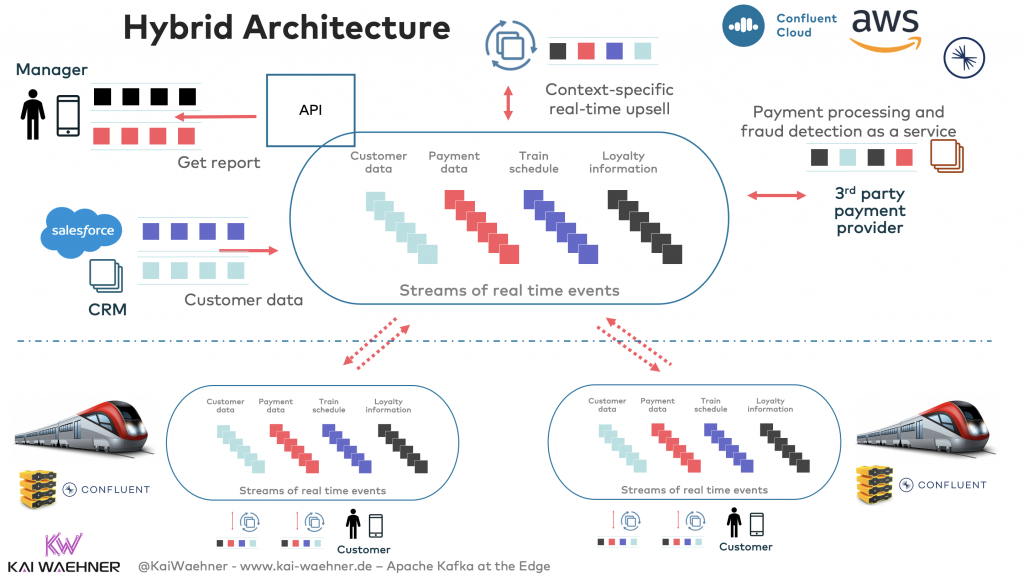

Think about a project related to the Deutsche Bahn example above (but being completely fictive): A hybrid architecture with real-time applications the cloud and edge computing within the trains:

I covered this in other articles, including “Edge Use Cases for Apache Kafka Across Industries“. TL;DR: Leverage the open architecture of event streaming for real-time data processing everywhere, including multi-cloud, data centers, and edge deployments (i.e., outside a data center). The enterprise architecture does not need various technologies and products to implement real-time data processing and integration with separate iPaaS, ETL tools, ESBs, MQ systems.

However, once again, it is crucial to understand how event streaming fits into the enterprise architecture. For instance, Kafka is often combined with IoT technologies such as MQTT for the last mile integration with IoT devices in these edge scenarios.

Slide Deck and Video for “Apache Kafka vs. Cloud-native iPaaS Middleware”

Here are the related slides for this topic:

Click on the button to load the content from www.slideshare.net.

And the video recording of this presentation:

Kafka is a cloud-native iPaaS, and much more!

Kafka is the new black for integration projects across industries because of its unique combination of capabilities. Some Kafka solutions are part of the iPaaS category, with trade-offs like any other integration platform.

However, event streaming is its software category. Hence, iPaaS is just one usage of Kafka or other similar event streaming platforms. Real-time data beats slow data. For that reason, event streaming is the backbone for many projects to process data in motion (but also integrate with other systems that store data at rest for reporting, model training, and other use cases).

How do you leverage event streaming and Kafka as an integration platform? What projects did you already work on or are in the planning? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.

1 comment

Thanks much for producing this kind of content. It is very informative and helpful.

Quick question: in the video there is a mention of a “Confluent Stream Designer for Kafka Data Streams”. Is that a product or tool currently available?

I could not find any mention of it in the Confluent website.

Thanks