Apache Kafka 4.0 is more than a version bump. It marks a pivotal moment in how modern organizations build, operate, and scale their data infrastructure. While developers and architects may celebrate feature-level improvements, the true value of this release is what it enables at the business level: operational excellence, faster time-to-market, and competitive agility powered by data in motion. Kafka 4.0 represents a maturity milestone in the evolution of the event-driven enterprise.

Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter, and follow me on LinkedIn or X (formerly Twitter) to stay in touch. You can also download my free book about data streaming use cases and business value, including customer stories across all industries.

From Event Hype to Event Infrastructure

Over the last decade, Apache Kafka has evolved from a scalable log for engineers at LinkedIn to the de facto event streaming platform adopted across every industry. Banks, automakers, telcos, logistics firms, and retailers alike rely on Kafka as the nervous system for critical data.

Today, over 150,000 organizations globally use Apache Kafka to enable real-time operations, modernize legacy systems, and support digital innovation. Kafka 4.0 moves even deeper into this role as a business-critical backbone. If you want to learn more about use cases and industry success stories, download my free ebook and subscribe to my newsletter.

Version 4.0 of Apache Kafka signals readiness for CIOs, CTOs, and enterprise architects who demand:

- Uninterrupted uptime and failover for global operations

- Data-driven automation and decision-making at scale

- Flexible deployment across on-premises, cloud, and edge environments

- A future-proof foundation for modernization and innovation

Apache Kafka 4.0 doesn’t just scale throughput; it scales business outcomes.

This post does not cover the technical improvements and new features of the 4.0 release, like ZooKeeper removal, Queues for Kafka, and so on. Those are well-documented elsewhere. Instead, it highlights the strategic business value Kafka 4.0 delivers to modern enterprises.

Kafka 4.0: A Platform Built for Growth

Today’s IT leaders are not just looking at throughput and latency. They are investing in platforms that align with long-term architectural goals and unlock value across the organization.

Apache Kafka 4.0 offers four core advantages for business growth:

1. Open De Facto Standard for Data Streaming

Apache Kafka is the open, vendor-neutral protocol that has become the de facto standard for data streaming across industries. Its wide adoption and strong community ecosystem make it both a reliable choice and a flexible one.

Organizations can choose between open-source Kafka distributions, managed services like Confluent Cloud, or even build their own custom engines using Kafka’s open protocol. This openness enables strategic independence and long-term adaptability—critical factors for any enterprise architect planning a future-proof data infrastructure.

2. Operational Efficiency at Enterprise Scale

Reliability, resilience, and ease of operation are key to any business infrastructure. Kafka 4.0 reduces operational complexity and increases uptime through a simplified architecture. Key components of the platform have been re-engineered to streamline deployment and reduce points of failure, minimizing the effort required to keep systems running smoothly.

Kafka is now easier to manage, scale, and secure, whether deployed in the cloud, on-premises, or at the edge in environments like factories or retail locations. It reduces the need for lengthy maintenance windows, accelerates troubleshooting, and makes system upgrades far less disruptive. As a result, leaner teams can support larger, more complex workloads with greater confidence and stability.

Storage management has also evolved in recent releases by decoupling compute and storage, for example through Tiered Storage, which offloads older log segments to object storage. This allows organizations to retain large volumes of event data cost-effectively without compromising performance, and it extends Kafka’s role from a real-time pipeline to a durable system of record that supports both immediate and long-term data needs.
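As a rough illustration of what decoupling compute and storage can look like, here is a deliberately simplified Python sketch modeled on Kafka's Tiered Storage feature (KIP-405). The topic settings `remote.storage.enable`, `local.retention.ms`, and `retention.ms` are real Kafka configuration names, but the helper `segment_location` and the retention values are hypothetical and only approximate the broker's actual behavior.

```python
HOUR_MS = 60 * 60 * 1000
DAY_MS = 24 * HOUR_MS

# Illustrative topic settings (values are assumptions, not recommendations):
# keep a few hours on broker disks, keep a full year in object storage.
topic_config = {
    "remote.storage.enable": "true",
    "local.retention.ms": 6 * HOUR_MS,
    "retention.ms": 365 * DAY_MS,
}


def segment_location(age_ms, config):
    """Simplified view of where a closed log segment of a given age is served from.

    Hypothetical helper: real brokers also consider size-based retention,
    segment rolling, and copy lag, all of which are ignored here.
    """
    if age_ms > config["retention.ms"]:
        return "deleted"  # past total retention, gone everywhere
    if age_ms > config["local.retention.ms"]:
        return "remote"   # only available from object storage
    return "local"        # still on broker disk (may also be copied to remote)


recent = segment_location(1 * HOUR_MS, topic_config)
last_month = segment_location(30 * DAY_MS, topic_config)
expired = segment_location(400 * DAY_MS, topic_config)
print(recent, last_month, expired)
```

The point of the sketch is the business lever: local retention sizes the expensive broker disks, while total retention is governed separately and backed by cheaper object storage.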

With fewer manual interventions, less custom integration, and more built-in intelligence, Kafka 4.0 allows engineering teams to focus on delivering new services and capabilities—rather than maintaining infrastructure. This operational maturity translates directly into faster time-to-value and lower total cost of ownership at enterprise scale.

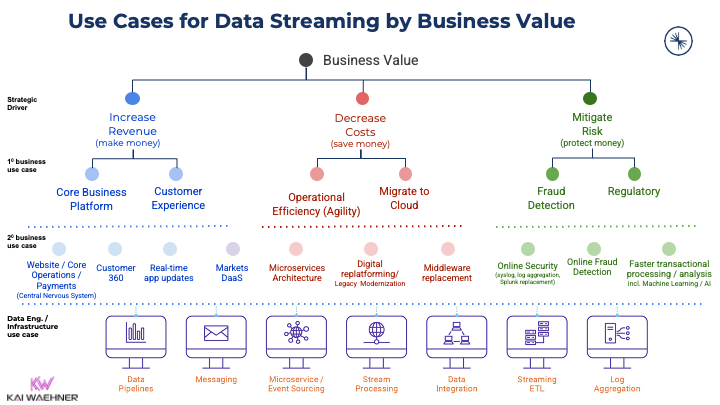

3. Innovation Enablement Through Real-Time Data

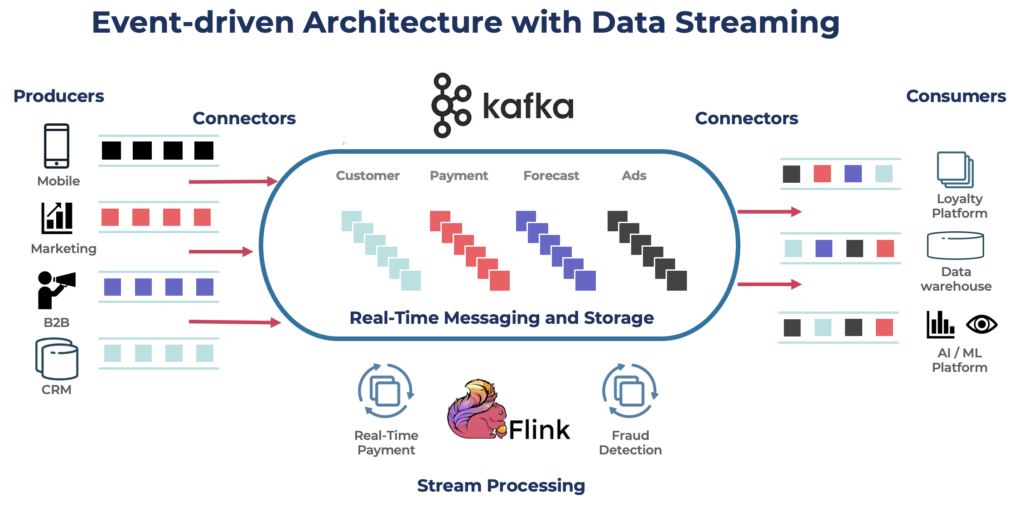

Real-time data unlocks entirely new business models: predictive maintenance in manufacturing, personalized digital experiences in retail, and fraud detection in financial services. Kafka 4.0 empowers teams to build applications around streams of events, driving automation and responsiveness across the value chain.

This shift is not just technical—it’s organizational. Kafka decouples producers and consumers of data, enabling individual teams to innovate independently without being held back by rigid system dependencies or central coordination. Whether building with Java, Python, Go, or integrating with SaaS platforms and cloud-native services, teams can choose the tools and technologies that best fit their goals.

This architectural flexibility accelerates development cycles and reduces cross-team friction. As a result, new features and services reach the market faster, experimentation is easier, and the overall organization becomes more agile in responding to customer needs and competitive pressures. Kafka 4.0 turns real-time architecture into a strategic asset for business acceleration.
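To make the decoupling of producers and consumers more concrete, here is a minimal in-memory sketch in Python. It is not Kafka client code: `TopicLog` is a hypothetical toy stand-in for a single-partition topic, and the group names are invented for illustration. It only shows the core idea that the producer appends to a log while each consuming team tracks its own position.

```python
from collections import defaultdict


class TopicLog:
    """Toy stand-in for a single-partition Kafka topic: an append-only list."""

    def __init__(self):
        self._records = []
        # Each consumer group tracks its own read position (offset).
        self._offsets = defaultdict(int)

    def produce(self, value):
        """Append a record and return its offset; the producer knows nothing about readers."""
        self._records.append(value)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Return the next unread records for `group` and advance only that group's offset."""
        start = self._offsets[group]
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


orders = TopicLog()
for order in ("order-1", "order-2", "order-3"):
    orders.produce(order)

# Two hypothetical teams read the same events at their own pace.
fraud_batch = orders.poll("fraud-detection", max_records=2)
analytics_batch = orders.poll("analytics")

# A team onboarded later can still replay the log from the beginning.
late_batch = orders.poll("recommendations")
print(fraud_batch, analytics_batch, late_batch)
```

Because no consumer's progress affects another's, a new team can be added without any change to the producer or to existing consumers, which is the organizational benefit described above.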

4. Cloud-Native Flexibility

Kafka 4.0 reinforces Kafka’s role as the backbone of hybrid and multi-cloud strategies. In a data streaming landscape that spans public cloud, private infrastructure, and on-premises environments, Kafka provides the consistency, portability, and control that modern organizations require.

Whether deployed in AWS, Azure, GCP, or edge locations like factories or retail stores, Kafka delivers uniform performance, API compatibility, and integration capabilities. This ensures operational continuity across regions, satisfies data sovereignty and regulatory needs, and reduces latency by keeping data processing close to where it’s generated.

Beyond Kafka brokers, it is the Kafka protocol itself that has become the standard for real-time data streaming—adopted by vendors, platforms, and developers alike. This protocol standardization gives organizations the freedom to integrate with a growing ecosystem of tools, services, and managed offerings that speak Kafka natively, regardless of the underlying engine.

For instance, innovative data streaming platforms built using the Kafka protocol, such as WarpStream, provide a Bring Your Own Cloud (BYOC) model to allow organizations to maintain full control over their data and infrastructure while still benefiting from managed services and platform automation. This flexibility is especially valuable in regulated industries and globally distributed enterprises, where cloud neutrality and deployment independence are strategic priorities.
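The practical effect of protocol standardization can be sketched with client configuration. In the Python snippet below, the property names (`bootstrap.servers`, `group.id`, `auto.offset.reset`) are standard Kafka consumer settings, while the helper `client_config` and both hostnames are hypothetical. In real deployments, security settings usually differ between providers as well, so treat this as an idealized picture.

```python
def client_config(bootstrap_servers, group_id):
    """Build a consumer configuration; only the endpoint is environment-specific."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "group.id": group_id,
        "auto.offset.reset": "earliest",
    }


# Hypothetical endpoints: a self-managed cluster and a Kafka-compatible service.
self_managed = client_config("kafka.internal.example:9092", "orders-app")
managed = client_config("broker.cloud.example:9092", "orders-app")

# Which settings had to change when switching the underlying engine?
changed = {key for key in self_managed if self_managed[key] != managed[key]}
print(changed)
```

Application code written against the Kafka protocol stays the same; in this idealized case only the connection endpoint moves, which is what keeps migration and multi-vendor strategies realistic.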

Kafka 4.0 not only supports cloud-native operations—it strengthens the organization’s ability to evolve, modernize, and scale without vendor lock-in or architectural compromise.

Real-Time as a Business Imperative

Data is no longer static. It is dynamic, fast-moving, and continuous. Businesses that treat data as something to collect and analyze later will fall behind. Kafka enables a shift from data at rest to data in motion.

Kafka 4.0 supports this transformation across all industries. For instance:

- Automotive: Streaming data from factories, fleets, and connected vehicles

- Banking: Real-time fraud detection and transaction analytics

- Telecom: Customer engagement, network monitoring, and monetization

- Healthcare: Monitoring devices, alerts, and compliance tracking

- Retail: Dynamic pricing, inventory tracking, and personalized offers

These use cases cannot be solved by daily batch jobs. Kafka 4.0 enables systems, and decision-making, to operate at business speed. The article “The Top 20 Problems with Batch Processing (and How to Fix Them with Data Streaming)” explores this in more detail.

Additionally, Apache Kafka ensures data consistency across real-time streams, batch processes, and request-response APIs—because not all workloads are real-time, and that’s okay.

The Kafka Ecosystem and the Data Streaming Landscape

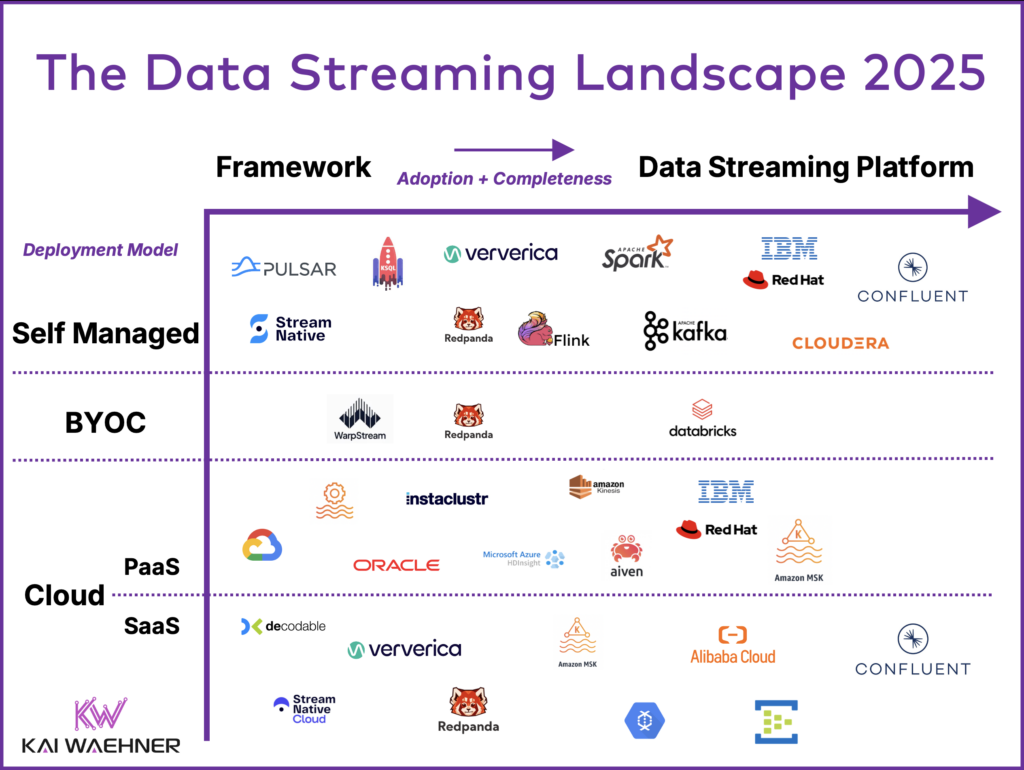

Running Apache Kafka at enterprise scale requires more than the open-source core project. Kafka has become the de facto standard for data streaming, but real-time applications also demand capabilities like data integration, stream processing, governance, security, and 24/7 operational support.

Today, a rich and rapidly developing data streaming ecosystem has emerged. Organizations can choose from a growing number of platforms and cloud services built on or compatible with the Kafka protocol—ranging from self-managed infrastructure to Bring Your Own Cloud (BYOC) models and fully managed SaaS offerings. These solutions aim to simplify operations, accelerate time-to-market, and reduce risk while maintaining the flexibility and openness that Kafka is known for.

Confluent leads this category as the most complete data streaming platform, but it is part of a broader ecosystem that includes offerings like Amazon MSK, Cloudera, and Azure Event Hubs, as well as emerging players in cloud-native and BYOC deployments. The data streaming landscape explores all the different vendors in this software category.

The market is moving toward complete data streaming platforms (DSP)—offering end-to-end capabilities from ingestion to stream processing and governance. Choosing the right solution means evaluating not only performance and compatibility but also how well the platform aligns with your business strategy, security requirements, and deployment preferences.

Kafka is at the center—but the future of data streaming belongs to platforms that turn Kafka 4.0’s architecture into real business value.

The Road Ahead with Apache Kafka 4.0 and Beyond

Apache Kafka 4.0 is a strategic enabler of modernization, innovation, and resilience. It directly supports three key transformation goals:

- Modernization without disruption: Kafka integrates seamlessly with legacy systems and provides a bridge to cloud-native, event-driven architectures.

- Platform standardization: Kafka becomes a central nervous system across departments and business units, reducing fragmentation and enabling shared services.

- Faster ROI from digital initiatives: Kafka accelerates the launch and evolution of digital services, helping teams iterate and deliver measurable value quickly.

Kafka 4.0 reduces operational complexity, unlocks developer productivity, and allows organizations to respond in real time to both opportunities and risks. This release marks a significant milestone in the evolution of real-time business architecture.

Kafka is no longer an emerging technology; it is a reliable foundation for companies that treat data as a continuous, strategic asset. Data streaming is now as foundational as databases and APIs. With Kafka 4.0, organizations can build connected products, automate operations, and reinvent the customer experience more easily than ever before.

And with innovations on the horizon—such as built-in queueing capabilities, brokerless writes directly to object storage, and expanded transactional guarantees supporting the two-phase commit protocol (2PC)—Kafka continues to push the boundaries of what’s possible in real-time, event-driven architecture.

The future of digital business is real-time. Apache Kafka 4.0 is ready.

Want to learn more about Kafka in the enterprise? Let’s connect and exchange ideas. Subscribe to the Data Streaming Newsletter. Explore the Kafka Use Case Book for real-world stories from industry leaders.