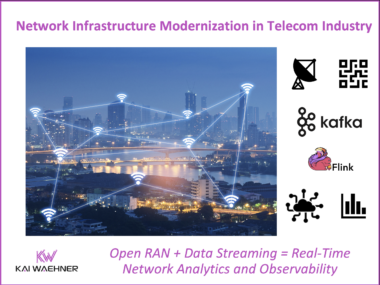

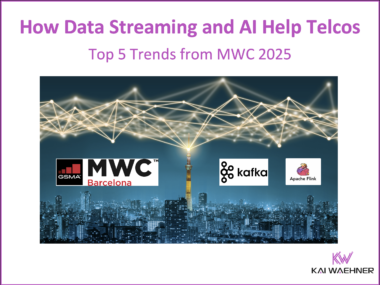

Open RAN and Data Streaming: How the Telecom Industry Modernizes Network Infrastructure with Apache Kafka and Flink

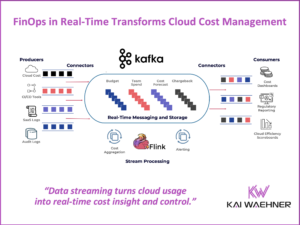

Open RAN is transforming telecom by decoupling hardware and software to unlock flexibility, innovation, and cost savings. But to fully realize its potential, telcos need real-time data streaming for observability, automation, and AI. This post shows how Apache Kafka, Apache Flink, and a diskless data streaming platform like Confluent WarpStream help telco operators scale RAN data processing securely and cost-effectively.