Augmented Reality (AR) and Virtual Reality (VR) are gaining traction across industries far beyond gaming. Retail, manufacturing, transportation, healthcare, and other verticals adopt them more and more. This blog post explores a retail demo that integrates a cutting-edge augmented reality mobile shopping experience with backend systems via the event streaming platform Apache Kafka.

Augmented Reality (AR) and Virtual Reality (VR)

Augmented reality (AR) is an interactive experience of a real-world environment where the objects in the real world are enhanced by computer-generated perceptual information. AR is a system that fulfills three basic features: a combination of real and virtual worlds, real-time interaction, and accurate 3D registration of virtual and real objects.

Pokémon GO from Niantic is one of the most famous AR applications and is used by millions of people. The adoption of AR has increased significantly in the last few years because modern smartphones and tablets support AR out of the box. Fun fact: Pokémon GO is also a great success story for Google Cloud and Kubernetes, which provide its elastic and scalable infrastructure (see "Bringing Pokémon GO to life on Google Cloud").

Augmented reality alters one’s ongoing perception of a real-world environment, whereas Virtual Reality (VR) completely replaces the user’s real-world environment with a simulated one. While the demo below is built for AR, Kafka can be combined with VR use cases in the same way.

Apache Kafka and Augmented Reality SDKs

Before we jump to the use case, let’s be clear: AR and VR are not directly related to Apache Kafka. However, they complement each other well for implementing innovative use cases. Most AR and VR applications need to communicate with backend services and other users to provide the expected user experience. In real-time. At scale. 24/7. That’s why Kafka is a perfect fit for AR and VR, just like in the many other use cases across verticals where event streaming helps.

AR and VR applications are built with specialized 3D engines and related technologies. These include:

- Game engines (which are increasingly moving into other markets) like Unity 3D, Unreal Engine, CryEngine, Lumberyard

- Physics engines like Bullet, Havok, PhysX

- Vertical solutions, for instance, Industry 4.0 / Industrial IoT (IIoT) products from PTC, Siemens, or General Electric

- AR/VR SDKs that are either included in the 3D engines or available as separate technologies, such as Apple’s ARKit for iOS devices, Google’s ARCore, Vuforia, or EasyAR

Fun fact: Many of these engines and vertical solutions do not just sell the software. They also provide additional services on top of their products. For the same reasons as at other companies, the central nervous system of these services is often Apache Kafka. For instance, Unity is a heavy user of Apache Kafka in Confluent Cloud, handling on average about half a million events per second and processing millions of dollars in monetary transactions for its in-game purchase and advertising services.

Use Cases for Kafka and Augmented Reality

This blog post explores a use case from the retail industry: Online shopping with an enhanced user experience and location-based services. Similar examples exist across industries:

- Mobility services: Enhanced customer experience and location-based services for navigation, logistics, …

- Manufacturing: Education and training on simulated machines, equipment repair

- Gaming: Augmented real-world environments

- Smart City: Simulated planning of urban areas, electricity, water, …

- Industry-agnostic: Improved planning (before production), ergonomics simulation tests

- Etc.

The heart of the applications is the AR/VR hardware and app.

Apache Kafka is the central nervous system for the data: it integrates with other systems and continuously correlates the aggregated data sets in real-time.

The Architecture of a Cutting-Edge Retail Example

Kafka is the new black in retail across various use cases. Learn more about event streaming in retail for omnichannel customer 360 experiences and about hybrid Kafka retail architectures with edge deployments in the smart retail store.

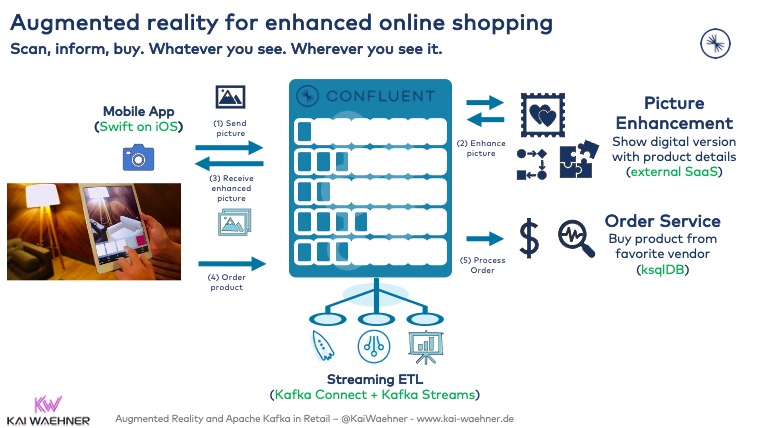

The following example demonstrates online shopping with an enhanced user experience and location-based services. Customers can buy anything they see. Anywhere. The customer takes a picture of the item. The backend processes the picture and augments it with additional information such as a digital image, product details, and a list of shops that sell it.
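To make the backend step more concrete, here is a minimal sketch in plain Python of the enrichment described above. It assumes that image recognition has already turned the photo into a product ID, and it imitates what a stream-table join in Kafka Streams or ksqlDB would do: each incoming event is matched against the latest known product record. The event types, field names, and the `ProductTable` and `enrich` helpers are hypothetical illustrations, not code from the demo, and no Kafka client is involved.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class PhotoRecognized:
    # Hypothetical event emitted after the backend recognized the product in a photo
    customer_id: str
    product_id: str


@dataclass
class EnrichedOffer:
    # Hypothetical result sent back to the AR app
    customer_id: str
    product_id: str
    name: str
    price: float
    image_url: str
    shops: list = field(default_factory=list)


class ProductTable:
    """Keeps only the latest value per key, similar to a table built from a compacted topic."""

    def __init__(self):
        self._state = {}

    def upsert(self, key, value):
        # Last write wins: a newer record replaces the older one for the same key
        self._state[key] = value

    def get(self, key):
        return self._state.get(key)


def enrich(event: PhotoRecognized, products: ProductTable,
           shops_by_product: dict) -> Optional[EnrichedOffer]:
    """Join one event against the current table state (inner-join semantics)."""
    product = products.get(event.product_id)
    if product is None:
        return None  # unknown product: no output, like an inner stream-table join
    return EnrichedOffer(
        customer_id=event.customer_id,
        product_id=event.product_id,
        name=product["name"],
        price=product["price"],
        image_url=product["image_url"],
        shops=sorted(shops_by_product.get(event.product_id, [])),
    )


if __name__ == "__main__":
    products = ProductTable()
    products.upsert("sku-42", {"name": "Sneaker", "price": 89.0,
                               "image_url": "https://example.com/sku-42.png"})
    # A later price update replaces the earlier record for the same key
    products.upsert("sku-42", {"name": "Sneaker", "price": 79.0,
                               "image_url": "https://example.com/sku-42.png"})
    offer = enrich(PhotoRecognized("c-1", "sku-42"), products,
                   {"sku-42": ["Store B", "Store A"]})
    print(offer.price, offer.shops)  # 79.0 ['Store A', 'Store B']
```

The last-write-wins table mirrors how a table materialized from a compacted topic keeps only the newest record per key, so the customer sees the most recent price that reached the backend before the lookup. The `example.com` URL is a placeholder.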

This example shows how important it is to integrate the AR app with the company’s backend services.

A few notes on the architecture:

- The AR enhancements are possible on the client device or server-side. This depends on the business case and technical environment. For instance, the app could store product images locally and only load frequently changing details, such as the current price, from the server.

- Kafka can handle large messages (like images from the phone camera). Nevertheless, the trade-offs of doing so need to be evaluated carefully.

- The example uses Swift for the mobile app and Kafka-native stream processing tools (Kafka Streams and ksqlDB) on the backend. However, the architecture is very flexible. Any other technology or third-party SaaS service is possible. That’s the beauty of Kafka’s strong support for domain-driven design (DDD).
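Regarding the trade-offs for large messages: Kafka’s default maximum message size is about 1 MB (broker setting `message.max.bytes`, producer setting `max.request.size`), and raising these limits has implications for broker memory, replication traffic, and consumer fetch settings. A widely used alternative is the claim-check pattern: put the photo into an object store and publish only a small reference. The sketch below shows that decision in plain Python, assuming a hypothetical in-memory `ObjectStore` (standing in for blob storage) and a hypothetical 512 KiB inline threshold. It is one possible approach, not how the demo handles images.

```python
import hashlib

# Hypothetical threshold, kept well below Kafka's ~1 MB default to leave room for headers and envelope
INLINE_LIMIT_BYTES = 512 * 1024


class ObjectStore:
    """Hypothetical in-memory stand-in for blob storage."""

    PREFIX = "blob://"

    def __init__(self):
        self._blobs = {}

    def put(self, data: bytes) -> str:
        # Content-addressed key: identical photos map to the same object
        key = hashlib.sha256(data).hexdigest()
        self._blobs[key] = data
        return self.PREFIX + key

    def get(self, uri: str) -> bytes:
        return self._blobs[uri[len(self.PREFIX):]]


def build_photo_event(customer_id: str, photo: bytes, store: ObjectStore) -> dict:
    """Return the event to publish: the photo inline if small, otherwise a claim-check reference."""
    if len(photo) <= INLINE_LIMIT_BYTES:
        return {"customer_id": customer_id, "photo": photo}
    return {"customer_id": customer_id, "photo_uri": store.put(photo)}


def resolve_photo(event: dict, store: ObjectStore) -> bytes:
    """Consumer side: read the inline photo or fetch it via the reference."""
    if "photo" in event:
        return event["photo"]
    return store.get(event["photo_uri"])


if __name__ == "__main__":
    store = ObjectStore()
    small = build_photo_event("c-1", b"\x00" * 100, store)
    large = build_photo_event("c-1", b"\x00" * (2 * 1024 * 1024), store)
    print(sorted(small))  # ['customer_id', 'photo']
    print(sorted(large))  # ['customer_id', 'photo_uri']
```

Consumers call `resolve_photo` and do not need to know which path the producer took. The price is an extra round trip to the object store for large photos and a second system that must stay available.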

Live Demo Video

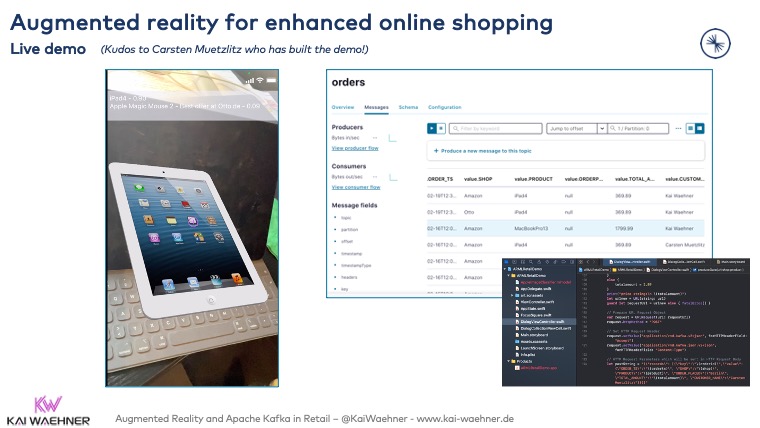

The following video demonstrates the above use case of a smart retail experience with AR. Kudos to my colleague Carsten Muetzlitz, who implemented the demo.

The demo uses Swift, ARKit, Xcode, and Confluent Cloud. Hence, it only supports Apple iOS devices. But hey, it is just a demo to share the idea of building an AR app and integrating and correlating its data in real-time, at scale, with other backend systems using the Kafka ecosystem. Confluent Cloud provides the serverless SaaS offering so that the developer can focus on building the AR app and the business applications in the backend.

Augmented Reality + Apache Kafka = Science Fiction?!

Augmented Reality and Virtual Reality are still in the early stages. Use cases like the online shopping experience – where you can buy anything you see anywhere – are often just a vision. But they are not far from reality.

Kafka deployments already exist across industries and business units. If you want to build an innovative AR or VR app, it is just another microservice and mobile app connecting to the Kafka infrastructure. Integrating AR/VR into the supply chain is pretty straightforward because Kafka is typically already connected to the payment infrastructure, real-time inventory, and so on.

What are your experiences and plans for event streaming together with AR/VR use cases? Did you already build applications with Apache Kafka? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.