The rise of data in motion in the insurance industry is visible across all lines of business, including life, healthcare, travel, vehicle, and others. Apache Kafka changes how enterprises rethink data. This blog post explores use cases and architectures for event streaming. Real-world examples from Generali, Centene, Humana, and Tesla show innovative insurance-related data integration and stream processing in real-time.

Digital Transformation in the Insurance Industry

Most insurance companies have similar challenges:

- Challenging market environments

- Stagnating economy

- Regulatory pressure

- Changes in customer expectations

- Proprietary and monolithic legacy applications

- Emerging competition from innovative insurtechs

- Emerging competition from other verticals that add insurance products

Only a good transformation strategy guarantees a successful future for traditional insurance companies. Nobody wants to become the next Nokia (mobile phone), Kodak (photo camera), or BlockBuster (video rental). If you fail to innovate in time, you are done.

Real-time beats slow data. Automation beats manual processes. The combination of these two game changers creates completely new business models in the insurance industry. Some examples:

- Claims processing including review, investigation, adjustment, remittance or denial of the claim

- Claim fraud detection by leveraging analytic models trained with historical data

- Omnichannel customer interactions including a self-service portal and automated tools like NLP-powered chatbots

- Risk prediction based on lab testing, biometric data, claims data, patient-generated health data (depending on the laws of a specific country)

These are just a few examples.

The shift to real-time data processing and automation is key for many other use cases, too. Machine learning and deep learning enable the automation of many manual and error-prone processes like document and text processing.

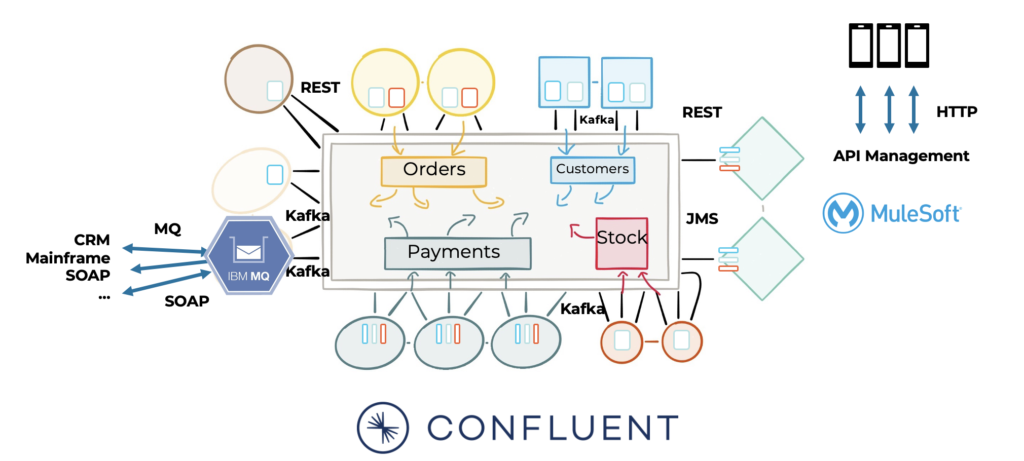

The Need for Brownfield Integration

Traditional insurance companies usually (have to) start with brownfield integration before building new use cases. The integration of legacy systems with modern application infrastructures and the replication between data centers and public or private cloud-native infrastructures are a key piece of the puzzle.

Common integration scenarios use traditional middleware that is already in place. This includes MQ, ETL, ESB, and API tools. Kafka is complementary to these middleware tools:

More details about this topic are available in the following two posts:

- Kafka and Traditional Middleware – Friends, Enemies, Frenemies?

- Apache Kafka vs. API Management / API Gateway

Greenfield Applications at Insurtech Companies

Insurtechs have a huge advantage: They can start greenfield. There is no need to integrate with legacy applications and monolithic architectures. Hence, some traditional insurance companies go the same way. They start from scratch with new applications instead of trying to integrate old and new systems.

This setup has a huge architectural advantage: There is no need for traditional middleware as only modern protocols and APIs need to be integrated. No monolithic and proprietary interfaces such as Cobol, EDI, or SAP BAPI/iDoc exist in this scenario. Kafka makes new applications agile, scalable, and flexible with open interfaces and real-time capabilities.

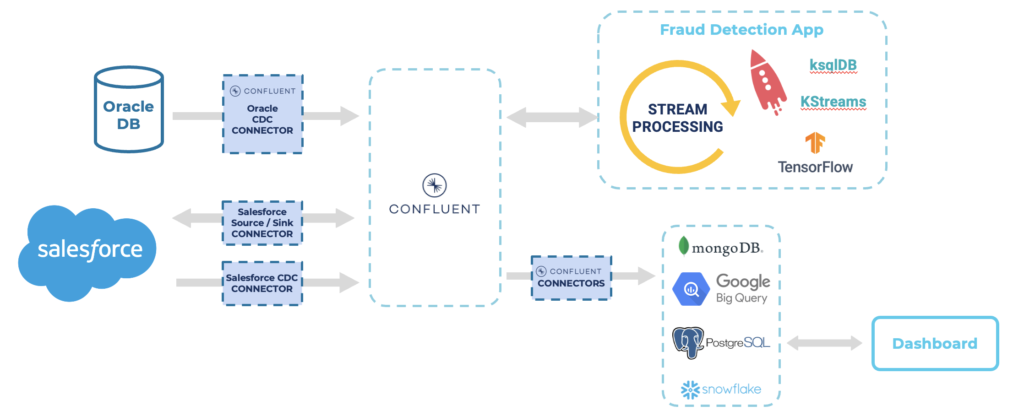

Here is an example of an event streaming architecture for claim processing and fraud detection with the Kafka ecosystem:

Real-World Deployments of Kafka in the Insurance Industry

Let’s take a look at a few examples of real-world deployments of Kafka in the insurance industry.

Generali – Kafka as Integration Platform

Generali is one of the top ten largest insurance companies in the world. The digital transformation from Generali Switzerland started with Confluent as a strategic integration platform. They started their journey by integrating with hundreds of legacy systems like relational databases. Change Data Capture (CDC) pushes changes into Kafka in real-time. Kafka is the central nervous system and integration platform for the data. Other real-time and batch applications consume the events.

From here, other applications consume the data for further processing. All applications are decoupled from each other. This is one of the unique benefits of Kafka compared to other messaging systems. Real decoupling and domain-driven design (DDD) are not possible with traditional MQ systems or SOAP / REST web services.

Design Principles of Generali’s Cloud-Native Architecture

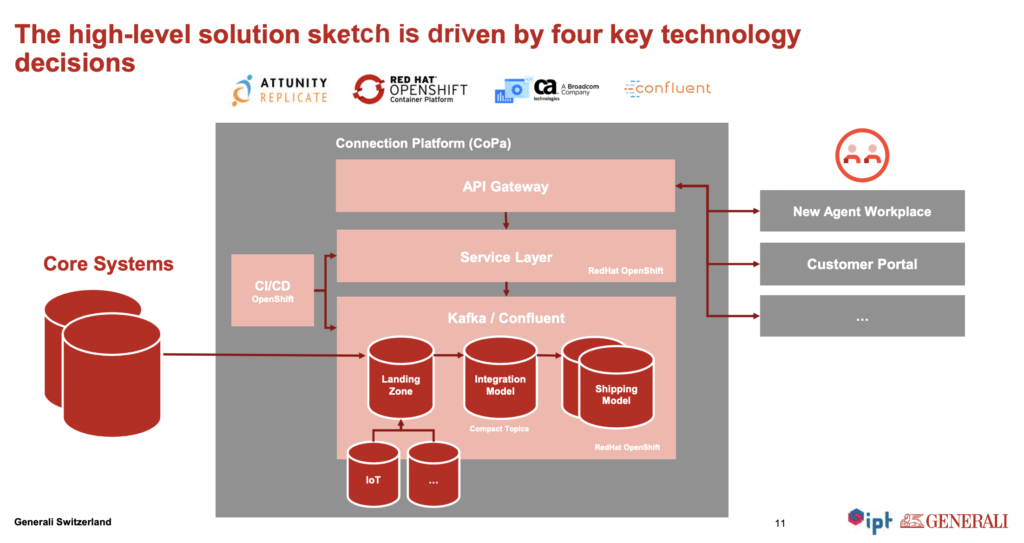

The key design principles for the next-generation platform at Generali include agility, scalability, cloud-native, governance, data, and event processing. Hence, Generali’s architecture is powered by a cloud-native infrastructure leveraging Kubernetes and Apache Kafka:

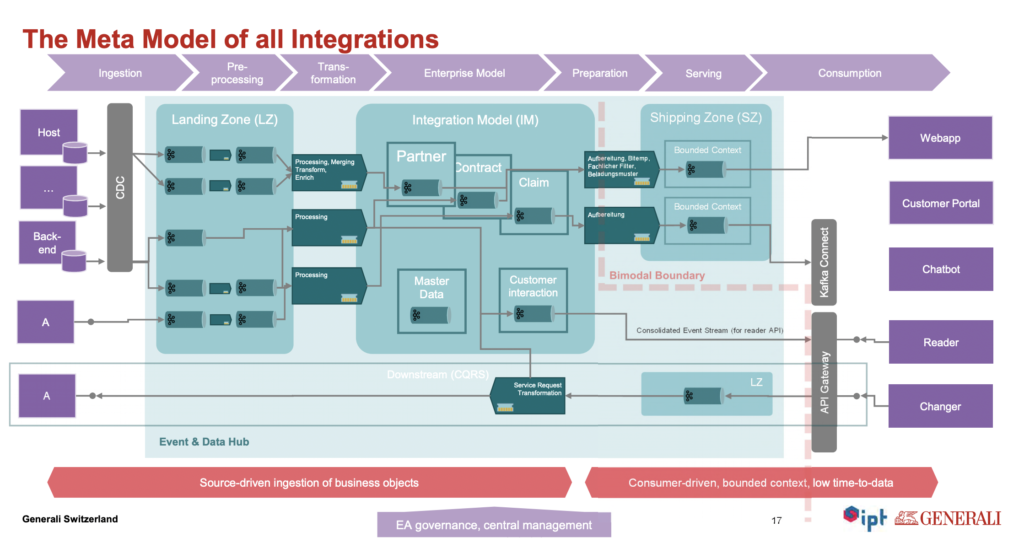

The following integration flow shows the scalable microservice architecture of Generali. The streaming ETL process includes data integration and data processing decoupled environments:

Centene – Integration and Data Processing at Scale in Real-Time

Centene is the largest Medicaid and Medicare Managed Care Provider in the US. Their mission statement is “transforming the health of the community, one person at a time”. The healthcare insurer acts as an intermediary for both government-sponsored and privately insured health care programs.

Centene’s key challenge is growth. Many mergers and acquisitions require a scalable and reliable data integration platform. Centene chose Kafka due to the following capabilities:

- highly scalable

- high autonomy and decoupling

- high availability and data resiliency

- real-time data transfer

- complex stream processing

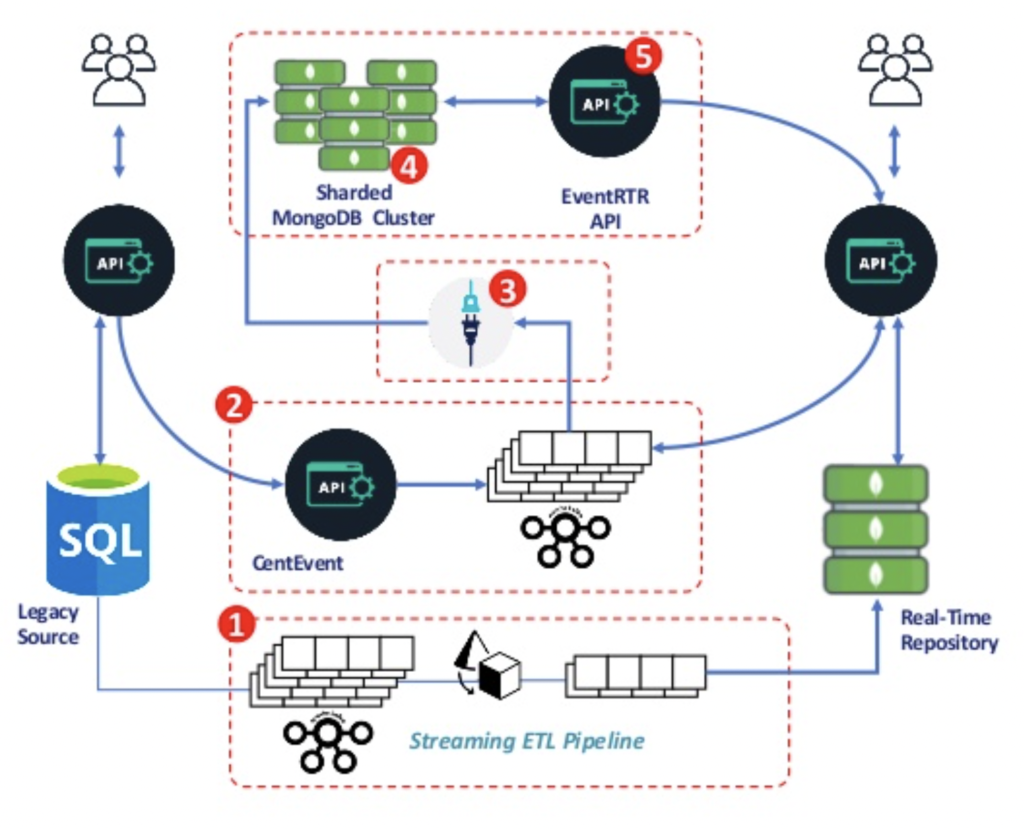

Centene’s architecture uses Kafka for data integration and orchestration. Legacy databases, MongoDB, and other applications and APIs leverage the data in real-time, batch, and request-response:

Swiss Mobiliar – Decoupling and Orchestration

Swiss Mobiliar (Schweizerische Mobiliar aka Die Mobiliar) is is the oldest private insurer in Switzerland.

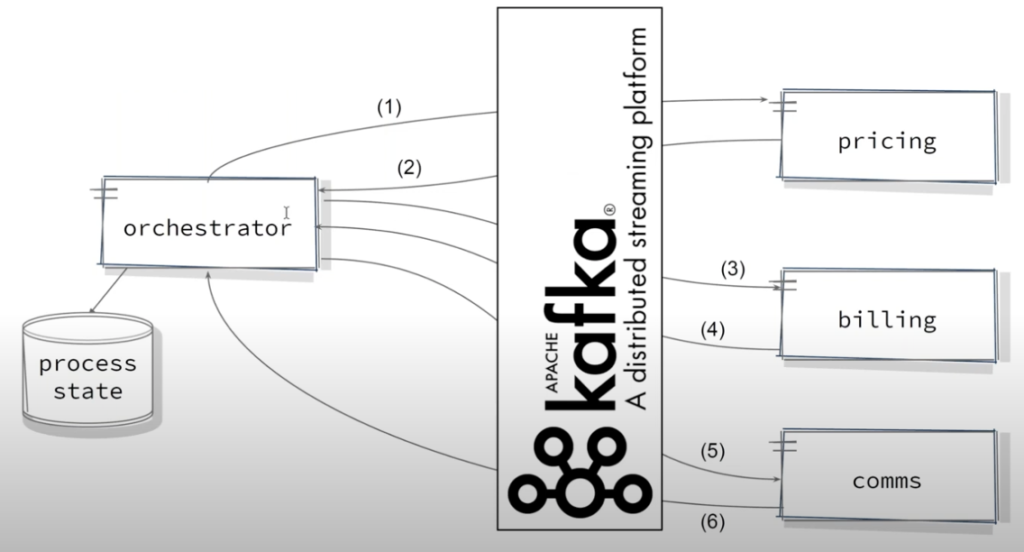

Event Streaming with Kafka supports various use cases at Swiss Mobiliar:

- Orchestrator application to track the state of a billing process

- Kafka as database and Kafka Streams for data processing

- Complex stateful aggregations across contracts and re-calculations

- Continuous monitoring in real-time

Their architecture shows the decoupling of applications and orchestration of events:

Also, check out the on-demand webinar with Mobiliar and Spoud to learn more about their Kafka usage.

Humana – Real-Time Integration and Analytics

Humana Inc. is a for-profit American health insurance. In 2020, the company ranked 52 on the Fortune 500 list.

Humana leverages Kafka for real-time integration and analytics. They built an interoperability platform to transition from an insurance company with elements of health to truly a health company with elements of insurance.

Here are the key characteristics of their Kafka-based platform:

- Consumer-centric

- Health plan agnostic

- Provider agnostic

- Cloud resilient and elastic

- Event-driven and real-time

Kafka integrates conversations between the users and the AI platform powered by IBM Watson. The platform captures conversational flows and processes them with natural language processing (NLP) – a deep learning concept.

Some benefits of the platform:

- Adoption of open standards

- Standardized integration partners

- In-workflow integration

- Event-driven for real-time patient interactions

- Highly scalable

freeyou – Stateful Streaming Analytics

freeyou is an insurtech for vehicle insurance. Streaming analytics for real-time price adjustments powered by Kafka and ksqlDB enable new business models. Their marketing slogan shows how they innovate and differentiate from traditional competitors:

“We make insurance simple. With our car insurance, we make sure that you stay mobile in everyday life – always and everywhere. You can take out the policy online in just a few minutes and manage it easily in your freeyou customer account. And if something should happen to your vehicle, we’ll take care of it quickly and easily.”

A key piece of freeyou’s strategy is a great user experience and automatic price adjustments in real-time in the backend. Obviously, Kafka and its stream processing ecosystem are a perfect fit here.

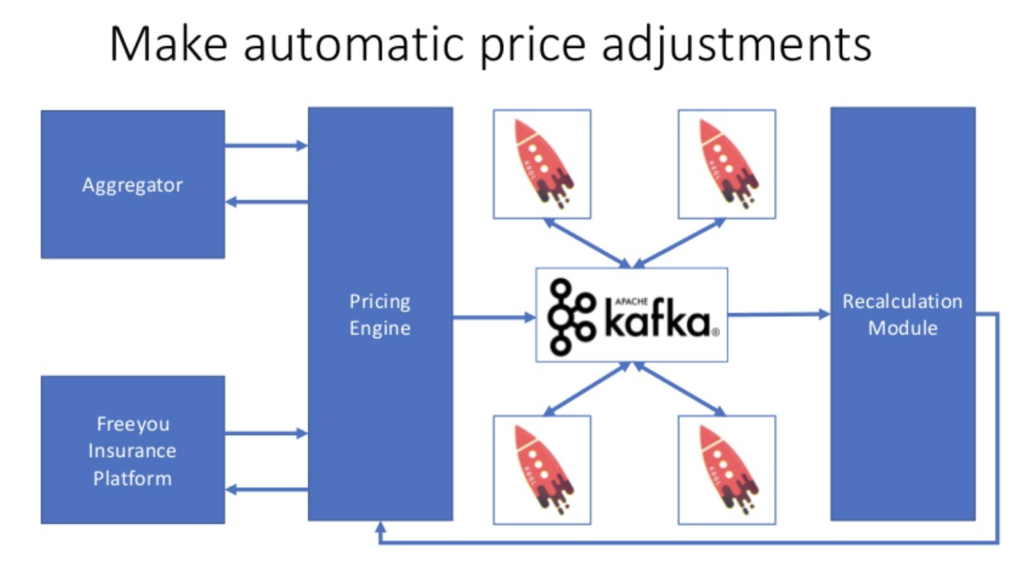

As discussed above, the huge advantage of an insurtech is the possibility to start from the greenfield. No surprise that freeyou’s architectures leverage cutting-edge design and technology. Kafka and KQL enable streaming analytics within the pricing engine, recalculation modules, and other applications:

Tesla – Carmaker and Utility Company, now also Car Insurer

Everybody knows: Tesla is an automotive company that sells cars, maintenance, and software upgrades.

More and more people know: Tesla is a utility company that sells energy infrastructure, solar panels, and smart home integration.

Almost nobody knows: Tesla is a car insurer for their car fleet (limited to a few regions in the early phase). That is the obvious next step if you already collect all the telemetry data from all your cars on the street.

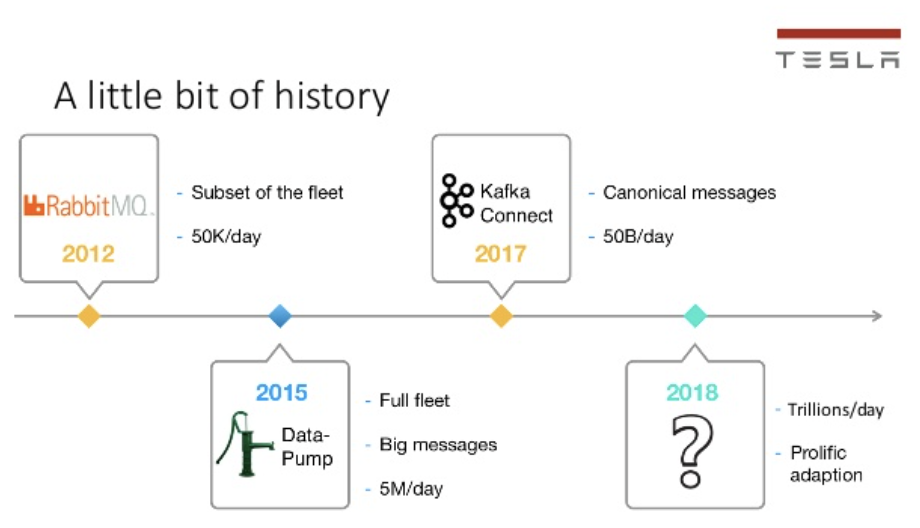

Tesla has built a Kafka-based data platform infrastructure “to support millions of devices and trillions of data points per day”. Tesla showed an interesting history and evolution of their Kafka usage at a Kafka Summit in 2019:

Tesla’s infrastructure heavily relies on Kafka.

There is no public information about Telsa using Kafka for their specific insurance applications. But at a minimum, the data collection from the cars and parts of the data processing relies on Kafka. Hence, I thought this is a great example to think about innovation in car insurance.

Tesla: “Much Better Feedback Loop”

Elon Musk made clear: “We have a much better feedback loop” instead of being statistical like other insurers. This is a key differentiator!

There is no doubt that many vehicle insurers will use fleet data to calculate insurance quotes and provide better insurance services. For sure, some traditional insurers will partner with vehicle manufacturers and fleet providers. This is similar to smart city development, where several enterprises partner to build new innovative use cases.

Connected vehicles and V2X (Vehicle to X) integrations are the starting point for many new business models. No surprise: Kafka plays a key role in the connected vehicles space (not just for Tesla).

Many benefits are created by a real-time integration pipeline:

- Shift from human experts to automation driven by big data and machine learning

- Real-time telematics data from all its drivers’ behavior and the performance of its vehicle technology (cameras, sensors, …)

- Better risk estimation of accidents and repair costs of vehicles

- Evaluation of risk reduction through new technologies (autopilot, stability control, anti-theft systems, bullet-resistant steel)

For these reasons, event streaming should be a strategic component of any next-generation insurance platform.

Slide Deck: Kafka in the Insurance Industry

The following slide deck covers the above discussion in more detail:

Click on the button to load the content from www.slideshare.net.

Kafka Changes How to Think Insurance

Apache Kafka changes how enterprises rethink data in the insurance industry. This includes brownfield data integration scenarios and greenfield cutting-edge applications. The success stories from traditional insurance companies such as Generali and insurtechs such as freeyou prove that Kafka is the right choice everywhere.

Kafka and its ecosystem enable data processing at scale in real-time. Real decoupling allows the integration between monolith legacy systems and modern cloud-native infrastructure. Kafka runs everywhere, from edge deployments to multi-cloud scenarios.

What are your experiences and plans for low latency use cases? What use case and architecture did you implement? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.