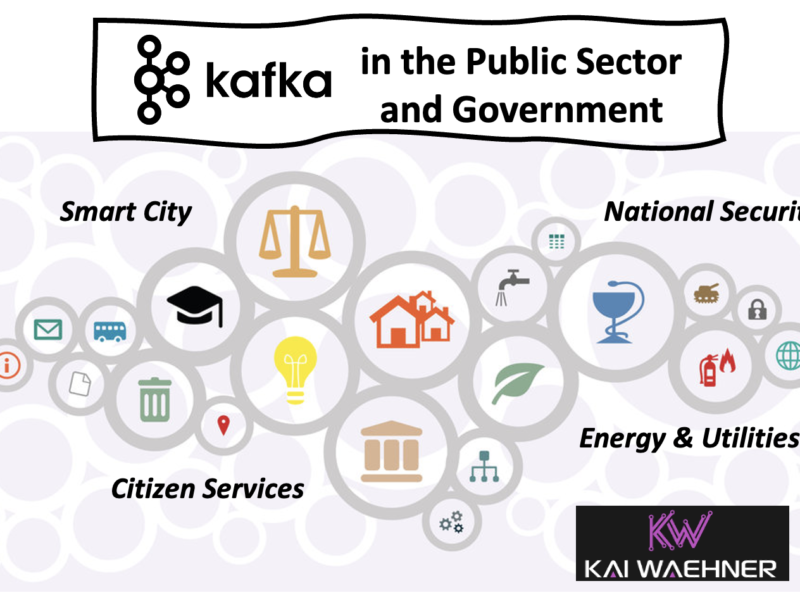

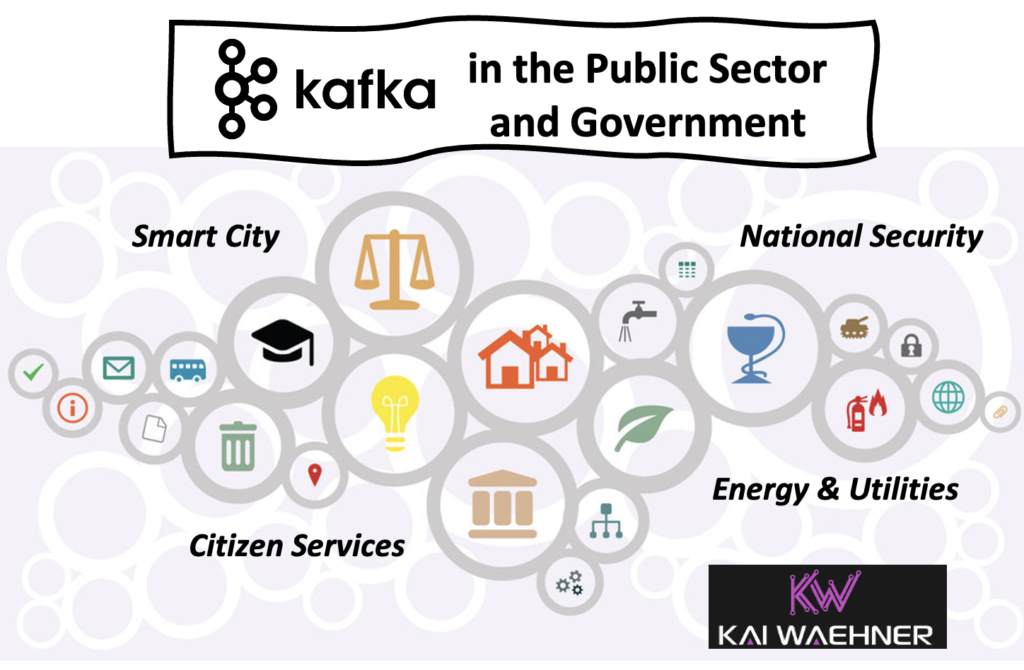

The public sector includes many different areas. Some groups, like the military, leverage cutting-edge technology. Others, like the public administration, are years or even decades behind. This blog series explores how the public sector leverages data in motion powered by Apache Kafka to add value through innovative new applications and the modernization of legacy IT infrastructures. Life is a stream of events. Therefore, the examples cover a broad spectrum of use cases across smart cities, citizen services, energy and utilities, and national security, deployed across edge, hybrid, and multi-cloud scenarios.

Blog series: Apache Kafka in the Public Sector and Government

This blog series explores why many governments and public infrastructure sectors leverage event streaming for various use cases. Learn about real-world deployments and different architectures for Kafka in the public sector:

- Life is a Stream of Events (THIS POST)

- Smart City

- Citizen Services

- Energy and Utilities

- National Security

Subscribe to my newsletter to get updates as soon as each post is published. I will also update the list above with direct links to the posts of this blog series once they are available.

As a side note: If you wonder why healthcare is not on the list above, it is because healthcare gets a blog series of its own. While the government can provide public health care through national healthcare systems, healthcare is part of the private sector in many other cases.

The Public Sector is a Broad Spectrum of Use Cases

Real-time Data Beats Slow Data in the Public Sector

I won’t do yet another long introduction about the added value of real-time data. Check out my blog about “Use Cases across Industries for Data in Motion powered by Apache Kafka” to understand the broad spectrum and benefits. The public sector is not different: Real-time data beats slow data in almost every use case! Here are a few examples:

But think about your use cases! How often can you say that getting data late (like in one hour or the following day) is better than getting data when it happens (now, in a few milliseconds or seconds)? Probably not very often.

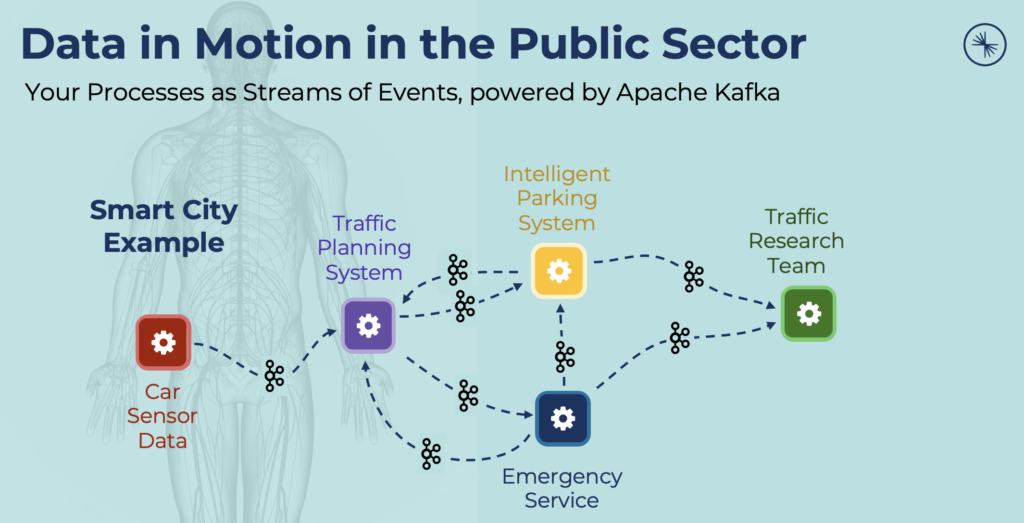

An important fact is that the added business value comes from correlating the events from different data sources. As an example, let’s look at the processes in a smart city:

The sensor data from a car is only valuable if an application correlates it with data from other vehicles in the traffic planning system. Intelligent parking only makes sense if it integrates with the overall city planning. Emergency services need to receive an alert immediately if a crash happens. All of that needs to happen in real time! It does not matter if the use case is about transactional workloads (usually smaller data sets) or analytical workloads (usually larger data sets).
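To make the idea of correlation more concrete, here is a minimal sketch in plain Python. It is not Kafka code; in a Kafka deployment, this kind of logic would typically live in a stream processing layer such as Kafka Streams or ksqlDB. The event fields, the `CongestionDetector` class, and all thresholds are hypothetical and chosen only for illustration. The sketch assumes that events arrive in timestamp order.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class VehicleEvent:
    vehicle_id: str
    road_segment: str  # hypothetical identifier for a stretch of road
    speed_kmh: float
    timestamp: float   # seconds, assumed to arrive in increasing order


class CongestionDetector:
    """Correlates events from many vehicles per road segment in a time window."""

    def __init__(self, window_seconds=60.0, min_vehicles=3, slow_kmh=20.0):
        self.window_seconds = window_seconds
        self.min_vehicles = min_vehicles
        self.slow_kmh = slow_kmh
        self._events = defaultdict(deque)  # road_segment -> recent events

    def process(self, event):
        """Handle one event as it arrives; return an alert string or None."""
        window = self._events[event.road_segment]
        window.append(event)
        # Evict events that are older than the time window.
        cutoff = event.timestamp - self.window_seconds
        while window and window[0].timestamp < cutoff:
            window.popleft()
        # Keep only the most recent reading per vehicle.
        latest = {e.vehicle_id: e for e in window}
        slow = [e for e in latest.values() if e.speed_kmh <= self.slow_kmh]
        if len(slow) >= self.min_vehicles:
            return f"congestion on {event.road_segment}: {len(slow)} slow vehicles"
        return None


detector = CongestionDetector()
stream = [
    VehicleEvent("car-1", "A9-km12", 15.0, 0.0),
    VehicleEvent("car-2", "A9-km12", 12.0, 5.0),
    VehicleEvent("car-3", "B2-km3", 80.0, 6.0),
    VehicleEvent("car-4", "A9-km12", 8.0, 10.0),
]
alerts = [a for a in (detector.process(e) for e in stream) if a]
print(alerts)
```

A single slow car says little. Only the combination of several slow cars on the same road segment within a short time span produces an alert, which is the point of correlating events instead of looking at each one in isolation.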

Open API and Partnerships are Mandatory

Governments can build great applications. At least in theory. In practice, they rely on external data from partners and 3rd party applications for many potential use cases:

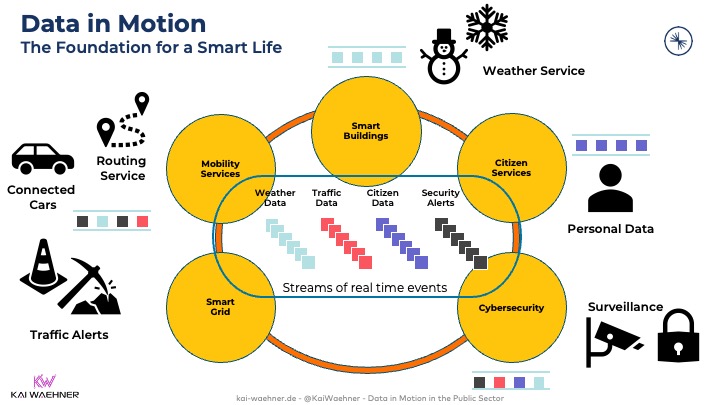

Governments and cities need to work with several other stakeholders, including carmakers, suppliers, telcos, mobility services, cloud providers, software providers, etc. Standards and open APIs are mandatory for successful cross-cutting projects. The foundation of such an enterprise architecture is an open, reliable, scalable platform that can process data in real time. Apache Kafka has become the de facto standard for event streaming.

Data Mesh for Sharing Events between Government and 3rd Party Applications and Services

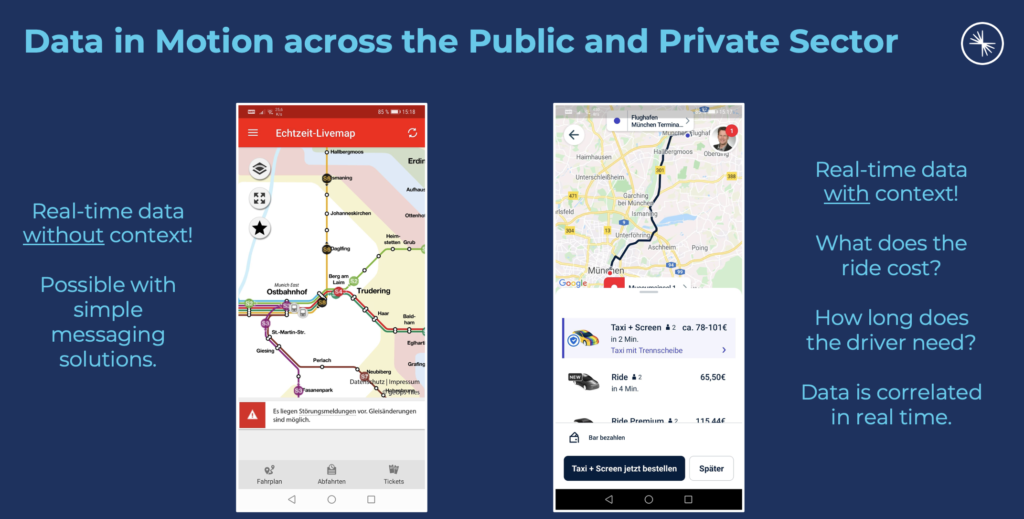

Transportation services are an example that shows the added value of integrating data across stakeholders and processing it in real time. A mobile app needs context. Think about hailing a taxi ride. It doesn’t help you much to only see the position of each taxi on the city map in real time. You want to know when the taxi will arrive to pick you up, the estimated cost, the estimated time of arrival at your destination, the car model that will pick you up, and so much more.

This use case – like many others – is only possible if you integrate and correlate the data from many different interfaces like a mapping service, all taxi drivers, all customers in a city, the weather service, backend analytics services, and much more:

The left side of the picture shows a dashboard built with a real-time message queue like RabbitMQ. The right side shows the real-time correlation of data from different sources with an event streaming platform like Apache Kafka.

I hope you agree on the added value of the event streaming platform. Just sending data from A to B in real-time is not enough. Only the data processing in real-time adds true value.
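The difference between forwarding and processing can be sketched in a few lines of plain Python. This is an illustration only, not Kafka code: the `RideMatcher` class, the flat kilometer coordinates, and the fixed average speed are assumptions made for the example, and inputs such as the weather service or a mapping service are left out. The sketch keeps the latest position per taxi as state and enriches each incoming ride request with the nearest taxi and a rough pickup estimate.

```python
import math
from dataclasses import dataclass


@dataclass
class TaxiPosition:
    taxi_id: str
    x_km: float  # simplified flat coordinates instead of latitude/longitude
    y_km: float


@dataclass
class RideRequest:
    customer_id: str
    x_km: float
    y_km: float


class RideMatcher:
    """Joins a stream of ride requests with the latest known taxi positions."""

    def __init__(self, avg_speed_kmh=30.0):
        self.avg_speed_kmh = avg_speed_kmh  # assumed constant city speed
        self._latest = {}  # taxi_id -> most recent TaxiPosition

    def on_taxi_position(self, pos):
        # Each new position overwrites the previous one for that taxi.
        self._latest[pos.taxi_id] = pos

    def on_ride_request(self, req):
        """Return an enriched offer, or None if no taxi is known yet."""
        if not self._latest:
            return None

        def distance_km(taxi):
            return math.hypot(taxi.x_km - req.x_km, taxi.y_km - req.y_km)

        nearest = min(self._latest.values(), key=distance_km)
        eta_min = distance_km(nearest) / self.avg_speed_kmh * 60
        return {
            "customer_id": req.customer_id,
            "taxi_id": nearest.taxi_id,
            "pickup_eta_min": round(eta_min, 1),
        }


matcher = RideMatcher()
matcher.on_taxi_position(TaxiPosition("taxi-1", 0.0, 0.0))
matcher.on_taxi_position(TaxiPosition("taxi-2", 3.0, 4.0))
matcher.on_taxi_position(TaxiPosition("taxi-1", 10.0, 0.0))  # taxi-1 drove away
offer = matcher.on_ride_request(RideRequest("customer-42", 0.0, 0.0))
print(offer)
```

A pure message queue would hand the raw taxi positions to the app. Here, the request is answered with context derived from several event streams at the moment it arrives.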

Data in Motion as Paradigm Shift in the Public Sector

Real-time data beats slow data, no matter if you think about cutting-edge use cases in national security or modernizing the IT infrastructure in the public administration. Event streaming is the foundation of this paradigm shift towards real-time data processing in the public sector. The upcoming posts of this blog series explore many different use cases and architectures. If you also want to learn more about Apache Kafka offerings on the market, check out my comparison of Apache Kafka products and cloud services.

How do you leverage event streaming in the public sector? What technologies and architectures do you use? What projects have you already worked on or are you planning? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.