The public sector includes many different areas. Some groups, like the military, leverage cutting-edge technology. Others, like public administration, are years or even decades behind. This blog series explores how the public sector leverages data in motion powered by Apache Kafka to add value through innovative new applications and the modernization of legacy IT infrastructures. This post is part 2: Use cases and architectures for a Smart City.

Blog series: Apache Kafka in the Public Sector and Government

This blog series explores why many governments and public infrastructure sectors leverage event streaming for various use cases. Learn about real-world deployments and different architectures for Kafka in the public sector:

- Life is a Stream of Events

- Smart City (THIS POST)

- Citizen Services

- Energy and Utilities

- National Security

Subscribe to my newsletter to get updates immediately after publication. I will also update the above list with direct links to the posts of this blog series once they are published.

As a side note: If you wonder why healthcare is not on the above list, it is a blog series of its own. While the government can provide public health care through national healthcare systems, it is part of the private sector in many other cases.

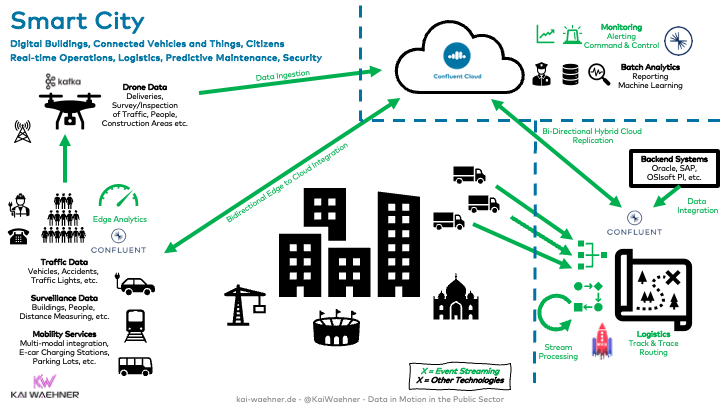

Real-time is Mandatory for a Smart City Everywhere

I wrote a lot about event streaming and Apache Kafka for smart city infrastructure and use cases. I won’t repeat myself. Check out the following posts: “Event Streaming with Kafka as Foundation for a Smart City” and “Apache Kafka and MQTT for the Last Mile IoT Integration in a Smart City”.

This post dives deeper into architectural questions and how collaboration with 3rd party services can look from the perspective of the government and the public administration of a smart city.

The Need for Real-time Data Processing Everywhere in a Smart City and how Kafka helps

A smart city is a very complex beast. I am glad that I only cover technology and not regulatory or political discussions. However, even the technology standpoint is not straightforward. A smart city needs to correlate data across data centers, devices, vehicles, and many other things. This scenario is an actual internet of things (IoT) and therefore includes plenty of different technologies, communication paradigms, and infrastructures.

Smart city projects require the integration of various 1st party and 3rd party services. Most use cases only work well if that data is correlated in real-time; think about traffic routing, emergency alerts, predictive monitoring and maintenance, mobility services such as ride-hailing, and other fancy smart city use cases. Without real-time data processing, the use case is either a bad user experience or not cost-efficient. Hence, Kafka is adopted more and more for these scenarios.
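To make "correlating data in real-time" more concrete, here is a minimal sketch in plain Python of a time-windowed correlation, similar in spirit to what a stream processing engine does with a windowed join. It is not Kafka code and not taken from any of the deployments discussed in this post. The event sources, the road segment key, and the five-second window are assumptions chosen purely for illustration.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    source: str    # hypothetical source name, e.g. "traffic-sensor" or "emergency-alert"
    segment: str   # hypothetical road segment id, used as the correlation key
    ts: float      # event time in seconds
    payload: str


def correlate(events, window_s=5.0):
    """Yield (earlier, later) pairs of events from different sources that share
    a road segment and are at most window_s seconds apart.

    Assumes the events arrive ordered by timestamp.
    """
    recent = defaultdict(deque)  # segment -> events still inside the window
    for ev in events:
        buf = recent[ev.segment]
        # Drop events that have fallen out of the time window.
        while buf and ev.ts - buf[0].ts > window_s:
            buf.popleft()
        # Pair the new event with buffered events from other sources.
        for other in buf:
            if other.source != ev.source:
                yield (other, ev)
        buf.append(ev)


if __name__ == "__main__":
    stream = [
        Event("traffic-sensor", "A1", 0.0, "speed=12"),
        Event("emergency-alert", "A1", 3.0, "accident"),
        Event("traffic-sensor", "A1", 20.0, "speed=45"),
    ]
    for earlier, later in correlate(stream):
        print(earlier.payload, "<->", later.payload)
```

The point of the sketch is that the correlation happens while events arrive, not hours later in a batch job. A production setup would delegate windowing, state, and fault tolerance to a stream processing framework instead of an in-memory buffer.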

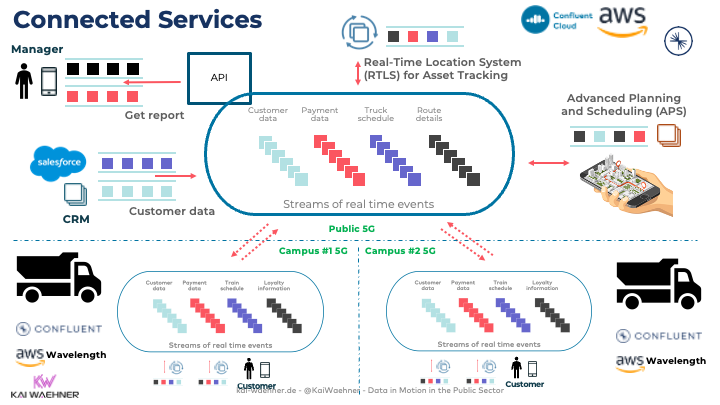

Low Latency and 5G Networks for (some) Data Streaming Use Cases

The term “real-time” needs to be defined. Processing data in a few seconds is good enough in most use cases and a significant game-changer compared to hourly, daily, or weekly batch processing.

Having said this, some use cases like location-based upselling in retail or condition monitoring in equipment and manufacturing require lower latency, meaning sub-second end-to-end data processing.

One example of leveraging 5G networks for low latency is a demo built by the AWS Wavelength team, Verizon, and Confluent.

Most real-world deployments use separation of concerns: Low-latency use cases run at the edge and everything else in the regular data center or public cloud region. Read the article “Low Latency Data Streaming with Apache Kafka and Cloud-Native 5G Infrastructure” for more details.
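The separation of concerns can be sketched as a simple placement rule. This is an illustration only: the one-second threshold stands in for "sub-second", and the use case names and latency budgets are hypothetical values, not measurements from a real deployment.

```python
from dataclasses import dataclass

# Assumption: use cases that need sub-second end-to-end latency run at the edge.
EDGE_LATENCY_THRESHOLD_MS = 1000


@dataclass(frozen=True)
class UseCase:
    name: str
    max_latency_ms: int  # hypothetical end-to-end latency budget


def placement(use_case, threshold_ms=EDGE_LATENCY_THRESHOLD_MS):
    """Return "edge" for sub-threshold latency budgets, otherwise "cloud"."""
    return "edge" if use_case.max_latency_ms < threshold_ms else "cloud"


if __name__ == "__main__":
    for uc in (
        UseCase("location-based upselling", 200),
        UseCase("condition monitoring", 500),
        UseCase("traffic reporting", 5000),
    ):
        print(uc.name, "->", placement(uc))
```

In practice, the decision also depends on cost, data sovereignty, and connectivity, so a single threshold is a deliberate simplification.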

At this point, it is important to remind everybody that Kafka (and any IT software) is not hard real-time and is not built for the OT world and embedded systems. Learn more in the article “Kafka is NOT hard real-time but soft real-time”. Also, (soft) real-time does not compete with batch processing and data warehouse/data lake architectures. As you can learn in “Serverless Kafka in a Cloud-native Data Lake Architecture”, it is complementary.

Collaboration between Government, City, and 3rd Party via Open API

Real-time data processing is crucial in implementing smart city use cases. Additionally, most smart city projects require collaboration between different teams, infrastructures, and 3rd party services.

Let’s take a look at three very different real-world event streaming deployments to see the broad spectrum of use cases and integration challenges:

- Ohio Department of Transportation’s government-owned event streaming platform

- Deutsche Bahn’s single source of truth for customer communication in real-time and 3rd party integration with the Google Maps API

- Free Now’s mobility service in the cloud for real-time data correlation in compliance with regional laws and independent vehicles/drivers.

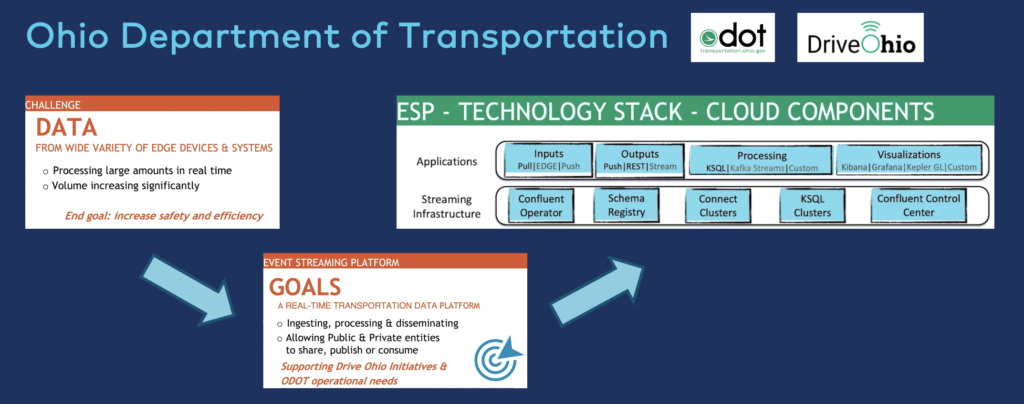

Ohio Department of Transportation (ODOT) – A Government-Owned Event Streaming Platform

Ohio Department of Transportation (ODOT) has an exciting initiative: DriveOhio. It aims to organize and accelerate smart vehicle and connected vehicle projects in the State of Ohio. DriveOhio offers to be the single point of contact for policymakers, agencies, researchers, and private companies to collaborate with one another on intelligent transportation efforts around the state.

ODOT presented its real-time transportation data platform at the last Kafka Summit Americas.

The whole Kafka ecosystem powers ODOT’s cloud-native Event Streaming Platform (ESP). The platform enables continuous data integration and stream processing for transactional and analytical workloads. The ESP runs on Kubernetes to provide an elastic, flexible, and scalable infrastructure for real-time data processing.

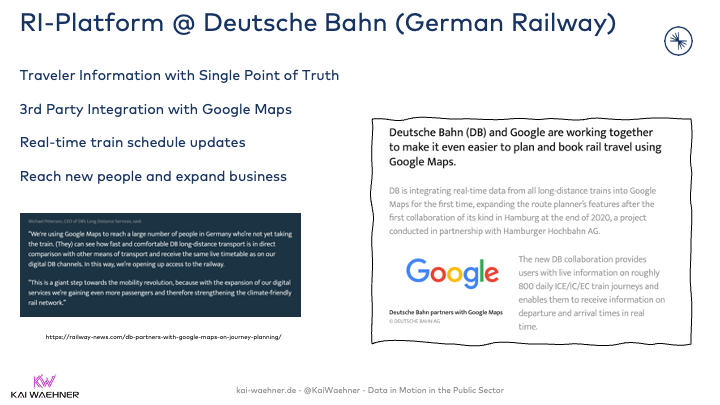

Deutsche Bahn – Single Source of Truth and Google Maps Integration in Real-time

Deutsche Bahn is a German railway company. It is a private joint-stock company (AG), with the Federal Republic of Germany being its single shareholder. I already talked about their real-time traveler information system in another blog post: “Mobility Services and Transportation powered by Apache Kafka”.

They leverage the Apache Kafka ecosystem powered by Confluent because it combines several characteristics that you would have to integrate with different technologies otherwise:

- Real-time messaging

- Data integration

- Data correlation

- Storage and caching

- Replication and high availability

- Elastic scalability

This example is excellent for this blog. It shows how an existing solution needs connectivity to other internal applications and 3rd party services to provide a better customer experience and expand the customer base.

Recently, Deutsche Bahn integrated its platform with Google Maps via Google’s Open API. In addition to a better customer experience, the railway company can reach many new end-users to expand its business. Railway-News has a good article about this integration. Here is my summary:

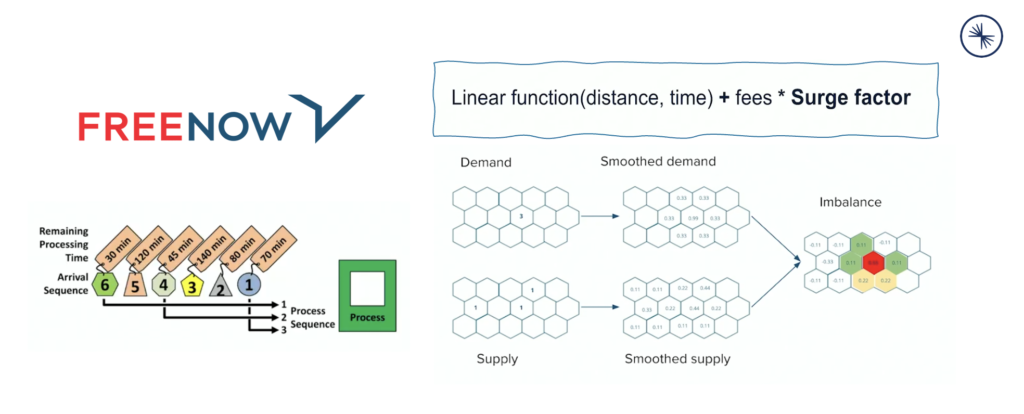

Free Now – Mobility Service in the Cloud Connected to Regional Laws and Vehicles

Free Now (formerly MyTaxi) is a mobility service. Their app uses mobile and GPS technology to match taxi drivers with passengers based on availability and proximity. Mobility services need to integrate with other 3rd party services for routing, payment, tax implications, and many other use cases.
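Matching by availability and proximity can be illustrated with a small sketch. This is not Free Now’s actual algorithm. It assumes straight-line (great-circle) distance computed with the haversine formula, and all driver ids and coordinates are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Optional

EARTH_RADIUS_KM = 6371.0


@dataclass(frozen=True)
class Driver:
    driver_id: str   # hypothetical identifier
    lat: float
    lon: float
    available: bool


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two GPS coordinates in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_available(drivers: Iterable[Driver], lat: float, lon: float) -> Optional[Driver]:
    """Return the closest available driver, or None if nobody is available."""
    candidates = [d for d in drivers if d.available]
    if not candidates:
        return None
    return min(candidates, key=lambda d: haversine_km(d.lat, d.lon, lat, lon))
```

A real service would work on a continuous stream of position updates and use road-network routing and estimated arrival times rather than straight-line distance, which is one reason the integration with 3rd party routing services matters.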

In their Kafka Summit talk, Free Now explains the added value of continuous stream processing for calculating context-specific dynamic pricing.

The public administration is always involved when a new mobility service is released to the public. While some cities build their own mobility services, the reality is that most governments provide the infrastructure together with the telco providers, and 3rd party vendors provide the mobility service. The specific relationship between the government, city, and mobility service provider differs across regions, countries, and continents.

Almost every mobility service uses Kafka as its backbone. Search for your favorite mobility service across the globe and add “Kafka” to the search. Chances are very high that you will find some excellent blog posts, conference talks, or at least job offers on the mobility service’s recruiting page. Here are just a few examples of companies that have posted great content about their Kafka usage: Uber, Lyft, Grab, Otonomo, Here Technologies, and many more.

Data in Motion with Kafka for a Connected and Innovative Smart City

Smart City is a vast topic. Many stakeholders are involved. Collaboration and Open APIs are critical for success. In most cases, governments work together with telco providers, infrastructure providers such as the cloud hyperscalers, and software vendors (including vendors of an event streaming platform like Kafka).

Most valuable and innovative smart city use cases require data processing in real-time. The use cases require data integration, storage, backpressure handling, and data correlation. Event streaming is the ideal technology for these use cases. Examples from the Ohio Department of Transportation, Deutsche Bahn and its Google Maps integration, and Free Now showed a few different angles to realize successful smart city projects.

How do you leverage event streaming in the public sector? Are you working on smart city projects? What technologies and architectures do you use? What projects have you already worked on or are you planning? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.