Decentralized finance with crypto and NFTs is a huge topic these days. It becomes a powerful combination with the coming metaverse platforms from social networks, cloud providers, gaming vendors, sports leagues, and fashion retailers. This blog post explores the relationship between crypto technologies like Ethereum, blockchain, NFTs, and modern enterprise architecture. I discuss how event streaming and Apache Kafka help build innovative and scalable real-time applications for a future metaverse. Let’s skip the buzz (and NFT bubble) and instead review practical examples and existing real-world deployments in the crypto and blockchain world powered by Kafka and its ecosystem.

What are crypto, NFT, DeFi, blockchain, smart contracts, metaverse?

I assume that most readers of this blog post have a basic understanding of the crypto market and event streaming with Apache Kafka. The target audience should be interested in the relationship between crypto technologies and a modern enterprise architecture powered by event streaming. Nevertheless, let’s explain each buzzword in a few words to have the same understanding:

- Blockchain: Foundation for cryptocurrencies and decentralized applications (dApp) powered by digital distributed ledgers with immutable records

- Smart contracts: dApps running on a blockchain like Ethereum

- DeFi (Decentralized Finance): Group of dApps to provide financial services without intermediaries

- Cryptocurrency (or crypto): Digital currency that works as a medium of exchange through a computer network that is not reliant on any central authority, such as a government or bank

- Crypto coin: Native coin of a blockchain to trade currency or store value (e.g., Bitcoin in the Bitcoin blockchain or Ether for the Ethereum platform)

- Crypto token: Similar to a coin, but uses another coin’s blockchain to provide digital assets – the functionality depends on the project (e.g., developers built plenty of tokens for the Ethereum smart contract platform)

- NFT (Non-fungible Token): Non-interchangeable, uniquely identifiable unit of data stored on a blockchain (that’s different from Bitcoin where you can “replace” one Bitcoin with another one), covering use cases such as identity, arts, gaming, collectibles, sports, media, etc.

- Metaverse: A network of 3D virtual worlds focused on social connections. This is not just about Meta (formerly Facebook). Many platforms and gaming vendors are creating their own metaverses these days. Hopefully, open protocols will enable interoperability between different metaverses, platforms, APIs, and AR/VR technologies. Crypto and NFTs will probably be critical factors in the metaverse.

Cryptocurrency and DeFi marketplaces and brokers are used for trading between fiat and crypto or between two cryptocurrencies. Other use cases include long-term investments and staking. The latter rewards you for locking your assets in a Proof-of-Stake consensus network, which most modern blockchains use instead of the resource-hungry Proof-of-Work used in Bitcoin. Some solutions focus on providing services on top of crypto (monitoring, analytics, etc.).

Don’t worry if you don’t know what all these terms mean. A scalable real-time data hub is required to integrate crypto and non-crypto technologies to build innovative solutions for crypto and metaverse.

What’s my relation to crypto, blockchain, and Kafka?

It might help to share my background with blockchain and cryptocurrencies before I explore the actual topic about its relation to event streaming and Apache Kafka.

I worked with blockchain technologies 5+ years ago at TIBCO. I implemented and deployed smart contracts with Solidity (the smart contract programming language for Ethereum). I integrated blockchains such as Hyperledger and Ethereum with ESB middleware. And yes, I bought some Bitcoin for ~500 dollars. Unfortunately, I sold them afterward for ~1000 dollars as I was curious about the technology, not the investment part. 🙂

Middleware in real-time is vital for integration

I gave talks at international conferences and published a few articles about blockchain. For instance, “Blockchain – The Next Big Thing for Middleware” at InfoQ in 2016. The article is still pretty accurate from a conceptual perspective. The technologies, solutions, and vendors have evolved, though.

I thought about joining a blockchain startup. Coincidentally, one company I was talking to was building a “next-generation blockchain platform for transactions in real-time at scale”, powered by Apache Kafka. No joke.

However, I joined Confluent in 2017. I thought that processing data in motion at any scale for transactional and analytics workloads is the more significant paradigm shift. “Why I Move (Back) to Open Source for Messaging, Integration and Stream Processing” describes my decision in 2017. I think I was right. 🙂 Today, most enterprises leverage Kafka as an alternative middleware for MQ, ETL, and ESB tools or implement cloud-native iPaaS with serverless Kafka.

Blockchain, crypto, and NFT are here to stay (for some use cases)

Blockchain and crypto are here to stay, but they are not needed for every problem. Blockchain is a niche technology, and within that niche, it is excellent. TL;DR: You only need a blockchain in untrusted environments. The famous example of supply chain management is valid. Cryptocurrencies and smart contracts are also here to stay, partly for investment and partly for building innovative new applications.

Today, I work with customers across the globe. Crypto marketplaces, blockchain monitoring infrastructure, and custodian banking platforms are built on Kafka for good reasons (scale, reliability, real-time). The key to success for most customers is integrating crypto and blockchain platforms with the rest of the IT infrastructure, like business applications, databases, and data lakes.

Trust is important, and trustworthy marketplaces (= banks?) are needed (for some use cases)

Privately, I own several cryptocurrencies. I am not a day-trader. My strategy is a long-term investment (but only a fraction of my total investment; only the money I am okay to lose 100%). I invest in several coins and platforms, including Bitcoin, Ethereum, Solana, Polkadot, Chainlink, and a few even more risky ones. I firmly believe that crypto and NFTs are a game-changer for some use cases like gaming and metaverse, but I also think paying hundreds of thousands of dollars for a digital ape is insane (and just an investment bubble).

I sold my Bitcoins in 2016 because of the missing trustworthy marketplaces. I don’t care too much about the decentralization of my long-term investment. I do not want to manage my own cold storage, write a long and complex code on paper, and put it into my safe. I want to have a secure, trustworthy custodian that takes over this burden.

For that reason, I use compliant German banks for crypto investments. If a coin is unavailable, I go to an international marketplace that feels trustworthy. For instance, the exchange of crypto.com and the NFT marketplace OpenSea recently did a great job letting their insurance pay for a hack and loss of customer coins and NFTs, respectively. That’s what I expect as a customer and why I am happy to pay a small fee for buying and selling cryptocurrencies or NFTs on such a platform.

“The False Promise of Web3” is a great read to understand why many crypto and blockchain discussions are not really about decentralization. As the article says, “the advertised decentralization of power out of the hands of a few has, in fact, been a re-centralization of power into the hands of fewer“. I am a firm believer in the metaverse, crypto, NFT, DeFi, and blockchain. However, I am fine if some use cases are centralized and provide regulation, compliance, security, and other guarantees.

With this long background story, let’s explore the current crypto and blockchain world and how this relates to event streaming and Apache Kafka.

Use cases for the metaverse and non-fungible tokens (NFT)

Let’s start with some history:

- 1995: Amazon was just an online shop for books. I bought my books in my local store.

- 2005: Netflix was just a DVD-by-mail service. Technology changed over time, but I still rented HD DVDs, Blu-rays, and other media the same way.

- 2022: I will not use Zuckerberg’s Metaverse. Never. It will fail like Second Life (which was launched in 2003). And I don’t get the noise around NFT. It is just a jpeg file that you can right-click and save for free.

Well, we are still in the early stages of cryptocurrencies, and in the very early stages of Metaverse, DeFi, and NFT use cases and business models. However, this is not just hype like the Dot-com bubble in the early 2000s. Tech companies have exciting business models. And software is eating every industry today. Profit margins are enormous, too.

Let’s explore a few use cases where Metaverse, DeFi, and NFTs make sense:

Billions of players and massive revenues in the gaming industry

The gaming industry is already bigger than all other media categories combined, and this is still just the beginning of a new era. Millions of new players join the gaming community every month across the globe.

Connectivity and cheap smartphones are reaching less wealthy countries. New business models like “play to earn” change how the next generation of gamers plays a game. More scalable and low-latency technologies like 5G enable new use cases. Blockchain and NFTs (Non-Fungible Tokens) are changing the monetization and collection market forever.

NFTs for identity, collections, ticketing, swaggering, and tangible things

Let’s forget that Justin Bieber recently purchased a Bored Ape Yacht Club (BAYC) NFT for $1.29 million. That’s insane and likely a bubble. However, many use cases make a lot of sense for NFTs, not just in the (future) virtual metaverse but also in the real world. Let’s look at a few examples:

- Sports and other professional events: Ticketing for controlled pricing, avoiding fraud, etc.

- Luxury goods: Transparency, traceability, and security against tampering; the handbag, the watch, the necklace: for such luxury goods, NFTs can be used as certificates of authenticity and ownership.

- Arts: NFTs can be created in such a way that royalty and license fees are paid out every time they are resold

- Carmakers: The first manufacturers link new cars with NFTs. Initially for marketing, but also to increase their resale value in the medium term. With the help of the blockchain, I can prove the number of previous owners of a car and provide reliable information about the repair and accident history.

- Tourism: Proof of attendance. Climbed Mount Everest? Walked at the Grand Canyon? Visited Hawaii for marriage? With a badge on the blockchain, this can be proven once and for all.

- Non-Profit: The technology is ideal for fundraising in charities – not only because it is transparent and decentralized. Every NFT can be donated or auctioned off for a good cause so that this new way of creating value generates new funds for the benefit of charitable projects. Vodafone has demonstrated this by auctioning the world’s first SMS as an NFT.

- Swaggering: Twitter already allows you to connect your account to your crypto wallet to set up an NFT as your profile picture. Steph Curry from the NBA team Golden State Warriors shows his 55 ETH (~ USD 180,000) Bored Ape Yacht Club NFT as his Twitter profile picture at the time of writing this blog post.

With this in mind, I can think about plenty of other significant use cases for NFTs.

Metaverse for new customer experiences

If I think about the global metaverse (not just the Zuckerberg one), I see plenty of use cases that even I could imagine using:

- How many (real) dollars would you pay if your (virtual) house were next to your favorite actor or musician in a virtual world and you could speak with their digital twin (trained by the actual human) every day?

- How many (real) dollars would you pay if this actor visited that house once a week so that virtual neighbors or lottery winners could talk to the real person via virtual reality?

- How many (real) dollars would you pay if you could bring your Fortnite shotgun (from a game from another gaming vendor) to this meeting and use it in the clay pigeon shooting competition with the neighbor?

- Give me a day, and I will add 1000 other items to this wish list…

I think you get the point. NFTs and metaverse make sense for many use cases. This statement is valid from the perspective of a great customer experience and to build innovative business models (with ridiculous profit margins).

So, finally, we come to the point of talking about the relation to event streaming and Apache Kafka.

Kafka inside a crypto platform

First, let’s understand how to qualify whether you need a truly distributed, decentralized ledger or blockchain. Most of the time, Kafka is sufficient.

Kafka is NOT a blockchain, but a distributed ledger for crypto

Kafka is not a blockchain, but a distributed commit log. Many concepts and foundations of Kafka are very similar to a blockchain. It provides many characteristics required for real-world “enterprise blockchain” projects:

- Real-Time

- High Throughput

- Decentralized database

- Distributed log of records

- Immutable log

- Replication

- High availability

- Decoupling of applications/clients

- Role-based access control to data

I explored this in more detail in my post “Apache Kafka and Blockchain – Comparison and a Kafka-native Implementation“.

Do you need a blockchain? Or just Kafka and crypto integration?

A blockchain increases the complexity significantly compared to traditional IT projects. Do you need a blockchain or distributed ledger (DLT) at all? Qualify out early and choose the right tool for the job!

Use Kafka for

- Enterprise infrastructure

- Real-time data hub for transactional and analytical workloads

- Open, scalable, real-time data integration and processing

- True decoupling between applications and databases with backpressure handling and data replayability

- Flexible architectures for many use cases

- Encrypted payloads

Use a real blockchain / DLTs like Hyperledger, Ethereum, Cardano, Solana, et al. for

- Deployment over various independent organizations (where participants verify the distributed ledger contents themselves)

- Specific use cases

- Server-side managed and controlled by multiple organizations

- Scenarios where the business value outweighs the added complexity and project risk

Use Kafka and Blockchain together to combine the benefits of both for

- Blockchain for secure communication over various independent organizations

- Reliable data processing at scale in real-time with Kafka as a side chain or off-chain from a blockchain

- Integration between blockchain / DLT technologies and the rest of the enterprise, including CRM, big data analytics, and any other custom business applications

The last list shows that Kafka and blockchain (or crypto) are complementary. For instance, many enterprises use Kafka as the data hub between crypto APIs and enterprise software.

It is pretty straightforward to build a metaverse without a blockchain (if you don’t want or need to offer true decentralization). Look at this augmented reality demo powered by Apache Kafka to understand how the metaverse is built with modern technologies.

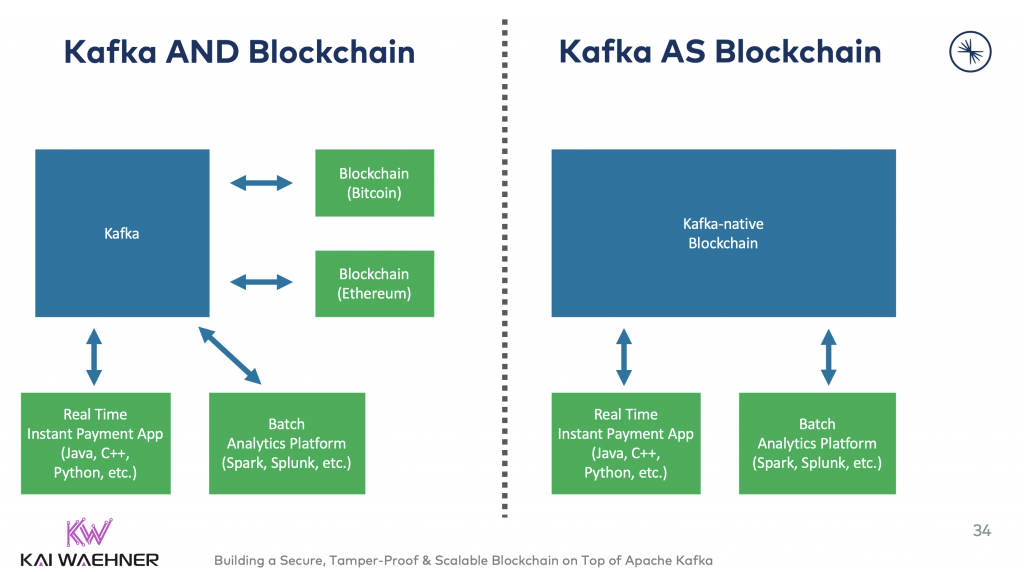

Kafka as a component of blockchain vs. Kafka as middleware for blockchain integration

Some powerful DLTs or blockchains are built on top of Kafka. See the example of R3’s Corda in the next section.

In some other use cases, Kafka is used to implement a side chain or off-chain platform because the original blockchain does not scale well enough (data stored on the blockchain itself is known as on-chain data). Bitcoin is not the only blockchain limited to single-digit (!) transactions per second. Most modern blockchain solutions cannot scale even close to the workloads Kafka processes in real-time.

Having said this, more interestingly, I see more and more companies using Kafka within their crypto trading platforms, market exchanges, and token trading marketplaces to integrate between the crypto and the traditional IT world.

Here are both options:

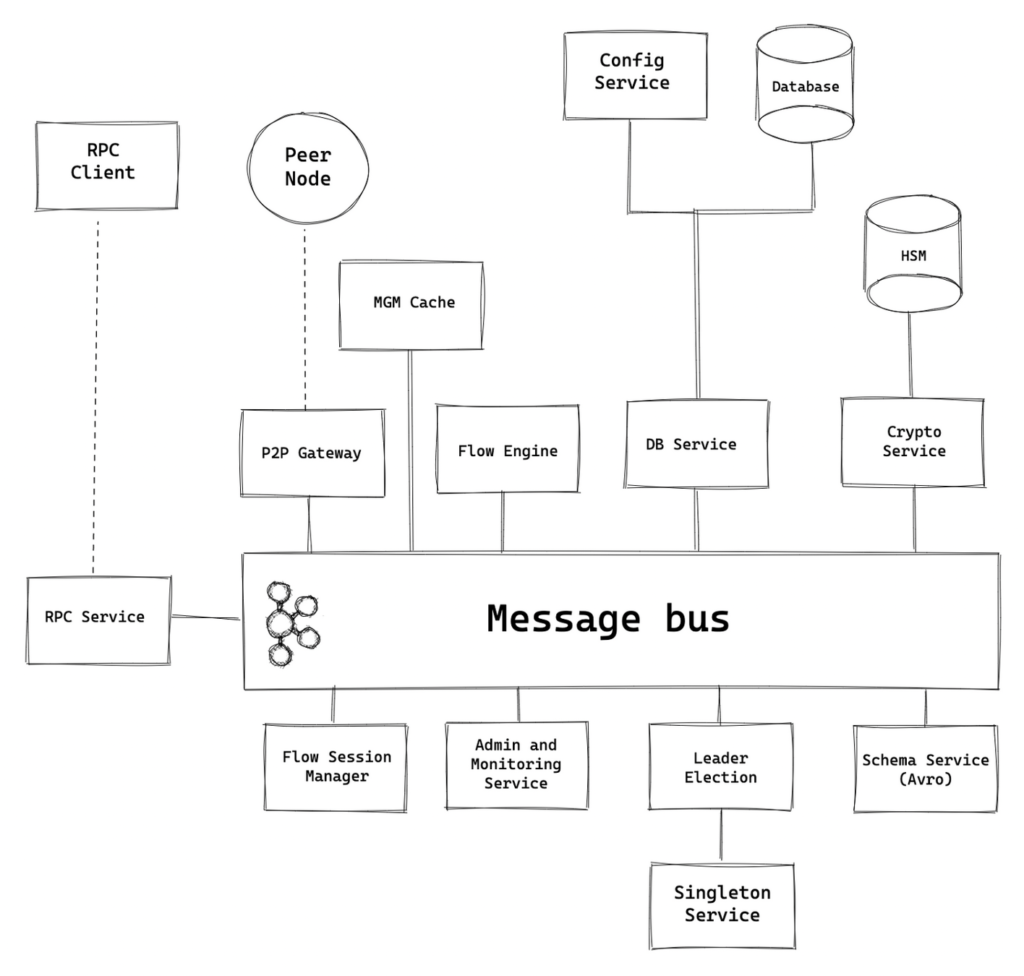

R3 Corda – A distributed ledger for banking and the finance industry powered by Kafka

R3’s Corda is a scalable, permissioned peer-to-peer (P2P) distributed ledger technology (DLT) platform. It enables the building of applications that foster and deliver digital trust between parties in regulated markets.

Corda is designed for the banking and financial industry. The primary focus is on financial services transactions. The architectural designs are simple when compared to true blockchains. Evaluate requirements such as time to market, flexibility, and use case (in)dependence to decide if Corda is sufficient or not.

Corda’s architectural history looks like many enterprise architectures: A messaging system (in this case, RabbitMQ) was introduced years ago to provide a real-time infrastructure. Unfortunately, the messaging solution does not scale as needed. It does not provide all essential features like data integration, data processing, or storage for true decoupling, backpressure handling, or replayability of events.

Therefore, Corda 5 replaces RabbitMQ and migrates to Kafka.

Here are a few reasons for the need to migrate R3’s Corda from RabbitMQ to Kafka:

- High availability for critical services

- A cost-effective way to scale (horizontally) to deal with bursty and high-volume throughputs

- Fully redundant, worker-based architecture

- True decoupling and backpressure handling to facilitate communication between the node services, including the process engine, database integration, crypto integration, RPC service (HTTP), monitoring, and others

- Compacted topics (logs) as the mechanism to store and retrieve the most recent states
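
The last bullet is easier to picture with a small example. Below is a minimal Python sketch of what a reader of a compacted topic effectively sees: the most recent value per key, with a `None` value acting as a tombstone that removes the key. This is an in-memory illustration of the compaction semantics only, not Corda's implementation and not the Kafka client API; the keys and state values are hypothetical.

```python
from typing import Iterable, Optional

# (key, value) pairs; a value of None acts as a tombstone for that key.
Record = tuple[str, Optional[str]]


def compact(log: Iterable[Record]) -> dict[str, str]:
    """Return the latest value per key, as seen after full compaction."""
    state: dict[str, str] = {}
    for key, value in log:
        if value is None:
            state.pop(key, None)  # tombstone: forget the key entirely
        else:
            state[key] = value    # newer record overrides the older one
    return state


# Hypothetical state updates; names are illustrative only.
log = [
    ("state-1", "UNCONSUMED"),
    ("state-2", "UNCONSUMED"),
    ("state-1", "CONSUMED"),
    ("state-3", "UNCONSUMED"),
    ("state-2", None),
]

latest = compact(log)
print(latest)  # {'state-1': 'CONSUMED', 'state-3': 'UNCONSUMED'}
```

A service that restarts can rebuild its current state by reading the compacted topic from the beginning, without replaying every historical update.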

Kafka in the crypto enterprise architecture

While Kafka is sometimes used within a DLT or blockchain, the more prevalent use cases leverage Kafka as the scalable real-time data hub between cryptocurrencies or blockchains and enterprise applications. Let’s explore a few use cases and real-world examples for that.

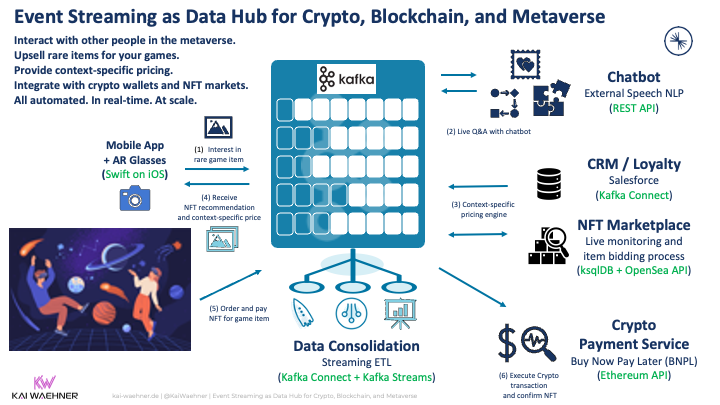

Example crypto architecture: Kafka as data hub in the metaverse

My recent post about live commerce powered by event streaming and Kafka transforming the retail metaverse shows how the retail and gaming industry connects virtual and physical things. The retail business process and customer communication happen in real-time, no matter if you want to sell clothes, a smartphone, or a blockchain-based NFT token for your collectible or video game.

The following architecture shows what an NFT sales play could look like by integrating and orchestrating the information flow between various crypto and non-crypto applications in real-time at any scale:

Kafka’s role as the data hub in crypto trading, marketplaces, and the metaverse

Let’s now explore the combination of Kafka with blockchains, cryptocurrencies, and decentralized finance (DeFi).

Once again, Kafka is neither the blockchain nor the cryptocurrency. The blockchain is the foundation of a cryptocurrency like Bitcoin or a platform providing smart contracts like Ethereum, where people build new decentralized applications (dApps) like NFTs for the gaming or art industry. Kafka is the data hub in between that connects these blockchains with oracles (= the non-blockchain apps = traditional IT infrastructure) like the CRM system, data lake, data warehouse, business applications, and so on.

Let’s look at an example and explore a few technical use cases where Kafka helps:

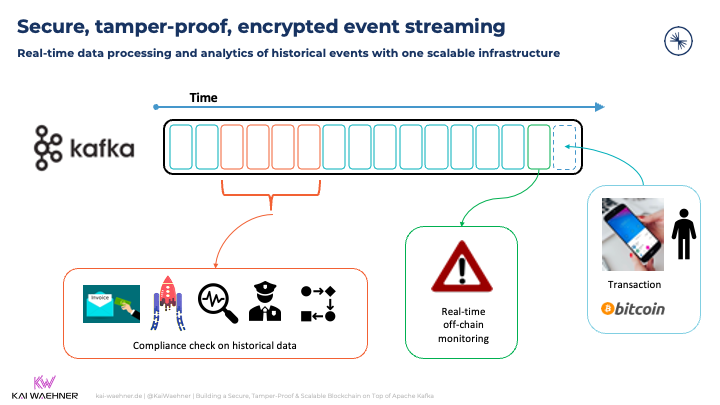

A Bitcoin transaction is executed from the mobile wallet. A real-time application monitors the data off-chain, correlates it, shows it in a dashboard, and sends push notifications. Another completely independent department replays historical events from the Kafka log in a batch process for a compliance check with dedicated analytics tools.
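
The scenario above relies on one property of the log: every consumer tracks its own read position, so a batch job can replay history without disturbing the real-time consumer. Here is a minimal in-memory Python sketch of that idea. `EventLog` and `Consumer` are hypothetical stand-ins for a single topic partition and a consumer, not the Kafka client API, and the transaction values are made up.

```python
class EventLog:
    """In-memory stand-in for one append-only topic partition."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> int:
        self._events.append(event)
        return len(self._events) - 1  # offset of the appended event

    def read(self, offset: int) -> list[dict]:
        return self._events[offset:]


class Consumer:
    """Keeps its own offset, so consumers never interfere with each other."""

    def __init__(self, log: EventLog, offset: int = 0) -> None:
        self.log = log
        self.offset = offset

    def poll(self) -> list[dict]:
        events = self.log.read(self.offset)
        self.offset += len(events)
        return events


log = EventLog()
log.append({"tx": "a1", "btc": 0.5})
log.append({"tx": "b2", "btc": 12.0})

# Real-time monitoring: flag large transfers as they arrive.
alerts = Consumer(log)
large = [e["tx"] for e in alerts.poll() if e["btc"] > 10]

log.append({"tx": "c3", "btc": 30.0})
large += [e["tx"] for e in alerts.poll() if e["btc"] > 10]

# Compliance batch job: replays everything from the first offset.
compliance = Consumer(log, offset=0)
replayed = [e["tx"] for e in compliance.poll()]

print(large)     # ['b2', 'c3']
print(replayed)  # ['a1', 'b2', 'c3']
```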

The Kafka ecosystem provides so many capabilities to use the data from blockchains and the crypto world with other data from traditional IT.

Holistic view in a data mesh across typical enterprise IT and blockchains

- Measuring the health of blockchain infrastructure, cryptocurrencies, and dApps to avoid downtime, secure the infrastructure, and make the blockchain data accessible.

- Kafka provides an agentless and scalable way to present that data to the parties involved and ensure that the relevant information is exposed to the right teams before a node is lost. This is relevant for innovative Web3 IoT projects like Helium or simpler closed distributed ledgers (DLT) like R3 Corda.

- Stream processing via Kafka Streams or ksqlDB to interpret the data to get meaningful information

- Processors that focus on helpful block metrics – with information related to average gas price (gas refers to the cost necessary to perform a transaction on the network), number of successful or failed transactions, and transaction fees

- Monitoring blockchain infrastructure and telemetry log events in real-time

- Regulatory monitoring and compliance

- Real-time cybersecurity (fraud, situational awareness, threat intelligence)
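
As an illustration of the block metrics mentioned in this list, the following Python sketch summarizes the transactions of a single block. It assumes a simplified, hypothetical `Tx` record with a gas price, a fee, and a success flag; a real processor would read these fields from the blockchain client's data model, and in a Kafka deployment the same aggregation would run continuously in a stream processor.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Tx:
    """Simplified, hypothetical transaction record."""
    gas_price_gwei: float
    fee_eth: float
    succeeded: bool


def block_metrics(txs: list[Tx]) -> dict[str, float]:
    """Summarize one block: average gas price, success/failure counts, total fees."""
    if not txs:
        return {"avg_gas_price_gwei": 0.0, "successful": 0, "failed": 0, "total_fees_eth": 0.0}
    ok = sum(1 for tx in txs if tx.succeeded)
    return {
        "avg_gas_price_gwei": mean(tx.gas_price_gwei for tx in txs),
        "successful": ok,
        "failed": len(txs) - ok,
        "total_fees_eth": sum(tx.fee_eth for tx in txs),
    }


# Made-up sample values for illustration.
block = [Tx(20.0, 0.25, True), Tx(30.0, 0.5, False), Tx(40.0, 0.25, True)]
metrics = block_metrics(block)
print(metrics)
```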

Continuous data integration at any scale in real-time

- Integration + true decoupling + backpressure handling + replayability of events

- Kafka as Oracle integration point (e.g. Chainlink -> Kafka -> Rest of the IT infrastructure)

- Kafka Connect connectors that incorporate blockchain client APIs like OpenEthereum (leveraging the same concept/architecture for all clients and blockchain protocols)

- Backpressure handling via throttling and nonce management over the Kafka backbone to stream transactions into the chain

- Processing multiple chains at the same time (e.g., monitoring and correlating transactions on Ethereum, Solana, and Cardano blockchains in parallel)
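
The nonce management and throttling bullet deserves a short example. On Ethereum, every transaction from an account carries a sequential nonce, so a gateway that streams transactions into the chain has to hand out nonces in order and hold back new transactions when too many are still unconfirmed. The Python sketch below shows one possible way to do that; `NonceManager` and its methods are hypothetical names, and the in-memory backlog stands in for what would be a Kafka topic in the architecture described here.

```python
from collections import defaultdict, deque


class NonceManager:
    """Assigns sequential nonces per sender and caps in-flight transactions."""

    def __init__(self, max_in_flight: int) -> None:
        self.max_in_flight = max_in_flight
        self.next_nonce = defaultdict(int)  # sender -> next nonce to assign
        self.in_flight = defaultdict(int)   # sender -> unconfirmed transactions
        self.backlog = deque()              # (sender, payload) waiting for a slot

    def submit(self, sender: str, payload: str) -> list[tuple[str, int, str]]:
        """Queue a transaction and return whatever can be sent right now."""
        self.backlog.append((sender, payload))
        return self._drain()

    def confirm(self, sender: str) -> list[tuple[str, int, str]]:
        """Mark one transaction as confirmed, which may release held-back ones."""
        if self.in_flight[sender] > 0:
            self.in_flight[sender] -= 1
        return self._drain()

    def _drain(self) -> list[tuple[str, int, str]]:
        sent, waiting = [], deque()
        while self.backlog:
            sender, payload = self.backlog.popleft()
            if self.in_flight[sender] < self.max_in_flight:
                nonce = self.next_nonce[sender]
                self.next_nonce[sender] += 1
                self.in_flight[sender] += 1
                sent.append((sender, nonce, payload))
            else:
                waiting.append((sender, payload))  # keep arrival order
        self.backlog = waiting
        return sent


mgr = NonceManager(max_in_flight=2)
sent = []
sent += mgr.submit("0xabc", "tx-1")
sent += mgr.submit("0xabc", "tx-2")
sent += mgr.submit("0xabc", "tx-3")  # held back: two are already in flight
held = len(mgr.backlog)
sent += mgr.confirm("0xabc")         # a slot frees up, tx-3 goes out
print(held, sent)
```

Because held-back transactions keep their arrival order, each sender's nonces are still assigned in the order the transactions were submitted.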

Continuous stateless or stateful data processing

- Stream processing with Kafka Streams or ksqlDB enables real-time data processing in DeFi / trading / NFT / marketplaces

- Most blockchain and crypto use cases require more than just data ingestion into a database or data lake – continuous stream processing adds enormous value to many problems

- Aggregate chain data (like Bitcoin or Ethereum), for instance, smart contract states or price feeds like the price of cryptos against USD

- Specialized ‘processors’ that take advantage of Kafka Streams’ utilities to perform aggregations, reductions, filtering, and other practical stateless or stateful operations
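
To give a feel for such a stateful aggregation, here is a small Python sketch that averages price ticks per symbol in fixed, non-overlapping (tumbling) time windows. It is a batch-style illustration of the concept, not Kafka Streams or ksqlDB code; the tick format `(timestamp_seconds, symbol, price)` and the sample prices are assumptions made for the example.

```python
from collections import defaultdict


def tumbling_avg(ticks, window_s: int) -> dict:
    """Average price per (symbol, window start) for fixed, non-overlapping windows."""
    sums = defaultdict(lambda: [0.0, 0])  # (symbol, window start) -> [total, count]
    for ts, symbol, price in ticks:
        window_start = ts - ts % window_s  # align the timestamp to its window
        acc = sums[(symbol, window_start)]
        acc[0] += price
        acc[1] += 1
    return {key: total / count for key, (total, count) in sums.items()}


# Made-up ticks: (timestamp in seconds, symbol, price in USD).
ticks = [
    (0, "BTC-USD", 40000.0),
    (30, "BTC-USD", 40100.0),
    (61, "BTC-USD", 40300.0),
    (10, "ETH-USD", 3000.0),
]

averages = tumbling_avg(ticks, window_s=60)
print(averages)
```

A stream processor keeps the same running totals in a state store and emits updated averages continuously instead of once at the end.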

Building new business models and solutions on top of crypto and blockchain infrastructure

- Custody for crypto investments in a fully integrated, end-to-end solution

- Deployment and management of smart contracts via a blockchain API and user interface

- Customer 360 and loyalty platforms, for instance, NFT integration into retail, gaming, social media for new customer experiences by sending a context-specific AirDrop to a wallet of the customer

- These are just a few examples – the list goes on and on…

The following section shows a few real-world examples. Some are relatively simple monitoring tools. Others are complex and powerful banking platforms.

Real-world examples of Kafka in the crypto and DeFi world

I have already explored how some blockchain and crypto solutions (like R3’s Corda) use event streaming with Kafka under the hood of their platform. In contrast, the following focuses on several public real-world solutions that leverage Kafka as the data hub between blockchains / crypto / NFT markets and new business applications:

- TokenAnalyst: Visualization of crypto markets

- EthVM: Blockchain explorer and analytics engine

- Kaleido: REST API Gateway for blockchain and smart contracts

- Nash: Cloud-native trading platform for cryptocurrencies

- Swisscom’s Custodigit: Crypto banking platform

- Chainlink: Oracle network for connecting smart contracts from blockchains to the real world

TokenAnalyst – Visualization of crypto markets

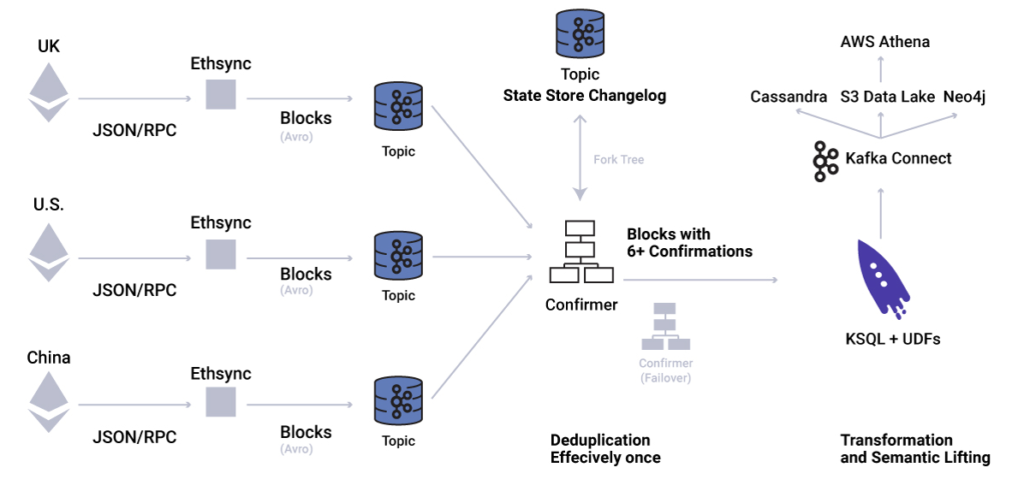

TokenAnalyst is an analytics tool to visualize and analyze the crypto market. TokenAnalyst is an excellent example that leverages the Kafka stack (Connect, Streams, ksqlDB, Schema Registry) to integrate blockchain data from Bitcoin and Ethereum with their analytics tools.

Kafka Connect helps with integrating databases and data lakes. The integration with Ethereum and other cryptocurrencies is implemented via a combination of the official crypto APIs and the Kafka producer client API.

Kafka Streams provides a stateful streaming application to keep invalid blocks out of downstream aggregate calculations. For example, TokenAnalyst developed a block confirmer component that resolves reorganization scenarios by temporarily holding blocks and only propagating them once a threshold number of confirmations is reached (that is, enough child blocks have been mined on top of that block).
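
The block confirmer idea can be sketched in a few lines of Python. This is not TokenAnalyst's code: it is a simplified illustration that assumes blocks of each fork arrive in order and that reorganizations are never deeper than the buffer. `Block` and `BlockConfirmer` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    height: int
    hash: str
    parent: str


class BlockConfirmer:
    """Buffers recent blocks and releases them only after enough confirmations."""

    def __init__(self, confirmations: int) -> None:
        self.confirmations = confirmations
        self.pending: list[Block] = []  # unconfirmed blocks, oldest first

    def on_block(self, block: Block) -> list[Block]:
        # Reorganization: the new block does not extend the buffered tip,
        # so drop buffered blocks until its parent is the tip (or nothing is left).
        while self.pending and self.pending[-1].hash != block.parent:
            self.pending.pop()
        self.pending.append(block)
        # Release blocks that now have enough children mined on top of them.
        ready = []
        while len(self.pending) > self.confirmations:
            ready.append(self.pending.pop(0))
        return ready


confirmer = BlockConfirmer(confirmations=2)
emitted = []
for blk in [
    Block(1, "a1", "genesis"),
    Block(2, "a2", "a1"),
    Block(2, "b2", "a1"),  # competing block at height 2 replaces a2
    Block(3, "b3", "b2"),
    Block(4, "b4", "b3"),
]:
    emitted += [b.hash for b in confirmer.on_block(blk)]

print(emitted)  # ['a1', 'b2']
```

The orphaned block `a2` never reaches downstream consumers, so aggregates computed from the emitted blocks do not need to be corrected after a reorganization.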

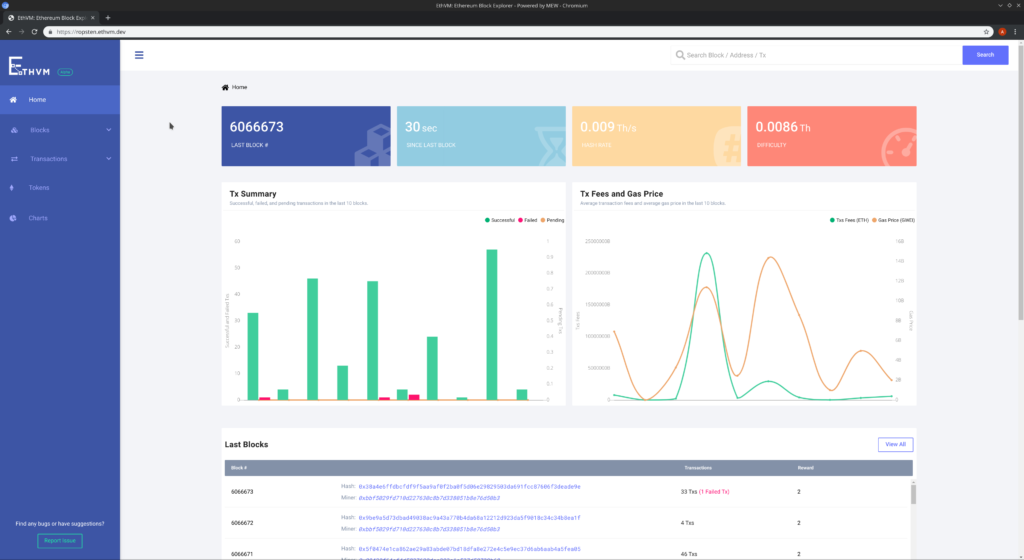

EthVM – A blockchain explorer and analytics engine

The beauty of public, decentralized blockchains like Bitcoin and Ethereum is transparency. The tamper-proof log enables Blockchain explorers to monitor and analyze all transactions.

EthVM is an open-source Ethereum blockchain data processing and analytics engine powered by Apache Kafka. The tool enables blockchain auditing and decision-making. EthVM verifies the execution of transactions and smart contracts, checks balances, and monitors gas prices. The infrastructure is built with Kafka Connect, Kafka Streams, and Schema Registry. A client-side visual block explorer is included, too.

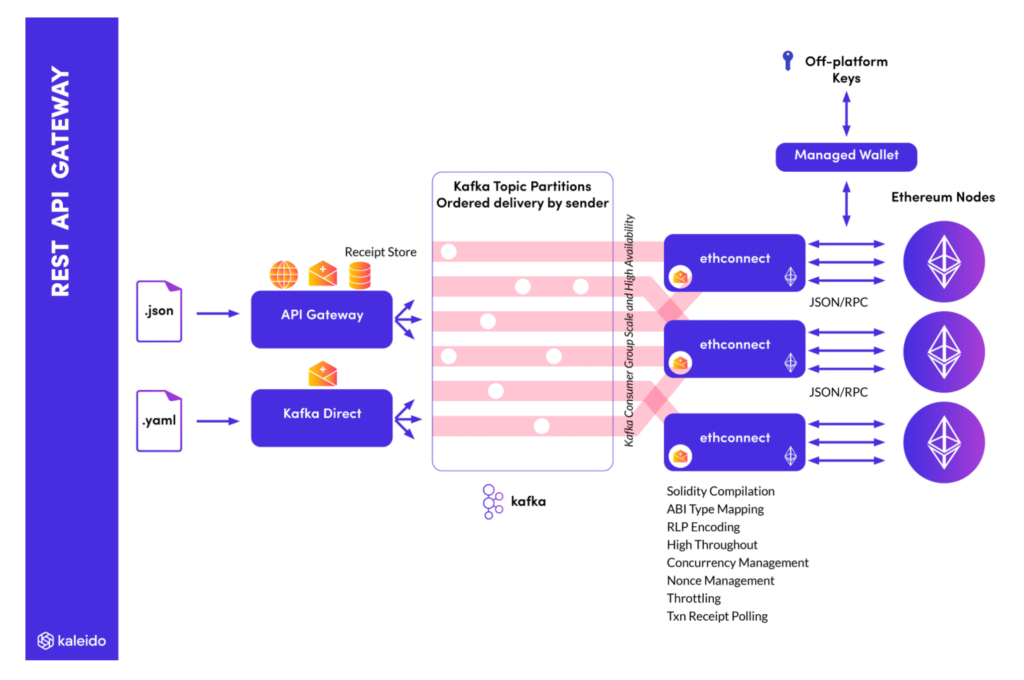

Kaleido – A Kafka-native Gateway for crypto and smart contracts

Kaleido provides enterprise-grade blockchain APIs to deploy and manage smart contracts, send Ethereum transactions, and query blockchain data. It hides the blockchain complexities of Ethereum transaction submission, thick Web3 client libraries, nonce management, RLP encoding, transaction signing, and smart contract management.

Kaleido offers REST APIs for on-chain logic and data. It is backed by a fully-managed high throughput Apache Kafka infrastructure.

One exciting aspect in the above architecture: Kaleido also provides a native direct Kafka connection from the client-side besides the API (= HTTP) gateway. This is a clear trend I discussed before already. Check out:

- API Management and Kafka – Friends, Enemies, or Frenemies?

- A Streaming Data Exchange for the Data Mesh powered by Kafka and Cluster Linking

- Streaming Machine Learning with Kafka-native Model Server

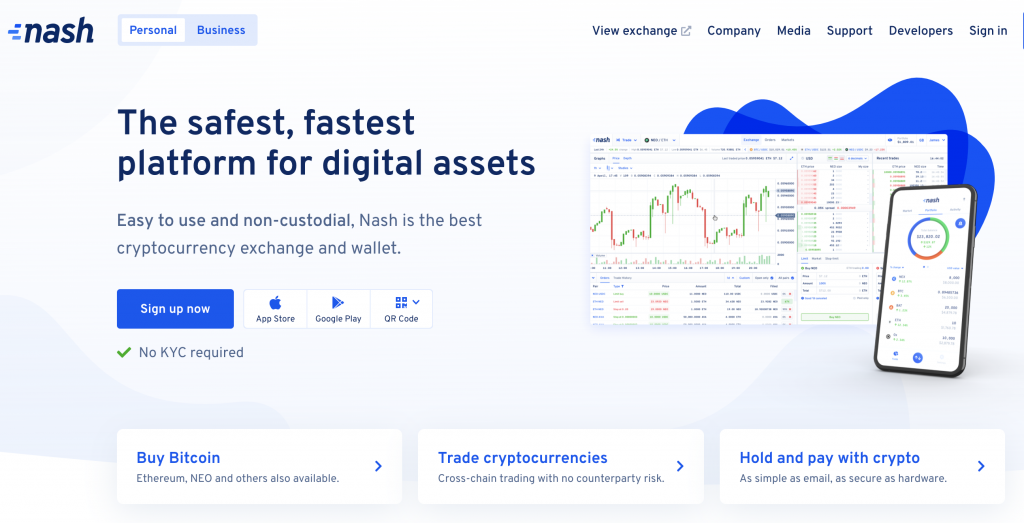

Nash – Cloud-native trading platform for cryptocurrencies

Nash is an excellent example of a modern trading platform for cryptocurrencies using blockchain under the hood. The heart of Nash’s platform leverages Apache Kafka. Their community page says:

“Nash is using Confluent Cloud, google cloud platform to deliver and manage its services. Kubernetes and apache Kafka technologies will help it scale faster, maintain top-notch records, give real-time services which are even hard to imagine today.”

Nash provides the speed and convenience of traditional exchanges and the security of non-custodial approaches. Customers can invest in, make payments, and trade Bitcoin, Ethereum, NEO, and other digital assets. The exchange is the first of its kind, offering non-custodial cross-chain trading with the full power of a real order book. The distributed, immutable commit log of Kafka enables deterministic replayability in its exact order.

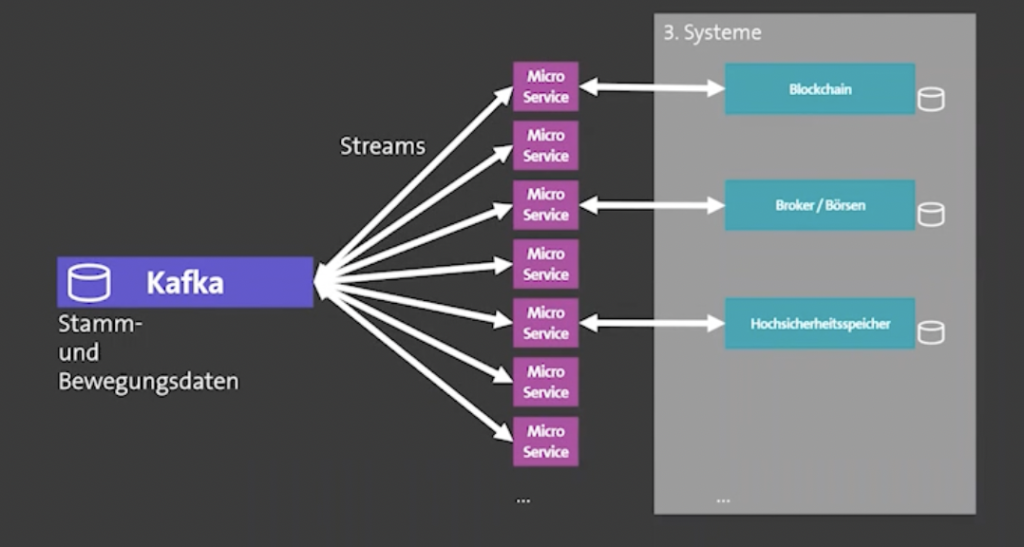

Swisscom’s Custodigit – A crypto banking platform powered by Kafka Streams

Custodigit is a modern banking platform for digital assets and cryptocurrencies. It provides crucial features and guarantees for seriously regulated crypto investments:

- Secure storage of wallets

- Sending and receiving on the blockchain

- Trading via brokers and exchanges

- Regulated environment (a key aspect and no surprise as this product is coming from Switzerland – a very regulated market)

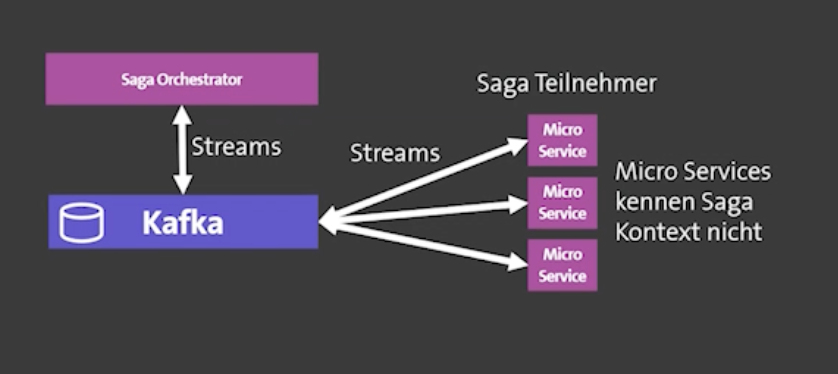

Kafka is the central nervous system of Custodigit’s microservice architecture and stateful Kafka Streams applications. Use cases include workflow orchestration with the “distributed saga” design pattern for the choreography between microservices. Kafka Streams was selected because of:

- lean, decoupled microservices

- metadata management in Kafka

- unified data structure across microservices

- transaction API (aka exactly-once semantics)

- scalability and reliability

- real-time processing at scale

- a higher-level domain-specific language for stream processing

- long-running stateful processes

Architecture diagrams are only available in German, unfortunately. But I think you get the points:

- Custodigit microservice architecture – some microservices integrate with brokers and stock markets, others with blockchain and crypto:

- Custodigit Saga pattern for stateful orchestration – stateless business logic is truly decoupled, while the saga orchestrator keeps the state for choreography between the other services:
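
For readers unfamiliar with the saga pattern, the following Python sketch shows the core idea under simplifying assumptions: an orchestrator runs steps in order, remembers which ones completed, and calls their compensating actions in reverse order when a later step fails. It is not Custodigit's implementation. The step names are hypothetical, and in a Kafka Streams application the orchestrator's progress would live in a state store rather than in a local list.

```python
from typing import Callable

# (name, action, compensation); the steps themselves stay stateless.
Step = tuple[str, Callable[[dict], None], Callable[[dict], None]]


def run_saga(steps: list[Step], ctx: dict) -> bool:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    done: list[Step] = []  # orchestrator state: what has completed so far
    for step in steps:
        name, action, _ = step
        try:
            action(ctx)
            ctx["log"].append(f"done:{name}")
            done.append(step)
        except Exception:
            ctx["log"].append(f"failed:{name}")
            for undo_name, _, compensate in reversed(done):
                compensate(ctx)
                ctx["log"].append(f"undone:{undo_name}")
            return False
    return True


def reserve(ctx: dict) -> None:
    ctx["reserved"] = True


def release(ctx: dict) -> None:
    ctx["reserved"] = False


def place_order(ctx: dict) -> None:
    raise RuntimeError("broker rejected order")  # simulated failure


def cancel_order(ctx: dict) -> None:
    pass  # nothing to cancel in this example


ctx = {"log": [], "reserved": False}
ok = run_saga(
    [("reserve_funds", reserve, release), ("place_order", place_order, cancel_order)],
    ctx,
)
print(ok, ctx["log"])
# False ['done:reserve_funds', 'failed:place_order', 'undone:reserve_funds']
```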

Chainlink – Oracle network for connecting smart contracts from blockchains to the real world

Chainlink is the industry standard oracle network for connecting smart contracts to the real world. “With Chainlink, developers can build hybrid smart contracts that combine on-chain code with an extensive collection of secure off-chain services powered by Decentralized Oracle Networks. Managed by a global, decentralized community of hundreds of thousands of people, Chainlink introduces a fairer contract model. Its network currently secures billions of dollars in value for smart contracts across decentralized finance (DeFi), insurance, and gaming ecosystems, among others. The full vision of the Chainlink Network can be found in the Chainlink 2.0 white paper.”

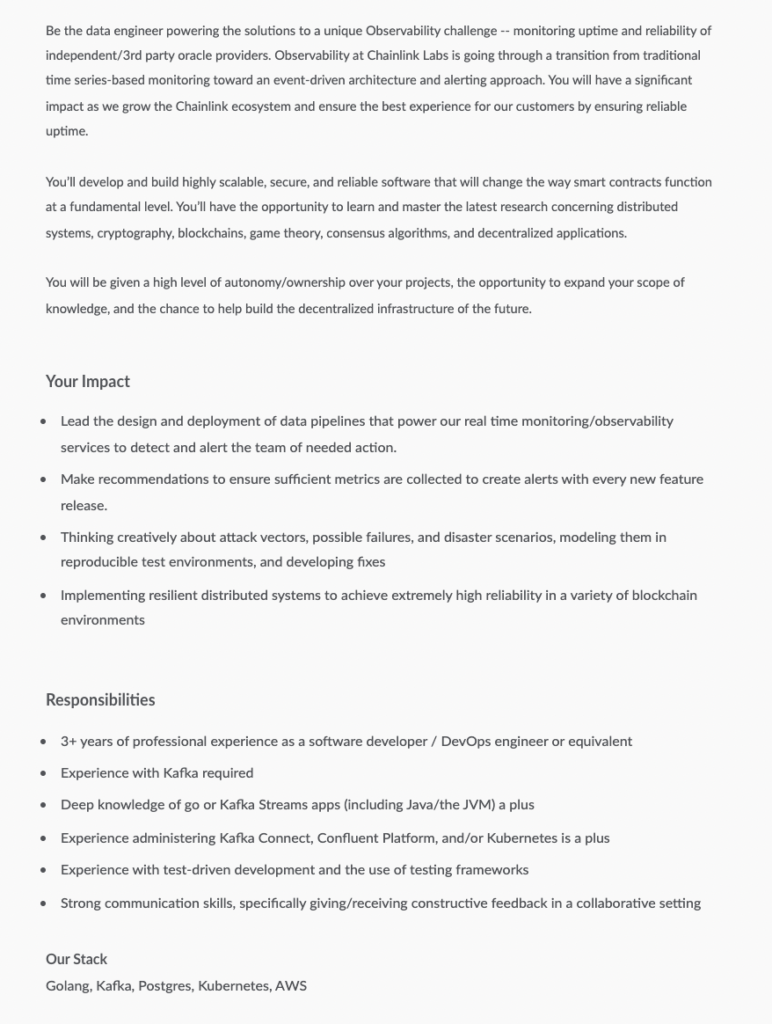

Unfortunately, I could not find any public blog posts or conference talks about Chainlink’s architecture. Hence, I can only let Chainlink’s job posting speak about their impressive Kafka usage for real-time observability at scale in a critical, transactional financial environment.

Chainlink is transitioning from traditional time series-based monitoring toward an event-driven architecture and alerting approach.

This job posting sounds very interesting, doesn’t it? And it is a colossal task to solve cybersecurity challenges in this industry. If you are looking for a blockchain-based Kafka role, this might be for you.

Serverless Kafka enables focusing on the business logic in your crypto data hub infrastructure!

This article explored practical use cases for crypto marketplaces and the coming metaverse. Many enterprise architectures already leverage Apache Kafka and its ecosystem to build a scalable real-time data hub for crypto and non-crypto technologies.

This combination is the foundation for a metaverse ecosystem and innovative new applications, customer experiences, and business models. Don’t fear the metaverse. This discussion is not just about Meta (formerly Facebook), but about interoperability between many ecosystems to provide fantastic new user experiences (of course, with its drawbacks and risks, too).

A clear trend across all these fancy topics and buzzwords is the usage of serverless cloud offerings. This way, project teams can spend their time on the business logic instead of operating the infrastructure. Check out my articles about “serverless Kafka and its relation to cloud-native data lakes and lake houses” and my “comparison of Kafka offerings on the market” to learn more.

How do you use Apache Kafka with cryptocurrencies, blockchain, or DeFi applications? Do you deploy in the public cloud and leverage a serverless Kafka SaaS offering? What other technologies do you combine with Kafka? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.