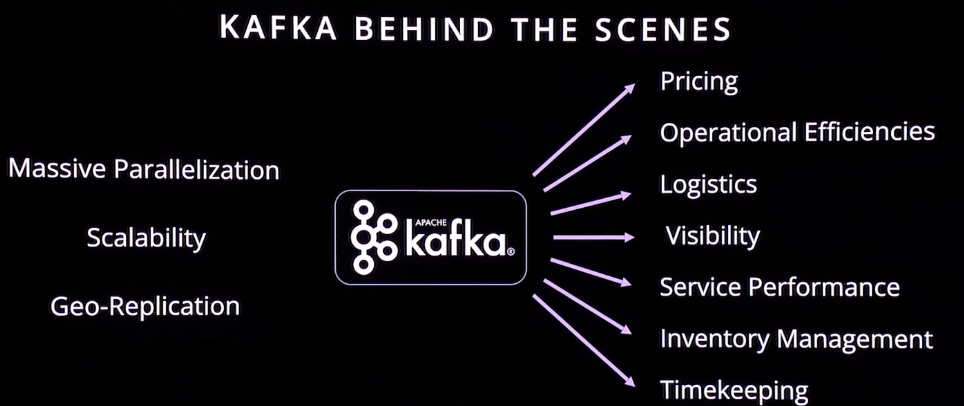

Logistics, shipping, and transportation require real-time information to build efficient applications and innovative business models. Data streaming enables correlated decisions, recommendations, and alerts. Kafka is everywhere across the industry. This blog post explores several real-world case studies from companies such as USPS, Swiss Post, Austrian Post, DHL, and Hermes. Use cases include cloud-native middleware modernization, track and trace, and predictive routing and ETA planning.

Logistics and transportation

Logistics is the detailed organization and implementation of a complex operation. It manages the flow of things between the point of origin and the point of consumption to meet the requirements of customers or corporations. The resources managed in logistics may include tangible goods such as materials, equipment, and supplies, as well as food and other consumable items.

Logistics management is the part of supply chain management (SCM) and supply chain engineering that plans, implements, and controls the efficient, effective forward and reverse flow and storage of goods, services, and related information between the point of origin and the point of consumption to meet customers’ requirements.

The evolution of logistics technology

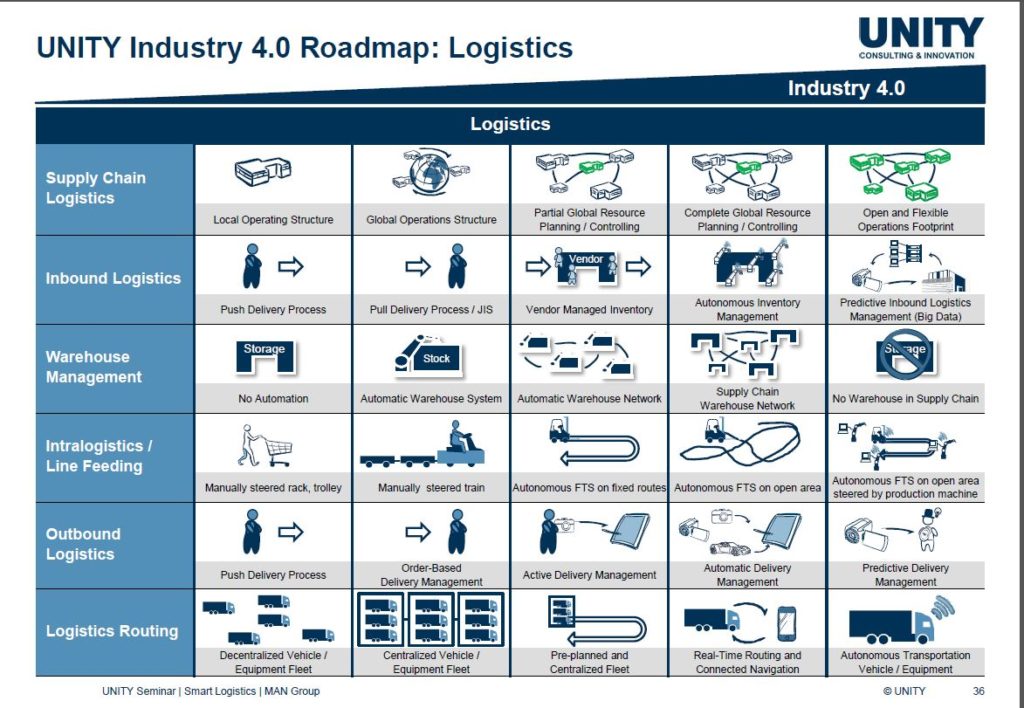

Unity created an excellent overview of the future of logistics and transportation:

The diagram shows the critical technical characteristics for innovation: Digitalization, automation, connectivity, and real-time data are must-haves for optimizing logistics and transportation infrastructure.

Data streaming with Apache Kafka in the shipping industry

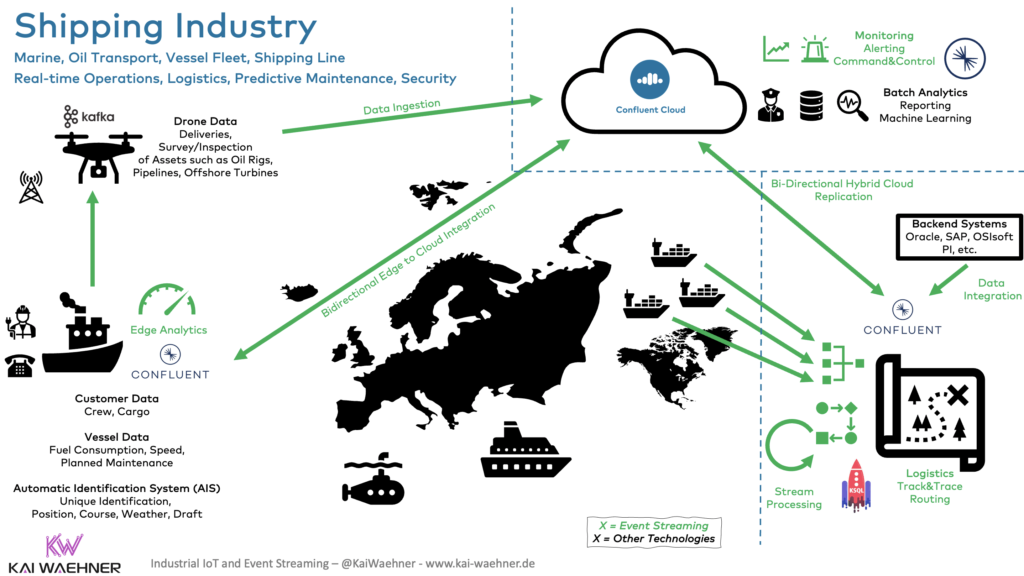

Real-time data is relevant everywhere in logistics and transportation. Apache Kafka is the de facto standard for real-time data streaming. Kafka works well almost everywhere. Here is an example of enterprise architecture for transporting goods across the globe:

Most companies have a cloud-first strategy. Kafka in the cloud as a fully-managed service enables project teams to focus on building applications and scale elastically depending on the needs. Use cases like big data analytics or a real-time supply chain control tower often run in the cloud today.

On-premise Kafka deployments connect to existing IT infrastructure such as Oracle databases, SAP ERP systems, and other monolithic, often decades-old technology.

The edge either directly connects to the data center or cloud (if the network connection is relatively stable), or operates its own mission-critical edge Kafka cluster (e.g., on a ship) or a single broker (e.g., embedded into a drone) in a semi-connected or air-gapped environment.

Case studies for real-time transportation, shipping, and logistics with Apache Kafka

The following case studies show several real-world deployments of real-time data streaming with the broader Kafka ecosystem in the logistics, shipping, and transportation industry.

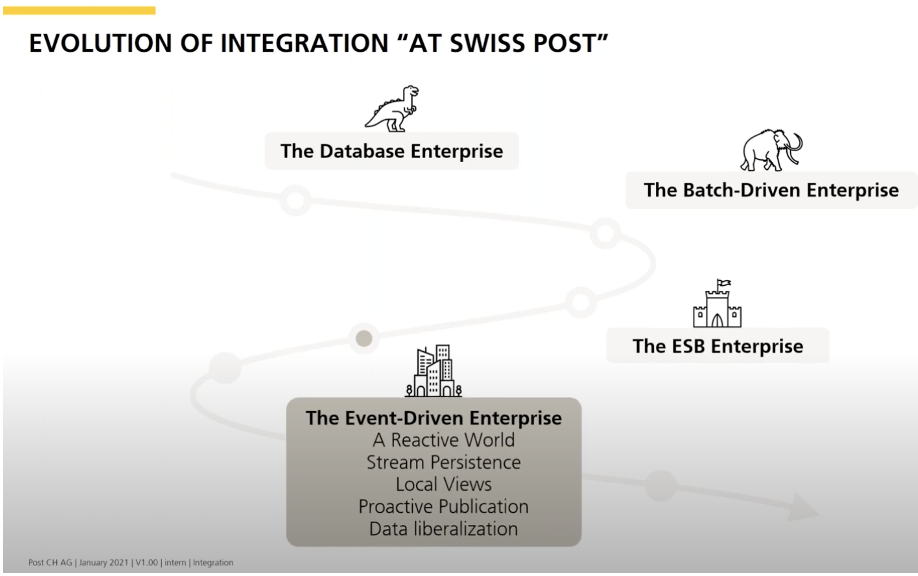

Swiss Post: Decentralized integration using data as an asset across the shipping pipeline

Swiss Post is the national postal service of Switzerland. Data streaming is a fundamental shift in their enterprise architecture. Swiss Post had several motivations:

- Data as an asset: Management and accessibility of strategic company data

- New requirements regarding the amount of event throughput (new parcel center, IoT, etc.)

- Integration is not dependent on a central development team (self-service)

- Empowering organization and integration skill development

- Growing demand for real-time event processing (Event-driven architecture)

- Providing a flexible integration technology stack (no one fits all)

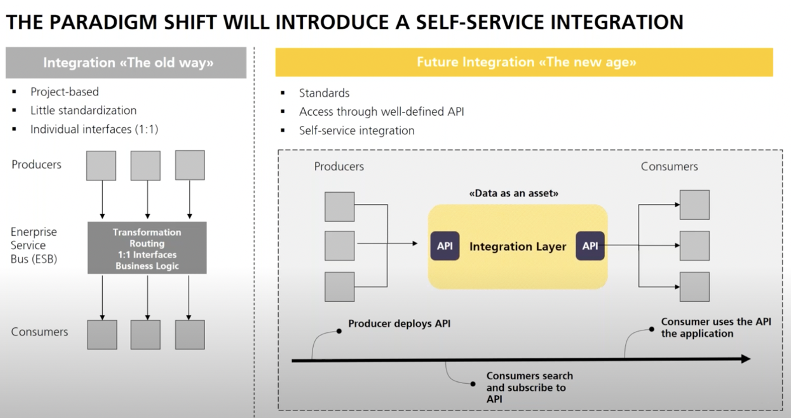

The Kafka-based integration layer processes small events and large legacy files and images.

The shift from ETL/ESB integration middleware to event-based and scalable Kafka is an approach many companies use nowadays:

DHL: Parcel and letter express service with cloud-native middleware

The German logistics company DHL is a subsidiary of Deutsche Post AG. DHL Express is the market leader for parcel services in Europe.

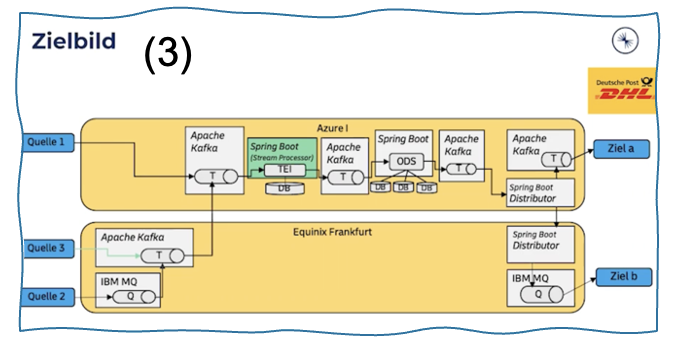

Like Swiss Post, DHL modernized its integration architecture with data streaming. They complement MQ and ESB with data streaming powered by Kafka and Confluent. Check out the comparison between message queue systems and Apache Kafka to understand why adding Kafka is sometimes a better approach than initially trying to replace MQ with Kafka.

Here is the target future hybrid enterprise architecture of DHL with IBM MQ, Apache Kafka, and Spring Boot applications:

This is a very common approach to modernizing middleware infrastructure. Here, the on-premise middleware based on IBM MQ and Oracle WebLogic struggles to scale, even though we are “only” talking about a few thousand messages per second.

A few more notes about DHL’s middleware migration journey:

- Migration to a cloud-native Kubernetes Microservices infrastructure

- Migration to Azure Cloud planned with Cluster Linking

- Mid-term: Replacement of the legacy ESB.

An interesting side note: DHL processes relatively large messages (70 KB) with Kafka, resulting in hundreds of MB/sec.
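A quick back-of-the-envelope check shows how these numbers fit together. The sketch below assumes 3,000 messages per second as one possible reading of “a few thousand”; that figure is an illustrative assumption, not a number published by DHL.

```python
# Back-of-the-envelope throughput estimate (illustrative numbers only).
MESSAGE_SIZE_KB = 70          # message size mentioned in the side note
MESSAGES_PER_SECOND = 3_000   # assumption: one value for "a few thousand"


def throughput_mb_per_sec(message_size_kb: float, messages_per_second: float) -> float:
    """Return the raw payload throughput in MB/s (using 1 MB = 1,000 KB)."""
    return message_size_kb * messages_per_second / 1_000


print(throughput_mb_per_sec(MESSAGE_SIZE_KB, MESSAGES_PER_SECOND))  # 210.0
```

Even a modest message rate lands in the hundreds of MB/sec once each message carries tens of kilobytes, which is why message size matters as much as message count when sizing a cluster.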

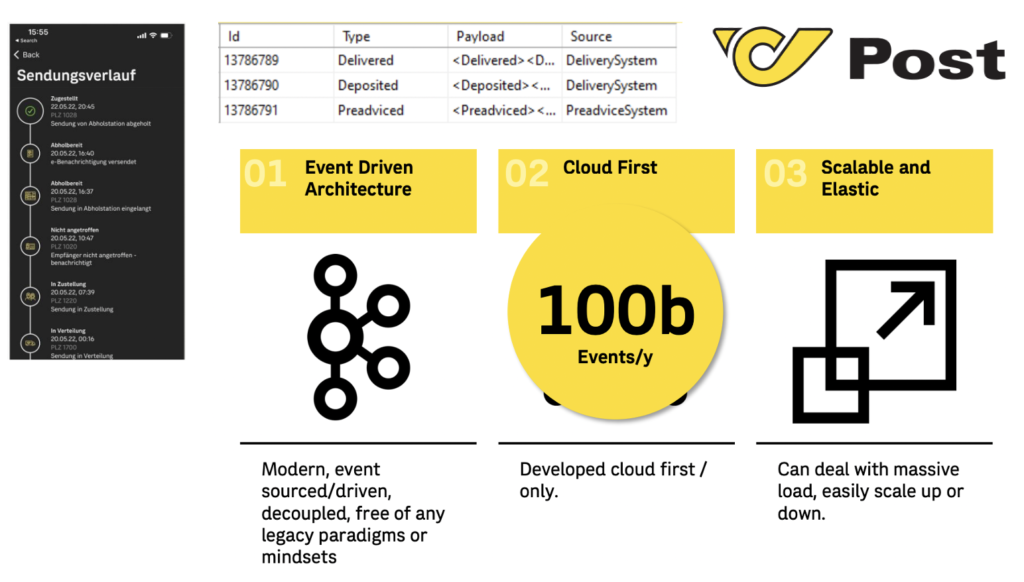

Austrian Post: Track & trace parcels in the cloud with Kafka

Austrian Post leverages data streaming to track and trace parcels end-to-end across the delivery routes:

Austrian Post’s data streaming infrastructure runs on Microsoft Azure. They evaluated three technologies, with the following results in their own words:

- Azure Event Hubs (fully managed, only the Kafka protocol, not true Kafka, with various limitations): Not flexible enough, limited stream processing, no schema registry.

- Apache Kafka (open source, self-managed): Way too much hassle.

- Confluent Cloud on Azure (fully-managed, complete platform): Selected option.

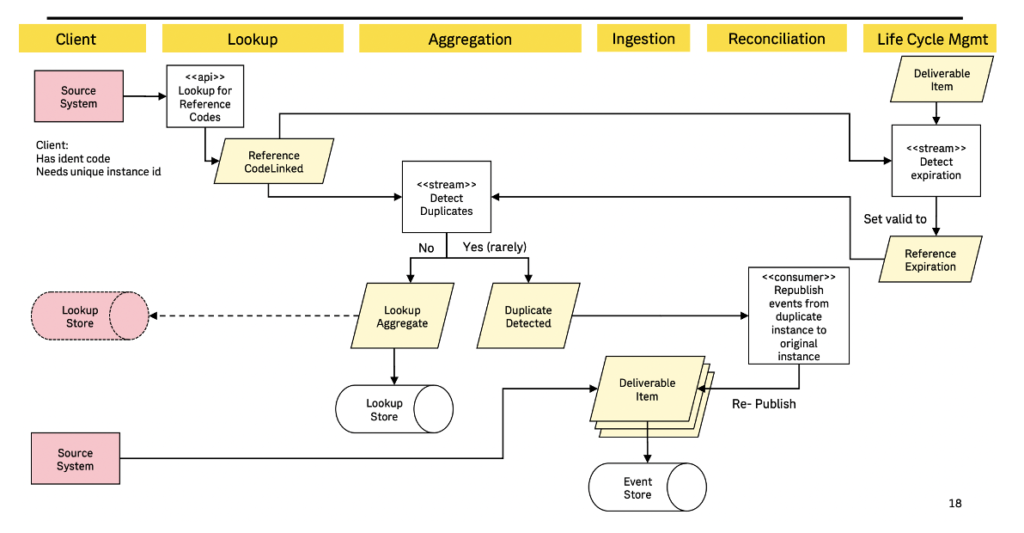

One example use case at Austrian Post deals with a problem with ident codes: they are not unique. Instead, they can be (and will be) reused. A shipment can have more than one ident code. Scan events for ident codes need to be added to the correct “digital twin” of the parcel delivery.

Stream processing enables the implementation of such a stateful business process:
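To illustrate why this process needs state, here is a minimal in-memory sketch in plain Python. It is not Austrian Post’s implementation: the class names, the event types (`register`, `scan`, `deliver`), and the rule that a delivery releases the ident codes for reuse are all assumptions made for this example. In a real deployment, a stream processing engine with a fault-tolerant state store would play the role of the `TwinStore`.

```python
from dataclasses import dataclass, field


@dataclass
class ParcelTwin:
    """Hypothetical digital twin of one shipment."""
    shipment_id: str
    ident_codes: set
    scans: list = field(default_factory=list)
    delivered: bool = False


class TwinStore:
    """Stateful mapping from (reusable) ident codes to the active shipment."""

    def __init__(self):
        self.twins = {}          # shipment_id -> ParcelTwin
        self.active_codes = {}   # ident code -> shipment_id currently using it

    def register(self, shipment_id, ident_codes):
        # A shipment may carry more than one ident code.
        twin = ParcelTwin(shipment_id, set(ident_codes))
        self.twins[shipment_id] = twin
        for code in ident_codes:
            self.active_codes[code] = shipment_id
        return twin

    def scan(self, ident_code, location):
        # Route the scan to whichever shipment currently owns the code.
        shipment_id = self.active_codes.get(ident_code)
        if shipment_id is None:
            return None  # unknown code; a real system might park the event
        twin = self.twins[shipment_id]
        twin.scans.append((ident_code, location))
        return twin

    def deliver(self, shipment_id):
        # Assumption: delivery closes the twin and frees its codes for reuse.
        twin = self.twins[shipment_id]
        twin.delivered = True
        for code in twin.ident_codes:
            if self.active_codes.get(code) == shipment_id:
                del self.active_codes[code]


store = TwinStore()
store.register("S1", ["A1", "B7"])
store.scan("A1", "Vienna hub")
store.scan("B7", "Graz depot")
store.deliver("S1")
store.register("S2", ["A1"])   # ident code A1 is reused by a new shipment
store.scan("A1", "Linz hub")
print(len(store.twins["S1"].scans), len(store.twins["S2"].scans))  # 2 1
```

The key point is that the same ident code `A1` ends up on two different twins depending on when the scan arrives, which a stateless lookup by code alone could not get right.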

Hermes: Predictive delivery planning with CDC and Kafka

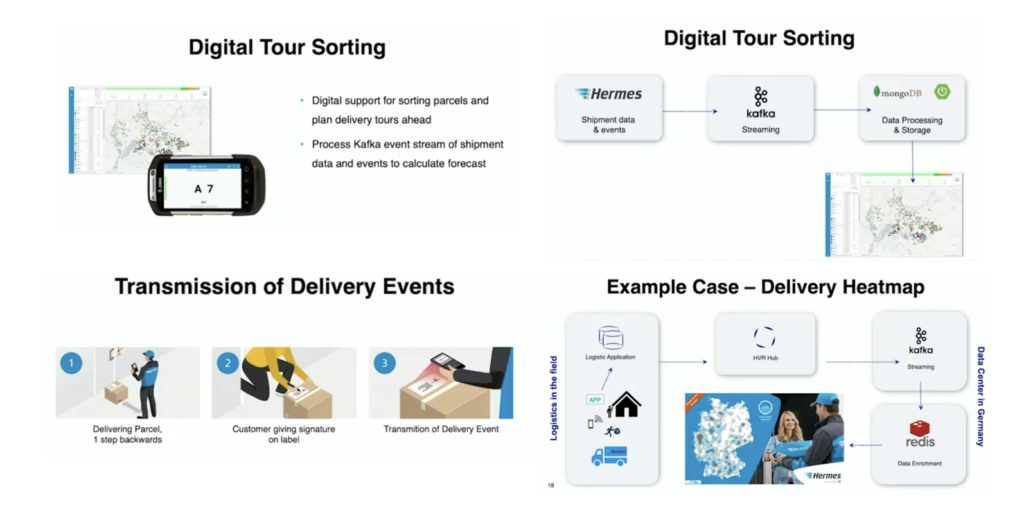

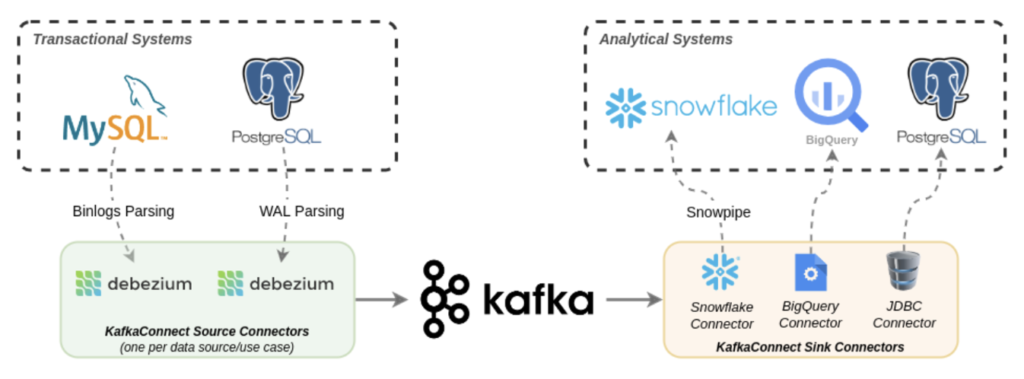

Hermes is another German delivery company. Their goal: Making business decisions more data-driven with real-time analytics. To achieve this goal, Hermes integrates, processes, and correlates data generated by machines, companies, humans, and interactions for predictive delivery planning.

They leverage Change Data Capture (CDC) with HVR and Kafka for real-time delivery and collection services. Databases like MongoDB and Redis provide long-term storage and analytical capabilities:

This is an excellent example of technology and architecture modernization, combining data streaming and various databases.
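To make the CDC idea more concrete, the following Python sketch replays a stream of change events into an in-memory view. The event format (`op`, `key`, `after`) and the parcel fields are hypothetical and chosen only for illustration; they are not the format HVR or Hermes actually uses.

```python
def apply_change(table: dict, event: dict) -> None:
    """Apply one change event to an in-memory materialized view."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Merge the changed columns into the existing row (if any).
        table[key] = {**table.get(key, {}), **event["after"]}
    elif op == "delete":
        table.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


# Hypothetical change log, in the order the source database committed it.
change_log = [
    {"op": "insert", "key": "P-1", "after": {"status": "collected", "depot": "Hamburg"}},
    {"op": "update", "key": "P-1", "after": {"status": "in_transit"}},
    {"op": "insert", "key": "P-2", "after": {"status": "collected", "depot": "Berlin"}},
    {"op": "delete", "key": "P-2"},
]

parcels = {}
for event in change_log:
    apply_change(parcels, event)

print(parcels)  # {'P-1': {'status': 'in_transit', 'depot': 'Hamburg'}}
```

Because every change arrives as an ordered event, any downstream system can rebuild the current state by replaying the log, without querying the source database directly.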

USPS: Digital representation of all critical assets in Kafka for real-time logistics

USPS (United States Postal Service) is by geography and volume the globe’s largest postal system. They started the Kafka journey in 2016. Today, USPS operates a hybrid multi-cloud environment including real-time replication across regions.

“Kafka processes every event that is important for us,” said USPS CIO Pritha Mehra at Current 2022. Kafka events provide a digital representation of all assets important to USPS, including carrier movement, vehicle movement, trucks, package scans, etc. For instance, USPS processes 900 million scans per day.

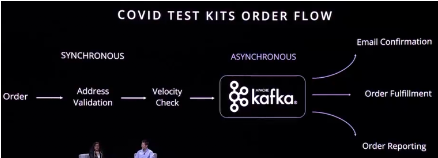

One interesting use case was an immediate response to a White House directive in late 2021 to send Covid test kits to every American free of charge. Time-to-market for the project was three weeks (!). USPS processed up to 8.7 million test kits per hour with help from Kafka:

Baader: Real-time logistics for dynamic routing and ETA calculations

BAADER is a worldwide manufacturer of innovative machinery for the food processing industry. They run an IoT-based and data-driven food value chain on Confluent Cloud.

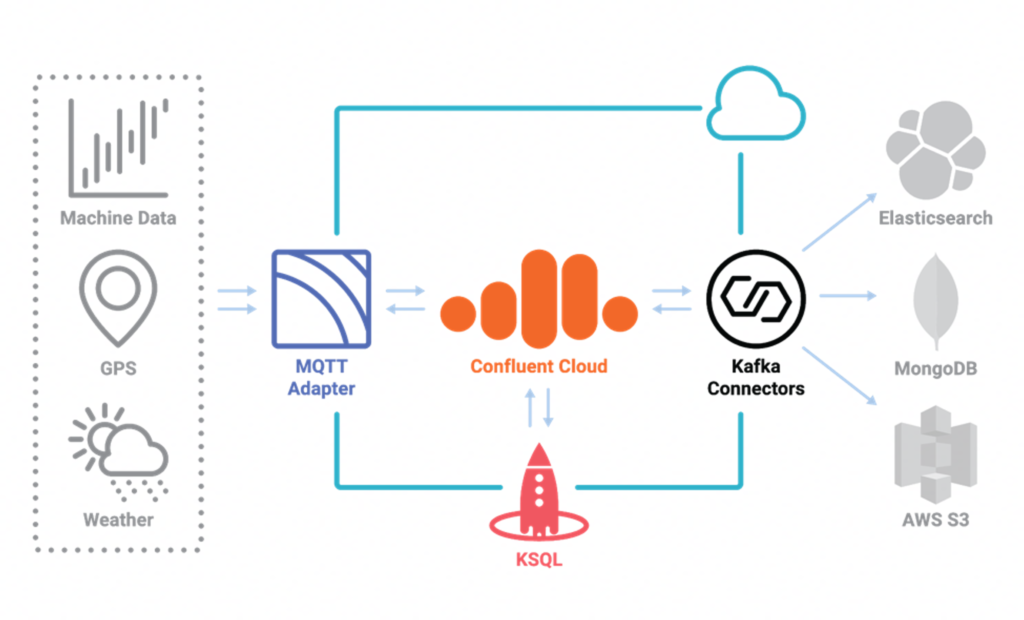

The Kafka-based infrastructure runs as a fully-managed service in the cloud. It provides a single source of truth for the factories and regions across the food value chain. Business-critical operations are available 24/7 for tracking, calculations, alerts, etc.:

MQTT provides connectivity to machines and GPS data from vehicles at the edge. Kafka Connect connectors integrate MQTT and IT systems, such as Elasticsearch, MongoDB, and AWS S3. ksqlDB processes the data in motion continuously.

Check my blog series about Kafka and MQTT for other related IoT use cases and examples.

Shippeo: A Kafka-native transportation platform for logistics providers, shippers, and carriers

Shippeo provides real-time and multimodal transportation visibility for logistics providers, shippers, and carriers. Its software uses automation and artificial intelligence to share real-time insights, enable better collaboration, and unlock your supply chain’s full potential. The platform can give instant access to predictive, real-time information for every delivery.

This is a terrific example of cloud-native enterprise architecture leveraging a “best of breed” approach for data warehousing and analytics. Kafka decouples the analytical workloads from the transactional systems and handles the backpressure for slow consumers.
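The decoupling works because consumers read from a persisted log at their own pace instead of having data pushed at them. The sketch below is a deliberately simplified, in-memory model of that idea in Python. The `TopicLog` class and the consumer names are made up for this example, and it ignores partitions, consumer groups, and retention, all of which a real Kafka cluster adds.

```python
class TopicLog:
    """Simplified append-only log; each consumer tracks its own read position."""

    def __init__(self):
        self._records = []
        self._offsets = {}   # consumer name -> next offset to read

    def append(self, record):
        self._records.append(record)

    def poll(self, consumer, max_records):
        # Each consumer pulls at most max_records from its own position.
        start = self._offsets.get(consumer, 0)
        batch = self._records[start:start + max_records]
        self._offsets[consumer] = start + len(batch)
        return batch

    def lag(self, consumer):
        # Number of records this consumer has not read yet.
        return len(self._records) - self._offsets.get(consumer, 0)


log = TopicLog()
for i in range(10):
    log.append({"shipment": i})

fast = log.poll("tracking-ui", max_records=10)        # hypothetical fast consumer
slow = log.poll("warehouse-loader", max_records=3)    # hypothetical slow consumer

print(len(fast), len(slow), log.lag("tracking-ui"), log.lag("warehouse-loader"))  # 10 3 0 7
```

The slow consumer simply falls behind and catches up later; neither the producer nor the fast consumer is blocked by it.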

A real-time locating system (RTLS) built with Apache Kafka

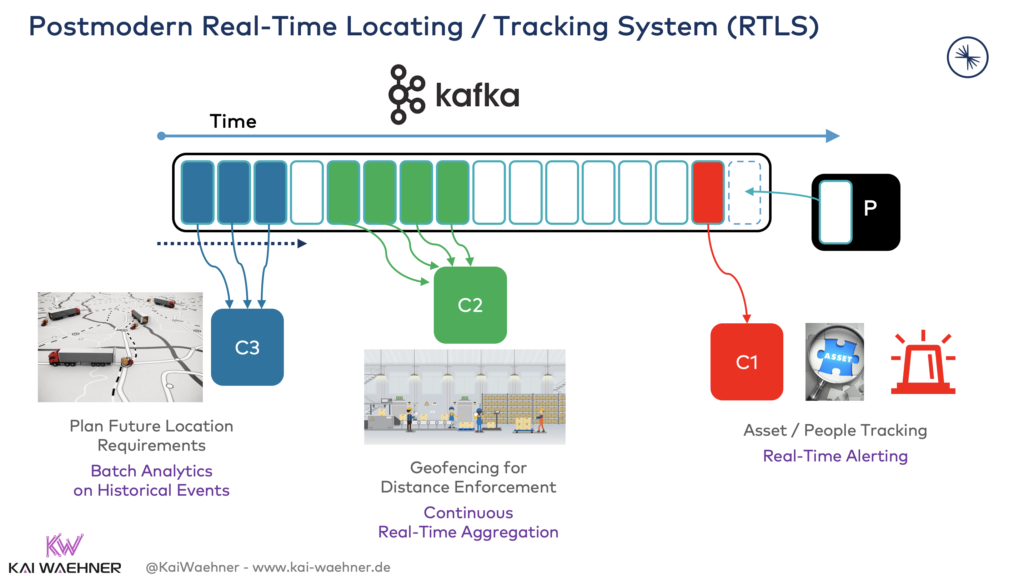

I want to end this blog post with a more concrete example of a Kafka implementation. The following picture shows a multi-purpose Kafka-native real-time locating system (RTLS) for transportation and logistics:

The example shows three use cases of how produced events (“P”) are consumed and processed:

- (“C1”) Real-time alerting on a single event: Monitor assets and people and send an alert to a controller, mobile app, or any other interface if an issue happens.

- (“C2”) Continuous real-time aggregation of multiple events: Correlate data while in motion. Calculate averages, enforce business rules, apply an analytic model for predictions on new events, or run any other business logic.

- (“C3”) Batch analytics on all historical events: Take all historical data to find insights, e.g., for analyzing past issues, planning future location requirements, or training analytic models.
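The three consumption patterns above can be sketched in a few lines of plain Python. This is only an illustration: the event fields (`asset`, `zone`, `speed_kmh`), the restricted-zone rule, and the function names are assumptions, and a Python list stands in for the Kafka topic.

```python
from collections import defaultdict

# Hypothetical location events, as a producer ("P") might write them.
events = [
    {"asset": "forklift-1", "zone": "dock", "speed_kmh": 6.0},
    {"asset": "forklift-1", "zone": "dock", "speed_kmh": 14.0},
    {"asset": "pallet-9", "zone": "restricted", "speed_kmh": 0.0},
    {"asset": "forklift-1", "zone": "aisle-3", "speed_kmh": 10.0},
]


def c1_alert(event):
    """C1: decide on a single event; no state is needed."""
    if event["zone"] == "restricted":
        return f"ALERT: {event['asset']} entered restricted zone"
    return None


class C2RunningAverage:
    """C2: keep per-asset state and update it with every new event."""

    def __init__(self):
        self._count = defaultdict(int)
        self._total = defaultdict(float)

    def update(self, event):
        asset = event["asset"]
        self._count[asset] += 1
        self._total[asset] += event["speed_kmh"]
        return self._total[asset] / self._count[asset]


def c3_zone_visits(history):
    """C3: batch job over the full history of events."""
    visits = defaultdict(int)
    for event in history:
        visits[event["zone"]] += 1
    return dict(visits)


alerts = [a for a in map(c1_alert, events) if a]
averager = C2RunningAverage()
running = [averager.update(e) for e in events]

print(alerts)                  # ['ALERT: pallet-9 entered restricted zone']
print(running)                 # [6.0, 10.0, 0.0, 10.0]
print(c3_zone_visits(events))  # {'dock': 2, 'restricted': 1, 'aisle-3': 1}
```

All three consumers read the same events independently, which is what makes the system multi-purpose: new use cases can be added later without touching the producers.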

The Kafka-native RTLS can run in the data center, cloud, or closer to the edge, e.g., in a factory close to the shop floor and production lines. The blog post “Real Time Locating System (RTLS) with Apache Kafka for Transportation and Logistics” explores this use case in more detail.

The logistics and transportation industry requires Kafka-native real-time data streams!

Real-time data beats slow data. That’s true almost everywhere. But logistics, shipping, and transportation cannot build efficient and innovative business models without real-time information and correlated decisions, recommendations, and alerts. Kafka is everywhere in this industry. And it is just getting started.

After writing the blog post, I realized most case studies were from European companies. This is purely accidental. I assure you that similar companies in the US, Asia, or Australia have built or are building similar enterprise architectures.

If you still want to learn more, here are more related blog posts:

- Real-time supply chain optimization with Apache Kafka

- End-to-end visibility with a Kafka-powered supply chain control tower

- Data streaming with Kafka in the aviation, airline, and travel industry

- Improving the customer experience in transportation at Deutsche Bahn (German railway) with Kafka

- Supply chain integration across the food value chain with Kafka

What role does data streaming play in your logistics and transportation scenarios? Do you run everything around Kafka in the cloud or operate hybrid edge scenarios? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.