Energy trading and data streaming are connected because real-time data helps traders make better decisions in the fast-moving energy markets. This data includes things like price changes, supply and demand, smart IoT meters and sensors, and weather, which help traders react quickly and plan effectively. As a result, data streaming with Apache Kafka and Apache Flink makes the market clearer, speeds up information sharing, and improves forecasting and risk management. This blog post explores the use cases and architectures for scalable and reliable real-time energy trading, including real-world deployments from Uniper, re.alto and Powerledger.

What is Energy Trading?

Energy trading is the process of buying and selling energy commodities in order to manage risk, optimize costs, and ensure the efficient distribution of energy. Commodities traded include:

- Electricity: Traded in wholesale markets to balance supply and demand.

- Natural Gas: Bought and sold for heating, electricity generation, and industrial use.

- Oil: Crude oil and refined products like gasoline and diesel are traded globally.

- Renewable Energy Certificates (RECs): Represent proof that energy was generated from renewable sources.

Market Participants:

- Producers: Companies that extract or generate energy.

- Utilities: Entities that distribute energy to consumers.

- Industrial Consumers: Large energy users that purchase energy directly.

- Traders and Financial Institutions: Participants that buy and sell energy contracts for profit or risk management.

Objectives of Energy Trading

The objectives for energy trading are risk management (hedging against price volatility and supply disruptions), cost optimization (securing energy at the best possible prices) and revenue generation (profiting from price differences in different markets).

Market types include:

- Spot Markets: Immediate delivery and payment of energy commodities.

- Futures Markets: Contracts to buy or sell a commodity at a future date, helping manage price risks.

- Over-the-Counter (OTC) Markets: Direct trades between parties, often customized contracts.

- Exchanges: Platforms like the New York Mercantile Exchange (NYMEX) and Intercontinental Exchange (ICE) where standardized contracts are traded.

Energy trading is subject to extensive regulation to ensure fair practices, prevent market manipulation, and protect consumers.

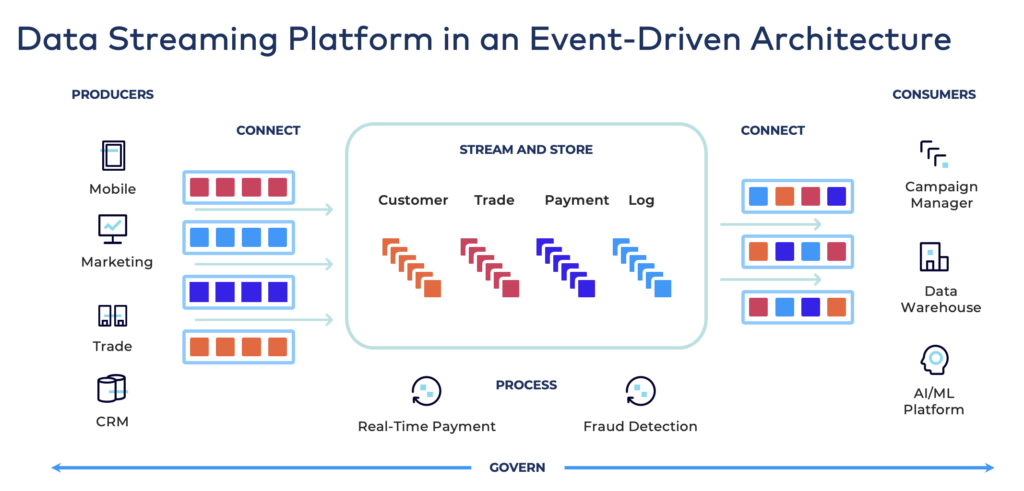

What is Data Streaming with Apache Kafka and Flink?

Data streaming with Apache Kafka and Flink provides a unique combination of capabilities:

- Real-time messaging at scale for analytical and transactional workloads.

- Event store for durability, true decoupling and the ability to travel back in time for replayability of events with guaranteed ordering.

- Data integration with any data source and sink (real-time, near real-time, batch, request response APIs, files, etc.)

- Stream processing for stateless and stateful correlations of data for streaming ETL and business applications

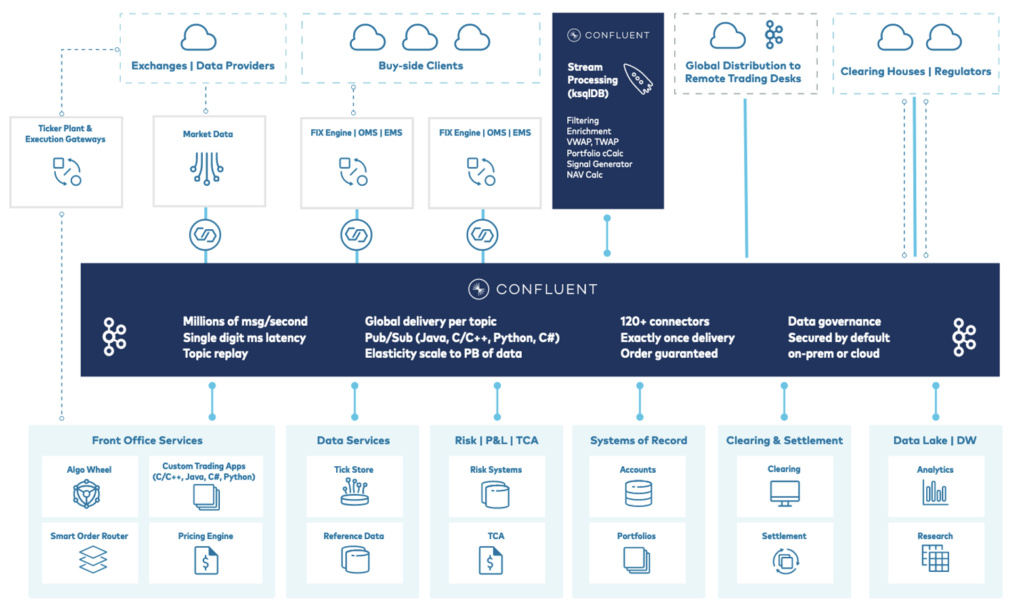

Trading Architecture with Apache Kafka

Many trading markets use data streaming with Apache Kafka under the hood to integrate with internal systems, external exchanges and data providers, clearing houses and regulators:

For instance, NASDAQ combines critical stock exchange trading with low-latency streaming analytics. This is not much different for energy trading, even though the interfaces and challenges differ a bit as additionally various IoT data sources are involved.

Why Apache Kafka and Flink for Energy Trading?

Data streaming with Apache Kafka and Apache Flink is highly beneficial for energy trading for several reasons across the end-to-end business process and data pipelines.

Here is why these technologies are often used in the energy sector:

Real-Time Data Processing

Real-Time Analytics: Energy trading relies on real-time data to make informed decisions. Kafka and Flink can process data streams in real-time, providing immediate insights into market conditions, energy consumption and production levels.

Immediate Response: Real-time processing allows traders to respond instantly to market changes, such as price fluctuations or sudden changes in supply and demand, optimizing trading strategies and mitigating risks.

Scalability and Performance

Scalability: Both Kafka and Flink handle high-throughput data streams. This scalability is crucial for energy markets, which generate vast amounts of data from multiple sources, including sensors, smart meters, and market feeds.

High Performance: Data streaming enables fast data processing and analysis. Kafka ensures low-latency data ingestion, while Flink provides efficient, distributed stream processing.

Fault Tolerance and Reliability

Fault Tolerance: Kafka’s distributed architecture ensures data durability and fault tolerance, essential for the continuous operation of energy trading systems.

Reliability: Flink offers exactly-once processing semantics, ensuring that each piece of data is processed accurately without loss or duplication, which is critical for maintaining data integrity in trading operations.

Integration and Flexibility

Integration Capabilities: Kafka can integrate with various data sources and sinks via Kafka Connect or Client APIs like Java, C, C++, Python, JavaScript or REST/HTTP. This making it versatile for collecting data from different energy systems. Flink can process this data in real-time and output the results to various storage systems or dashboards.

Flexible Data Processing: Flink supports complex event processing, windowed computations, and machine learning, allowing for advanced analytics and predictive modeling in energy trading.

Event-Driven Architecture (EDA)

Event-Driven Processing: Energy trading can benefit from an event-driven architecture where trading decisions and alerts are triggered by specific events, such as market price thresholds or changes in energy production. Kafka and Flink facilitate this approach by efficiently handling event streams.

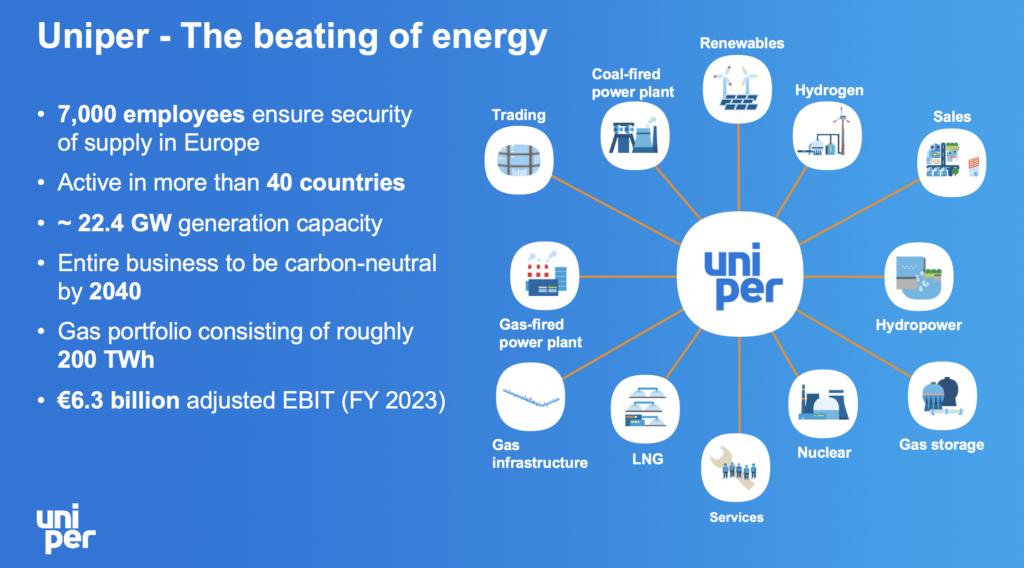

Energy Trading at Uniper

Uniper is a global energy company headquartered in Germany that focuses on power generation, energy trading, and energy storage solutions, providing electricity, natural gas, and other energy services to customers worldwide.

Uniper’s Business Value of Data Streaming

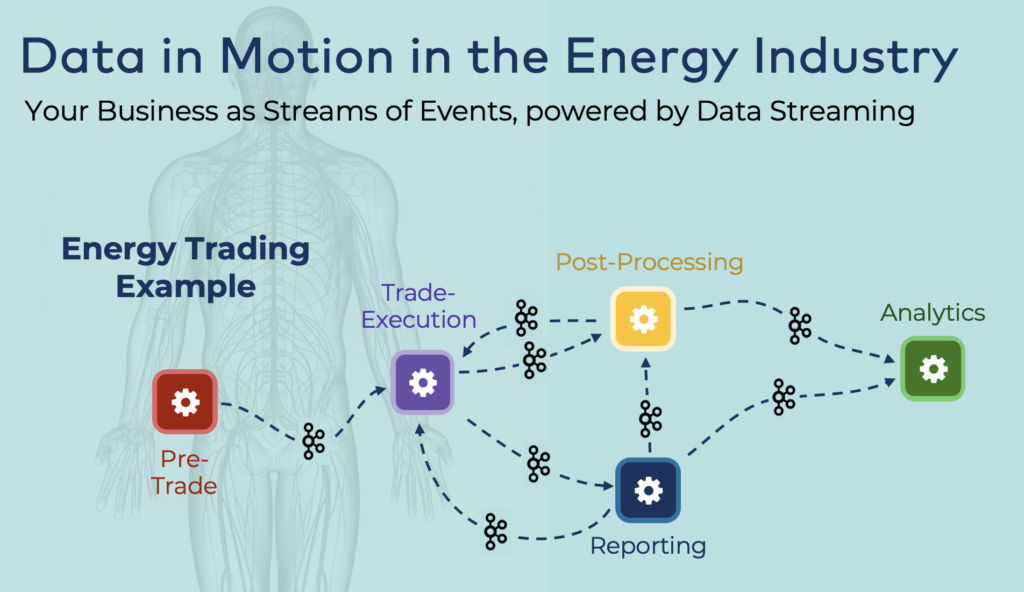

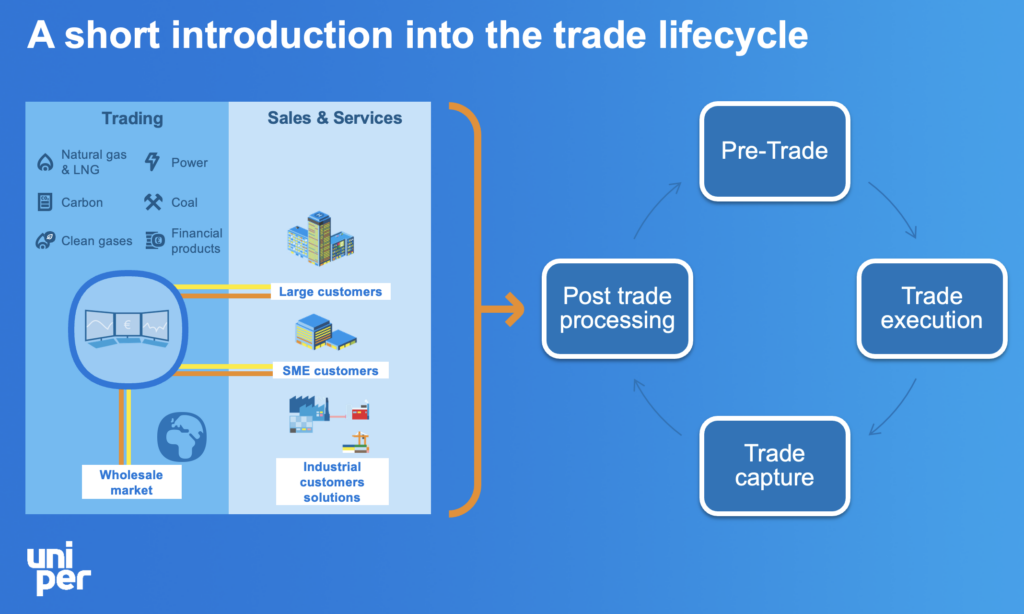

Why has Uniper chosen to use the Apache Kafka and Apache Flink ecosystem? If you look at the trade lifecycle in the energy sector, you probably can find out by yourself:

The underlying process is much more complex than the above picture. For instance, pre-trading including aspects like capacity management. If you trade energy between the Netherlands and Germany, the transportation of the energy needs to be planned while executing the trade. Uniper explained the process in much more details in the below webinar recording.

Here are Uniper’s benefits of implementing the trade lifecycle with data streaming using Kafka and Flink, as they described them:

Business-driven:

- Increase of trading volumes

- More messages per day

- Faster processing of data

Architecture-driven:

- Decoupling of applications

- Faster processing of data – batch vs. streaming data

- Reusability of data

Uniper’s IT Landscape

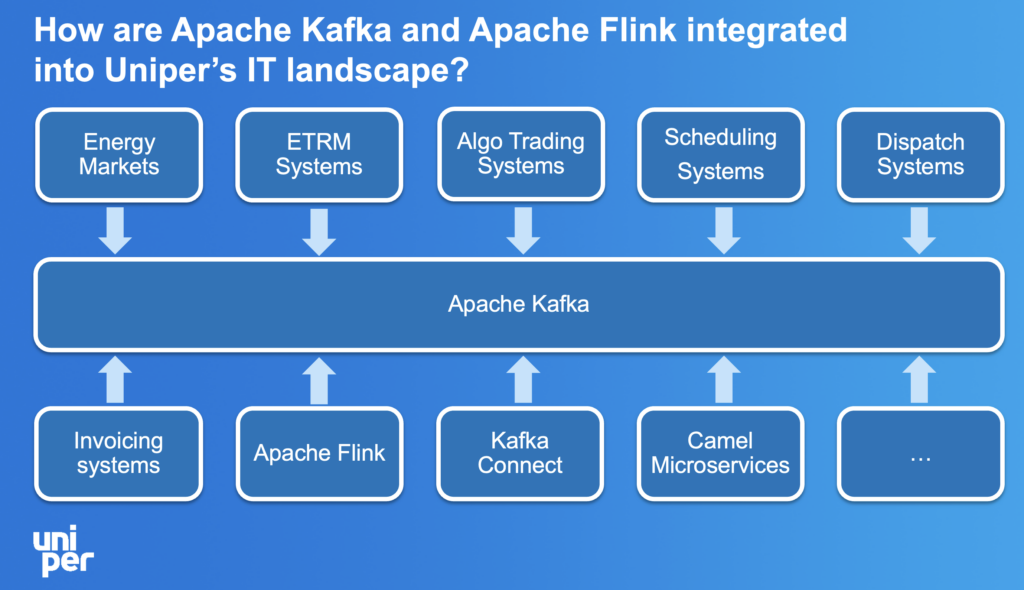

Uniper’s enterprise architecture leverages data streaming as central nervous systems between various technical platforms (integrated via Kafka Connect or Apache Camel) and business applications (e.g., algorithmic trading, dispatch and invoicing systems).

Uniper runs mission-critical workloads through Kafka. Confluent Cloud provides the right scale, elasticity, and SLAs for such use cases. Apache Flink serves ETL use cases for continuous stream processing.

Kafka Connect provides many connectors for direct integration with (non)streaming interfaces. Apache Camel is used for some other protocols that do not fit well into a native Kafka connector. Camel is an integration framework with native Kafka integration.

Fun fact: If you did not know: I have a history with Apache Camel, too. I worked a lot with this open source framework as independent consultant and at Talend with its Enterprise Service Bus (ESB) powered by Apache Camel. Hence, my blog has some articles about Apache Camel, too. Including: “When to use Apache Camel vs. Apache Kafka?”

Webinar Recording: Energy Trading with Kafka and Flink @ Uniper

The following on-demand webinar recording explores the relation between data streaming and energy trading in more detail. Uniper’s Alex Esseling (Platform & Security Architect, Sales & Trading IT) discusses Apache Kafka and Flink inside energy trading at Uniper:

IoT Data for Energy Trading

Energy trading differs a bit from traditional trading on Nasdaq and similar financial markets as IoT data is an important additional data source for several key reasons:

1. Real-Time Market Insights

- Live Data Feed: IoT devices, such as smart meters and sensors, provide real-time data on energy production, consumption, and grid status, enabling traders to make informed decisions based on the latest market conditions.

- Demand Forecasting: Accurate demand forecasting relies on real-time consumption data, which IoT devices supply continuously, helping traders anticipate market movements and adjust their strategies accordingly.

2. Enhanced Decision Making

- Predictive Analytics: IoT data allows for sophisticated predictive analytics, helping traders forecast price trends, identify potential supply disruptions, and optimize trading positions.

- Risk Management: Continuous monitoring of energy infrastructure through IoT sensors helps in identifying and mitigating risks, such as equipment failures or grid imbalances, which could affect trading decisions.

A typical use case in energy trading might involve:

- Data Collection: IoT devices across the energy grid collect data on energy production from renewable sources, consumption patterns in residential and commercial areas, and grid stability metrics.

- Data Analysis: This data is streamed and processed in real-time using platforms like Apache Kafka and Flink, enabling immediate analysis and visualization.

- Trading Decisions: Traders use the insights derived from this analysis to make informed decisions about buying and selling energy, optimizing their strategies based on current and predicted market conditions.

In summary, IoT data is essential in energy trading for providing real-time insights, enhancing decision-making, optimizing grid operations, ensuring compliance, and integrating renewable energy sources, ultimately leading to a more efficient and responsive energy market.

Data Streaming to Ingest IoT Data into Energy Trading

Data streaming with Kafka and Flink is deployed in various edge and hybrid cloud energy use cases.

As discussed above, some of the IoT data is very helpful for energy trading, not just for OT and operational workloads. Read about data streaming in the IoT space in the following articles:

- Apache Kafka for Industrial IoT and Manufacturing 4.0

- A cloud-native SCADA System for Industrial IoT built with Apache Kafka

- OPC UA, MQTT, and Apache Kafka – The Trinity of Data Streaming in IoT

Powerledger – Energy Trading with Kafka, MongoDB and Blockchain

- Tracking, tracing and trading of renewable energy

- Blockchain-based energy trading platform

- Facilitating peer-to-peer (P2P) trading of excess electricity from rooftop solar power installations and virtual power plants

- Traceability with non-fungible tokens (NFTs) representing renewable energy certificates (RECs)

Powerledger uses a decentralised rather than the conventional unidirectional market. Benefits include reduced customer acquisition costs, increased customer satisfaction, better prices for buyers and sellers (compared with feed-in and supply tariffs), and provision for cross-retailer trading.

Apache Kafka via Confluent Cloud as a core piece of infrastructure, specifically to ingest data from smart electricity meters and feed it into the trading system.

Wondering why to combine Kafka and Blockchain? Learn more here: “Apache Kafka and Blockchain – Comparison and a Kafka-native Implementation“.

re.alto – Solar Trading: Insights into the Production of Solar Plants

re.alto is a company that provides a digital marketplace for energy data and services. Their platform connects energy providers, consumers, and developers, facilitating the exchange of data and APIs (application programming interfaces) to optimize energy usage and distribution. By enabling seamless access to diverse energy-related data, re.alto supports innovation, enhances energy efficiency, and helps create smarter, more flexible energy systems.

re.alto presented their data streaming use cases at the Data in Motion Tour in Brussels, Belgium:

- Xenn: Real time monitoring of energy costs

- Smart charging: Schedule the charging of an electric vehicle to reduce costs or environmental impact

- Solar trading: Insights into the production of solar plants

Let’s explore solar trading in more detail. re.alto presented about its platform at the Confluent Data in Motion Tour 2024 in Brussels, Belgium. re.alto’s platform provides connectivity and APIs for market pricing data but also IoT integration to smart meters, grid data, batteries, SCADA systems, etc.:

Solar trading includes three steps:

- Data collection from sources such as SMA, FIMER, HUAWEI, solar edge

- Data processing with data streaming, time series analytics and overvoltage detection

- Providing data via ePox Spot and APIs / marketplace

Energy Trading Needs Reliable Real-Time Data Feeds, Connectivity and Reliability

Energy trading requires scalable and reliable real-time data processing. In contrary to trading in financial markets, the energy sector additional integrates IoT data sources like smart meters and sensors.

Uniper, re.alto and Powerledger are excellent examples of how to build a reliable energy trading platform powered by data streaming.

How does your enterprise architecture look like for energy trading? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.