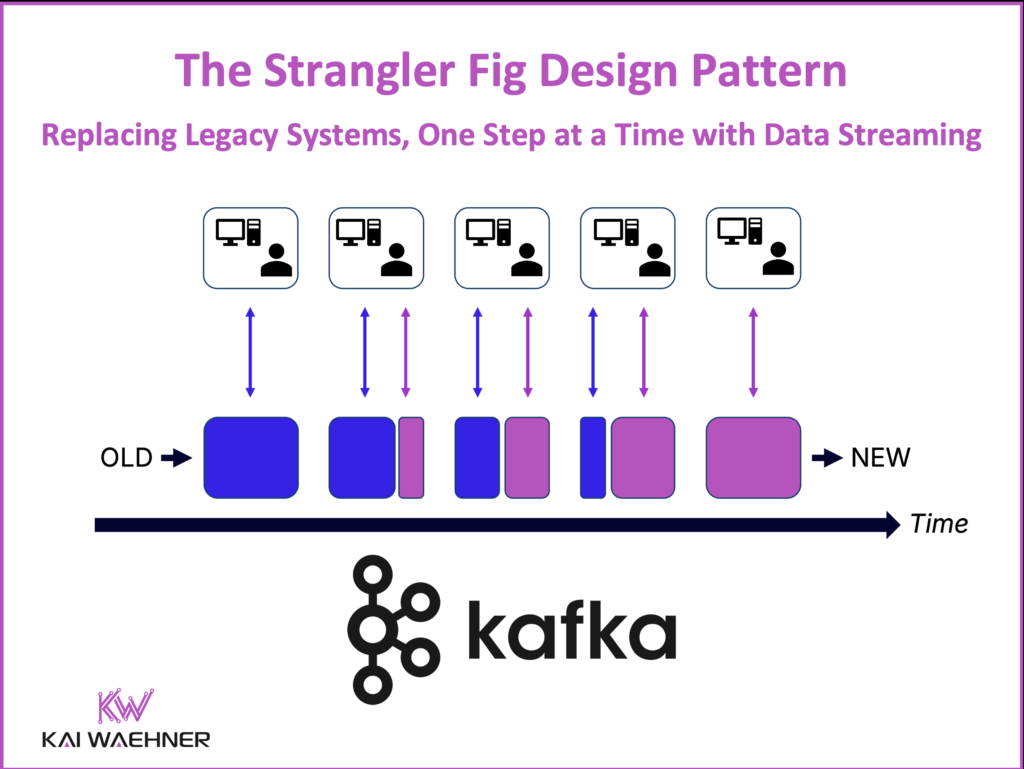

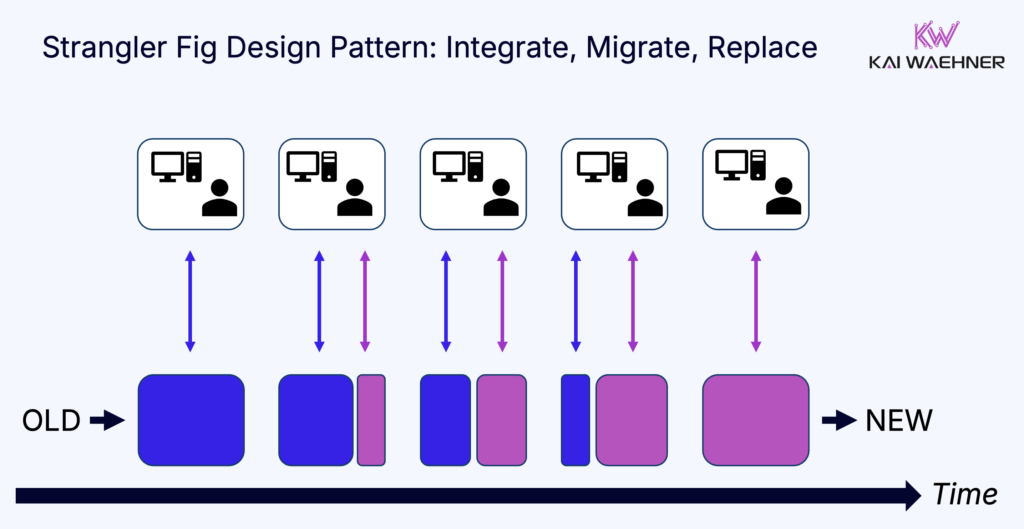

Organizations looking to modernize legacy applications often face a high-stakes dilemma: Do they attempt a complete rewrite or find a more gradual, low-risk approach? Enter the Strangler Fig Pattern, a method that systematically replaces legacy components while keeping the existing system running. Unlike the “Big Bang” approach, where companies try to rewrite everything at once, the Strangler Fig Pattern ensures smooth transitions, minimizes disruptions, and allows businesses to modernize at their own pace. Data streaming transforms the Strangler Fig Pattern into a more powerful, scalable, and truly decoupled approach. Let’s explore why this approach is superior to traditional migration strategies and how real-world enterprises like Allianz are leveraging it successfully.

Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter, and follow me on LinkedIn or X (formerly Twitter) to stay in touch. You can also download my free book about data streaming architectures and use cases, including various use cases from the retail industry.

What is the Strangler Fig Design Pattern?

The Strangler Fig Pattern is a gradual modernization approach that allows organizations to replace legacy systems incrementally. The pattern was coined and popularized by Martin Fowler to avoid risky “big bang” system rewrites.

Inspired by the way strangler fig trees grow around and eventually replace their host, this pattern surrounds the old system with new services until the legacy components are no longer needed. By decoupling functionalities and migrating them piece by piece, businesses can minimize disruptions, reduce risk, and ensure a seamless transition to modern architectures.
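The routing idea behind the pattern can be sketched in a few lines. This is a minimal illustration, not a reference implementation: `StranglerFacade`, `legacy_handler`, `modern_handler`, and the module names are hypothetical stand-ins for a real gateway, the legacy system, and the new services.

```python
from typing import Callable, Set

Handler = Callable[[str, dict], str]


def legacy_handler(module: str, payload: dict) -> str:
    # Hypothetical stand-in for a call into the legacy system.
    return f"legacy:{module}"


def modern_handler(module: str, payload: dict) -> str:
    # Hypothetical stand-in for a call into a new service.
    return f"modern:{module}"


class StranglerFacade:
    """Sends each call to the legacy system unless its module has been migrated."""

    def __init__(self, legacy: Handler, modern: Handler) -> None:
        self._legacy = legacy
        self._modern = modern
        self._migrated: Set[str] = set()

    def migrate(self, module: str) -> None:
        self._migrated.add(module)

    def rollback(self, module: str) -> None:
        # Routing back to legacy is a one-line change, which keeps each step low-risk.
        self._migrated.discard(module)

    def handle(self, module: str, payload: dict) -> str:
        target = self._modern if module in self._migrated else self._legacy
        return target(module, payload)


facade = StranglerFacade(legacy_handler, modern_handler)
before = facade.handle("billing", {})
facade.migrate("billing")
after = facade.handle("billing", {})
untouched = facade.handle("claims", {})
```

Callers only ever talk to the facade, so a module can move to the new implementation (or back) without any change on their side.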

When combined with data streaming, this approach enables real-time synchronization between old and new systems, making the migration even smoother.

Why Strangler Fig is Better than a Big Bang Migration or Rewrite

Many organizations have learned the hard way that rewriting an entire system in one go is risky. A Big Bang migration or rewrite often:

- Takes years to complete, leading to stale requirements by the time it’s done

- Disrupts business operations, frustrating customers and teams

- Requires a high upfront investment with unclear ROI

- Involves hidden dependencies, making the transition unpredictable

The Strangler Fig Pattern takes a more incremental approach:

- Allows gradual replacement of legacy components, one service at a time

- Reduces business risk by keeping critical systems operational during migration

- Enables continuous feedback loops, ensuring early wins

- Keeps costs under control, as teams modernize based on priorities

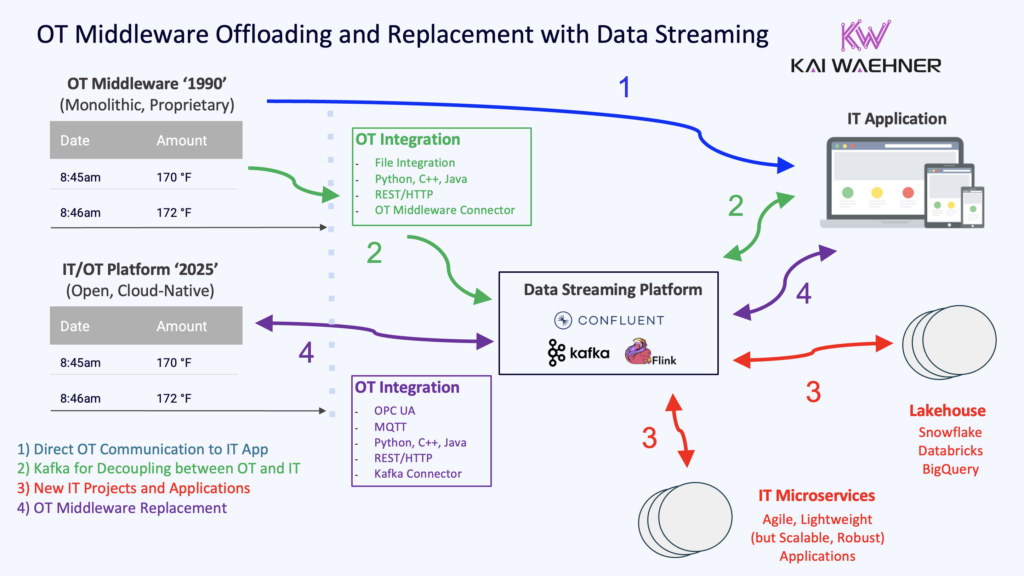

Here is an example from the Industrial IoT space of the Strangler Fig Pattern leveraging data streaming to modernize OT middleware:

If you come from the traditional IT world (banking, retail, etc.) and don’t care about IoT, then you can explore my article about mainframe integration, offloading and replacement with data streaming.

Instead of replacing everything at once, this method surrounds the old system with new components until the legacy system is fully replaced—just like a strangler fig tree growing around its host.

Better Than Reverse ETL: A Migration with Data Consistency across Operational and Analytical Applications

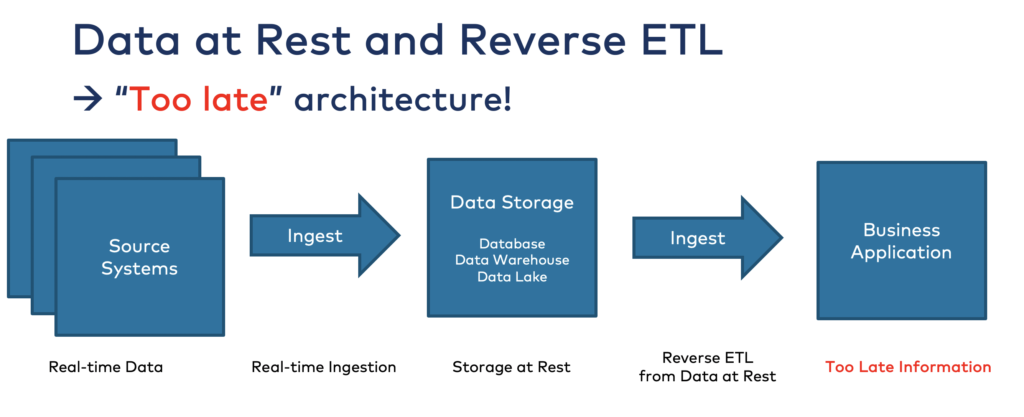

Some companies attempt to work around legacy constraints using Reverse ETL—extracting data from analytical systems and pushing it back into modern operational applications. On paper, this looks like a clever workaround. In reality, Reverse ETL is a fragile, high-maintenance anti-pattern that introduces more complexity than it solves.

Reverse ETL carries several critical flaws:

- Batch-based by nature: Data remains at rest in analytical lakehouses like Snowflake, Databricks, Google BigQuery, Microsoft Fabric or Amazon Redshift. It is then periodically moved—usually every few hours or once a day—back into operational systems. This results in outdated and stale data, which is dangerous for real-time business processes.

- Tightly coupled to legacy systems: Reverse ETL pipelines still depend on the availability and structure of the original legacy systems. A schema change, performance issue, or outage upstream can break downstream workflows—just like with traditional ETL.

- Slow and inefficient: It introduces latency at multiple stages, limiting the ability to react to real-world events at the right moment. Decision-making, personalization, fraud detection, and automation all suffer.

- Not cost-efficient: Reverse ETL tools often introduce double processing costs—you pay to compute and store the data in the warehouse, then again to extract, transform, and sync it back into operational systems. This increases both financial overhead and operational burden, especially as data volumes scale.

In short, Reverse ETL is a short-term fix for a long-term challenge. It’s a temporary bridge over the widening gap between real-time operational needs and legacy infrastructure.

Why Data Streaming with Apache Kafka and Flink is an Excellent Fit for the Strangler Fig Pattern

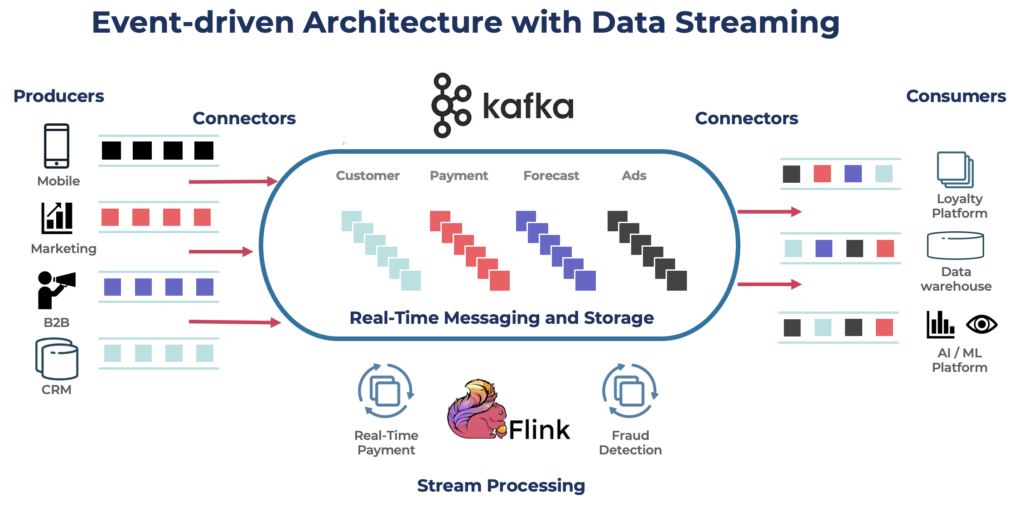

Many modernization efforts fail because they tightly couple old and new systems, making transitions painful. Data streaming with Apache Kafka and Flink changes the game by enabling real-time, event-driven communication.

1. True Decoupling of Old and New Systems

Data streaming using Apache Kafka with its event-driven streaming and persistence layer enables organizations to:

- Decouple producers (legacy apps) from consumers (modern apps)

- Process real-time and historical data without direct database dependencies

- Allow new applications to consume events at their own pace

This avoids dependency on old databases and enables teams to move forward without breaking existing workflows.
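To make the decoupling concrete, here is a small in-memory sketch of an append-only log with one read position per consumer. It is an assumption-laden toy, not Kafka's client API: `EventLog`, `poll`, `lag`, and the service names are hypothetical and only mimic the idea of topics and consumer offsets.

```python
from collections import defaultdict
from typing import Any, Dict, List


class EventLog:
    """In-memory stand-in for a topic: append-only, read by offset."""

    def __init__(self) -> None:
        self._events: List[Any] = []
        # Each consumer keeps its own position; reading never removes events.
        self._offsets: Dict[str, int] = defaultdict(int)

    def append(self, event: Any) -> int:
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, consumer: str, max_events: int) -> List[Any]:
        start = self._offsets[consumer]
        batch = self._events[start:start + max_events]
        self._offsets[consumer] = start + len(batch)
        return batch

    def lag(self, consumer: str) -> int:
        return len(self._events) - self._offsets[consumer]


log = EventLog()
# The legacy application only appends; it does not know who reads.
for order_id in range(5):
    log.append({"order_id": order_id})

fast = log.poll("new-billing-service", max_events=5)
slow = log.poll("new-reporting-service", max_events=2)
```

The slower consumer simply has a larger lag; it neither blocks the producer nor affects the faster consumer.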

2. Real-Time Replication with Persistence

Unlike traditional migration methods, data streaming supports:

- Real-time synchronization of data between legacy and modern systems

- Durable persistence to handle retries, reprocessing, and recovery

- Scalability across multiple environments (on-prem, cloud, hybrid)

This ensures data consistency without downtime, making migrations smooth and reliable.
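The role of persistence in recovery can be sketched as follows. The example assumes change events carry a key and a version, and that the target applies them idempotently; `ModernStore`, `sync`, and the policy records are hypothetical, and the simulated crash stands in for any failure that happens before progress is committed.

```python
from typing import Dict, List, Optional, Tuple

# Change events captured from a hypothetical legacy table: (key, version, value).
changes: List[Tuple[str, int, str]] = [
    ("policy-1", 1, "draft"),
    ("policy-2", 1, "draft"),
    ("policy-1", 2, "active"),
    ("policy-2", 2, "cancelled"),
]


class ModernStore:
    def __init__(self) -> None:
        self.rows: Dict[str, Tuple[int, str]] = {}
        self.applied = 0

    def upsert(self, key: str, version: int, value: str) -> None:
        current = self.rows.get(key)
        if current is not None and current[0] >= version:
            return  # already applied: replaying this event is a no-op
        self.rows[key] = (version, value)
        self.applied += 1


def sync(store: ModernStore, log: List[Tuple[str, int, str]],
         committed_offset: int, fail_at: Optional[int] = None) -> int:
    """Apply events starting at committed_offset and return the new committed offset.

    fail_at simulates a crash before the offset is committed.
    """
    for offset in range(committed_offset, len(log)):
        if offset == fail_at:
            return committed_offset  # crash: progress was not committed
        store.upsert(*log[offset])
    return len(log)


store = ModernStore()
offset_after_crash = sync(store, changes, 0, fail_at=3)  # three events applied, then "crash"
final_offset = sync(store, changes, offset_after_crash)  # replay from the last committed offset
```

Because the events are still in the log and the upsert ignores versions it has already seen, the replay repeats work safely and the new store ends up in the same state as if no failure had occurred.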

3. Supports Any Technology and Communication Paradigm

Data streaming’s power lies in its event-driven architecture, which supports any integration style—without compromising scalability or real-time capabilities.

The data product approach using Kafka Topics with data contracts handles:

- Real-time messaging for low-latency communication and operational systems

- Batch processing for analytics, reporting and AI/ML model training

- Request-response for APIs and point-to-point integration with external systems

- Hybrid integration—syncing legacy databases with cloud apps, uni- or bi-directionally

This flexibility lets organizations modernize at their own pace, using the right communication pattern for each use case while unifying operational and analytical workloads on a single platform.
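A data contract can be as simple as a typed, validated event definition that every producer must satisfy. The sketch below uses a plain Python dataclass for illustration; `ClaimSubmitted`, its fields, and `parse_claim` are hypothetical. In a Kafka deployment this role is typically played by a schema registry with Avro, Protobuf, or JSON Schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClaimSubmitted:
    """Hypothetical contract for events on a 'claims' topic."""
    claim_id: str
    amount_cents: int
    currency: str

    def __post_init__(self) -> None:
        if not self.claim_id:
            raise ValueError("claim_id must not be empty")
        if self.amount_cents < 0:
            raise ValueError("amount_cents must be non-negative")
        if len(self.currency) != 3:
            raise ValueError("currency must be a 3-letter code")


def parse_claim(raw: dict) -> ClaimSubmitted:
    """Reject records that do not match the contract before they are published."""
    return ClaimSubmitted(
        claim_id=str(raw["claim_id"]),
        amount_cents=int(raw["amount_cents"]),
        currency=str(raw["currency"]).upper(),
    )


def is_valid(raw: dict) -> bool:
    try:
        parse_claim(raw)
        return True
    except (KeyError, ValueError, TypeError):
        return False


valid = parse_claim({"claim_id": "C-1", "amount_cents": 12500, "currency": "eur"})
```

Real-time, batch, and request-response consumers can then all rely on the same event shape, regardless of how they read it.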

4. No Time Pressure – Migrate at Your Own Pace

One of the biggest advantages of the Strangler Fig Pattern with data streaming is flexibility in timing.

- No need for overnight cutovers—migrate module by module

- Adjust pace based on business needs—modernization can align with other priorities

- Handle delays without data loss—thanks to durable event storage

This ensures that companies don’t rush into risky migrations but instead execute transitions with confidence.

5. Intelligent Processing in the Data Migration Pipeline

In a Strangler Fig Pattern, it’s not enough to just move data from old to new systems—you also need to transform, validate, and enrich it in motion.

While Apache Kafka provides the backbone for real-time event streaming and durable storage, Apache Flink adds powerful stream processing capabilities that elevate the modernization journey.

Apache Flink brings the following capabilities to the migration pipeline:

- Real-time preprocessing: Clean, filter, and enrich legacy data before it’s consumed by modern systems.

- Data product migration: Transform old formats into modern, domain-driven event models.

- Improved data quality: Validate, deduplicate, and standardize data in motion.

- Reusable business logic: Centralize processing logic across services without embedding it in application code.

- Unified streaming and batch: Support hybrid workloads through one engine.

Apache Flink allows you to roll out trusted, enriched, and well-governed data products—gradually, without disruption. Together with Kafka, it provides the real-time foundation for a smooth, scalable transition from legacy to modern.
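The kind of in-flight processing described above can be illustrated with plain Python generators. This is not Flink's API; it only mirrors the validate, deduplicate, and transform steps. The legacy field names (`CUST_NO`, `AMT`, `STATUS`) and the target event shape are assumptions made for the example.

```python
from decimal import Decimal, InvalidOperation
from typing import Dict, Iterable, Iterator

# Hypothetical legacy records: cryptic field names, everything typed as a string.
legacy_records = [
    {"CUST_NO": "0042", "AMT": "19.99", "STATUS": "A"},
    {"CUST_NO": "0042", "AMT": "19.99", "STATUS": "A"},   # duplicate
    {"CUST_NO": "", "AMT": "5.00", "STATUS": "A"},        # invalid: no customer number
    {"CUST_NO": "0007", "AMT": "100.00", "STATUS": "X"},  # valid, but inactive
]


def validate(records: Iterable[Dict[str, str]]) -> Iterator[Dict[str, str]]:
    """Drop records with a missing customer number or an unparseable amount."""
    for record in records:
        if not record["CUST_NO"].isdigit():
            continue
        try:
            Decimal(record["AMT"])
        except InvalidOperation:
            continue
        yield record


def deduplicate(records: Iterable[Dict[str, str]]) -> Iterator[Dict[str, str]]:
    """Drop exact repeats. A real stream processor would bound this state, e.g. with a TTL."""
    seen = set()
    for record in records:
        fingerprint = tuple(sorted(record.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            yield record


def to_domain_event(records: Iterable[Dict[str, str]]) -> Iterator[dict]:
    """Map the legacy layout to a typed, domain-oriented event."""
    for record in records:
        yield {
            "customer_id": int(record["CUST_NO"]),
            "amount_cents": int(Decimal(record["AMT"]) * 100),
            "active": record["STATUS"] == "A",
        }


events = list(to_domain_event(deduplicate(validate(legacy_records))))
```

Each stage is independent and reusable, so the same cleaning logic serves every downstream consumer instead of being reimplemented in each new service.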

Allianz’s Digital Transformation and Transition to Hybrid Cloud: An IT Modernization Success Story

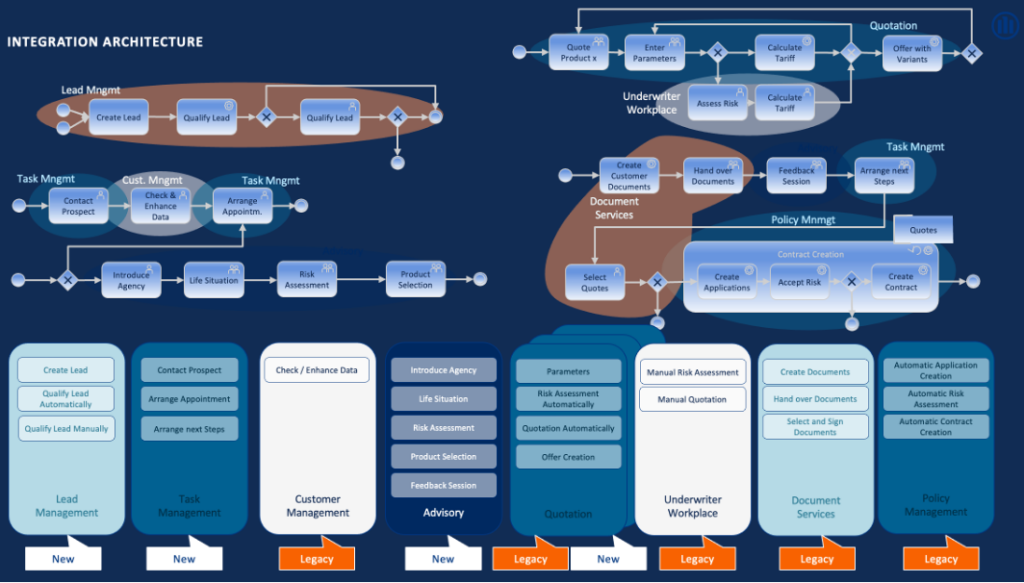

Allianz, one of the world’s largest insurers, set out to modernize its core insurance systems while maintaining business continuity. A full rewrite was too risky, given the critical nature of insurance claims and regulatory requirements. Instead, Allianz embraced the Strangler Fig Pattern with data streaming.

This approach allowed an incremental, low-risk transition. By implementing data streaming with Kafka as an event backbone, Allianz could gradually migrate from legacy mainframes to a modern, scalable cloud architecture. This ensured that new microservices could process real-time claims data, improving speed and efficiency without disrupting existing operations.

To achieve this IT modernization, Allianz leveraged automated microservices, real-time analytics, and event-driven processing to enhance key operations, such as pricing, fraud detection, and customer interactions.

A crucial component of this shift was the Core Insurance Service Layer (CISL), which enabled decoupling applications via a data streaming platform to ensure seamless integration across various backend systems.

With the goal of migrating over 75% of its applications to the cloud, Allianz significantly increased agility, reduced operational complexity, and minimized technical debt, positioning itself for long-term digital success, as you can read (in German) in the CIO.de article:

“As one of the largest insurers in the world, Allianz plans to migrate over 75 percent of its applications to the cloud and modernize its core insurance system.”

Event-Driven Innovation: Community and Knowledge Sharing at Allianz

Beyond technology, Allianz recognized that successful modernization also required cultural transformation. To drive internal adoption of event-driven architectures, the company launched Allianz Eventing Day—an initiative to educate teams on the benefits of Kafka-based streaming.

Hosted in partnership with Confluent, the event brought together Allianz experts and industry leaders to discuss real-world implementations, best practices, and future innovations. This collaborative environment reinforced Allianz’s commitment to continuous learning and agile development, ensuring that data streaming became a core pillar of its IT strategy.

Allianz also extended this engagement to the broader insurance industry, organizing the first Insurance Roundtable on Event-Driven Architectures with top insurers from across Germany and Switzerland. Experts from Allianz, Generali, HDI, Swiss Re, and others exchanged insights on topics like real-time data analytics, API decoupling, and event discovery. The discussions highlighted how streaming technologies drive competitive advantage, allowing insurers to react faster to customer needs and continuously improve their services. By embracing domain-driven design (DDD) and event storming, Allianz ensured that event-driven architectures were not just a technical upgrade, but a fundamental shift in how insurance operates in the digital age.

The Future of IT Modernization and Legacy Migrations with Strangler Fig using Data Streaming

The Strangler Fig Pattern is the most pragmatic approach to modernizing enterprise systems, and data streaming makes it even more powerful.

By decoupling old and new systems, enabling real-time synchronization, and supporting multiple architectures, data streaming with Apache Kafka and Flink provides the flexibility, reliability, and scalability needed for successful migrations and long-term integrations between legacy and cloud-native applications.

As enterprises continue to evolve, this approach ensures that modernization doesn’t become a bottleneck, but a business enabler. If your organization is considering legacy modernization, data streaming is the key to making the transition seamless and low-risk.

Are you ready to transform your legacy systems without the risks of a big bang rewrite? What’s one part of your legacy system you’d “strangle” first—and why? If you could modernize just one workflow with real-time data tomorrow, what would it be?
