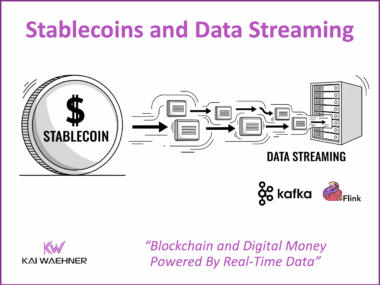

How Stablecoins Use Blockchain and Data Streaming to Power Digital Money

Stablecoins are reshaping digital money by linking traditional finance with blockchain technology. Built for stability and speed, they enable real-time payments, settlements, and programmable financial services. To operate reliably at scale, stablecoin systems require continuous data movement and analysis across ledgers, compliance tools, and banking platforms. A Data Streaming Platform built on technologies like Apache Kafka and Apache Flink can provide this foundation by ensuring that transactions, reserves, and risk signals are processed continuously, with low latency, and consistently across systems. Together, blockchain, data streaming, and AI pave the way for a new financial infrastructure that runs on live, contextual data.
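To make "processing reserves and risk signals consistently" more concrete, here is a minimal sketch in plain Python of a stateful stream check that tracks circulating supply against reserves and raises an alert when coverage drops below a threshold. The event schema (`Event`, `Alert`), the function `monitor_reserves`, and the 1:1 coverage rule are hypothetical assumptions for illustration. The sketch does not use Kafka or Flink APIs; in a real deployment the events would typically arrive from Kafka topics and the running state would live in a stateful stream processor such as Flink.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Literal


# Hypothetical event schema (assumption, not a real stablecoin API).
# Amounts are integers in minor units (e.g. cents) to avoid float rounding on money.
@dataclass(frozen=True)
class Event:
    kind: Literal["mint", "burn", "reserve"]
    amount: int  # tokens minted/burned, or the latest attested reserve balance


@dataclass(frozen=True)
class Alert:
    supply: int
    reserves: int
    coverage: float  # reserves / supply at the time of the alert


def monitor_reserves(events: Iterable[Event], min_coverage: float = 1.0) -> Iterator[Alert]:
    """Keep running state (supply, reserves) over an event stream and yield an
    Alert whenever reserves / supply falls below min_coverage."""
    supply = 0
    reserves = 0
    for ev in events:
        if ev.kind == "mint":
            supply += ev.amount
        elif ev.kind == "burn":
            supply -= ev.amount
            if supply < 0:
                raise ValueError("burned more tokens than are in circulation")
        elif ev.kind == "reserve":
            reserves = ev.amount  # a new attestation replaces the previous balance
        else:
            raise ValueError(f"unknown event kind: {ev.kind}")

        # Coverage is only meaningful once tokens are in circulation.
        if supply > 0:
            coverage = reserves / supply
            if coverage < min_coverage:
                yield Alert(supply, reserves, coverage)


if __name__ == "__main__":
    stream = [
        Event("reserve", 100_000),
        Event("mint", 80_000),
        Event("mint", 30_000),     # supply now exceeds reserves -> alert
        Event("reserve", 120_000),  # reserves topped up, coverage restored
        Event("burn", 10_000),
    ]
    for alert in monitor_reserves(stream):
        print(f"coverage {alert.coverage:.3f}: supply={alert.supply}, reserves={alert.reserves}")
```

Emitting alerts from a generator mirrors how a streaming job reacts to each event as it arrives instead of reconciling balances in a nightly batch.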