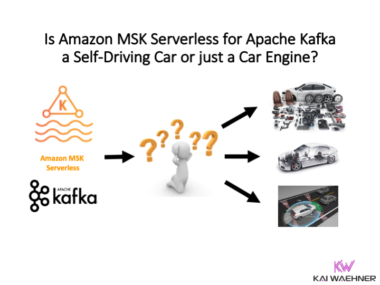

When NOT to choose Amazon MSK Serverless for Apache Kafka?

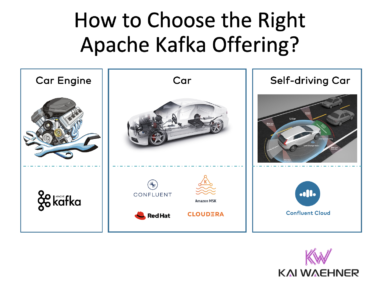

Apache Kafka has become the de facto standard for data streaming. Various cloud offerings have emerged and improved in recent years. Amazon MSK Serverless is the latest Kafka product from AWS. This blog post looks at its capabilities to explore how it relates to the “normal”, partially managed Amazon MSK, when the serverless version is a good choice, and when other fully managed cloud services like Confluent Cloud are the better option.